As a team essential to serving millions of web and mobile requests for real-estate information, the Data Science and Engineering (DSE) team at Zillow collects, processes, analyzes and delivers tons of data every day. It is not only the sheer size of the data but also the continually evolving business needs that make ETL jobs so challenging. The team had been striving to find a platform that would make authoring and managing ETL pipelines much easier, and it was in 2016 that we met Airflow.

What Is Airflow and Why Did We Choose It?

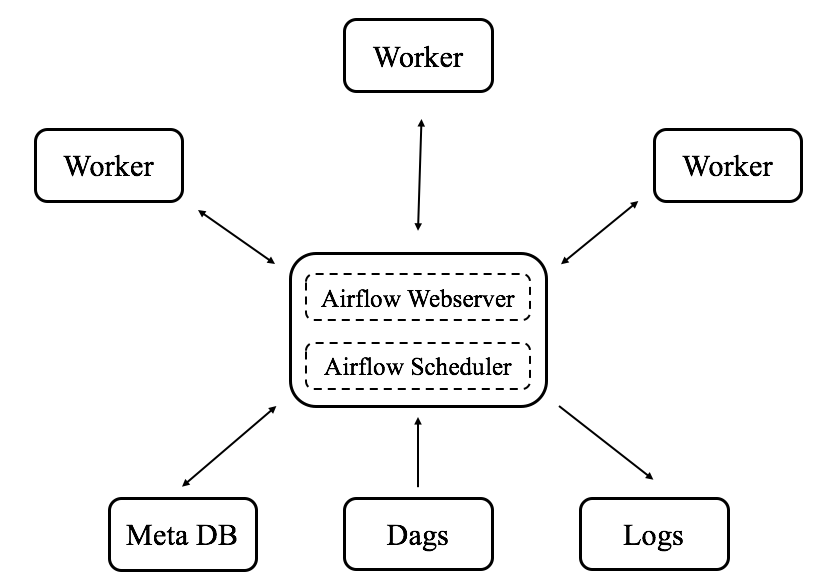

Airflow, an Apache project open-sourced by Airbnb, is a platform to author, schedule and monitor workflows and data pipelines. Airflow jobs are described as directed acyclic graphs (DAGs), which define pipelines by specifying what tasks to run, what dependencies they have, the job priority, how often to run, when to start and stop, what to do on job failures and retries, etc. Typically, Airflow works in a distributed setting, as shown in the diagram above. The Airflow scheduler schedules jobs according to the schedules and dependencies defined in the DAG, and the Airflow workers pick up and run jobs with their load properly balanced. All job information is stored in the meta DB, which is continuously kept up to date. Users can monitor their jobs via the Airflow web UI as well as the logs.
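To make the DAG idea concrete, here is a minimal plain-Python sketch of what "dependencies plus retries" means for execution order. It is not Airflow's API: it uses the standard-library `graphlib` module (Python 3.9+), and the task names and the `run_pipeline` helper are hypothetical. Each task runs only after all of its upstream tasks, a failed task is retried up to `max_retries` times, and a cyclic graph is rejected.

```python
from graphlib import TopologicalSorter

# Hypothetical ETL pipeline: each task maps to the set of upstream tasks it depends on.
PIPELINE = {
    "extract_listings": set(),
    "extract_prices": set(),
    "transform": {"extract_listings", "extract_prices"},
    "load": {"transform"},
}


def run_pipeline(dag, run_task, max_retries=1):
    """Run every task after its upstream tasks, retrying a failed task up to max_retries times.

    Returns the tasks in the order they completed. TopologicalSorter raises
    graphlib.CycleError if the graph is not acyclic.
    """
    completed = []
    for task in TopologicalSorter(dag).static_order():
        for attempt in range(max_retries + 1):
            try:
                run_task(task)
                break  # the task succeeded, so move on to the next one
            except Exception:
                if attempt == max_retries:
                    raise  # out of retries: stop here, so downstream tasks never run
        completed.append(task)
    return completed


if __name__ == "__main__":
    print(run_pipeline(PIPELINE, lambda task: print("running", task)))
```

In Airflow itself the same information lives in the DAG file (operators, their upstream/downstream links and per-task retry settings), and the scheduler and workers do the ordering and retrying for you.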

Why did we choose Airflow? Among the top reasons, Airflow provides:

- Pipelines configured as code (Python), allowing for dynamic pipeline generation

- A rich set of built-in operators and executors, with the option to write your own

- High scalability: workers can be added or removed easily

- Flexible task dependency definitions with subdags and task branching

- Flexible schedule settings and backfilling

- Inherent support for task priority settings and load management

- Various types of connections to use: DB, S3, SSH, HDFS, etc.

- Nice logging and alerting

- Fantastic web UI showing graph view, tree view, task duration, number of retries and more
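Backfilling, mentioned in the list above, means creating runs for past schedule intervals that never ran, for example after deploying a DAG with an earlier start date or after an outage. The following is a simplified model in plain Python, not Airflow's scheduler code; the function names are hypothetical, the interval is assumed to be a fixed number of whole days, and the end date is treated as inclusive. Each run is labeled by the start of the interval it processes, roughly mirroring Airflow's execution-date convention.

```python
from datetime import date, timedelta


def schedule_dates(start, end, interval_days=1):
    """Logical dates of every scheduled run from start to end (both inclusive).

    Simplified model: a fixed interval counted in whole days. Each date labels
    the data interval [d, d + interval_days) that its run processes.
    """
    if interval_days < 1:
        raise ValueError("interval_days must be at least 1")
    step = timedelta(days=interval_days)
    dates = []
    current = start
    while current <= end:
        dates.append(current)
        current += step
    return dates


def runs_to_backfill(start, end, completed, interval_days=1):
    """Scheduled dates in [start, end] that have no completed run yet."""
    return [d for d in schedule_dates(start, end, interval_days) if d not in completed]


if __name__ == "__main__":
    done = {date(2017, 1, 2), date(2017, 1, 4)}
    print(runs_to_backfill(date(2017, 1, 1), date(2017, 1, 5), done))
```

Only the gaps (here January 1, 3 and 5) are scheduled, which is what makes backfilling cheap to re-run after a partial failure.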

Airflow is Even More Fantastic at Zillow

Airflow makes authoring and running ETL jobs very easy, but we also wanted to automate the development lifecycle and the management of the Airflow backend. Our goals are:

- Full CI/CD Compliance: Every push or merge to the Airflow DAG repo is integrated and deployed automatically, without human intervention

- Instant Backend Update: Updating the Airflow cluster is just one command away

- High Scalability: Adding or removing workers easily via instant backend update

- Automatic Service Recovery: Airflow backend services (webserver/scheduler/workers) will be recovered automatically if they are dead or unhealthy

- Resource Utilization Monitoring: Resource utilization statistics are visible in real time

- High Log Availability: Both DAG and backend logs are available, visualizable and analyzable

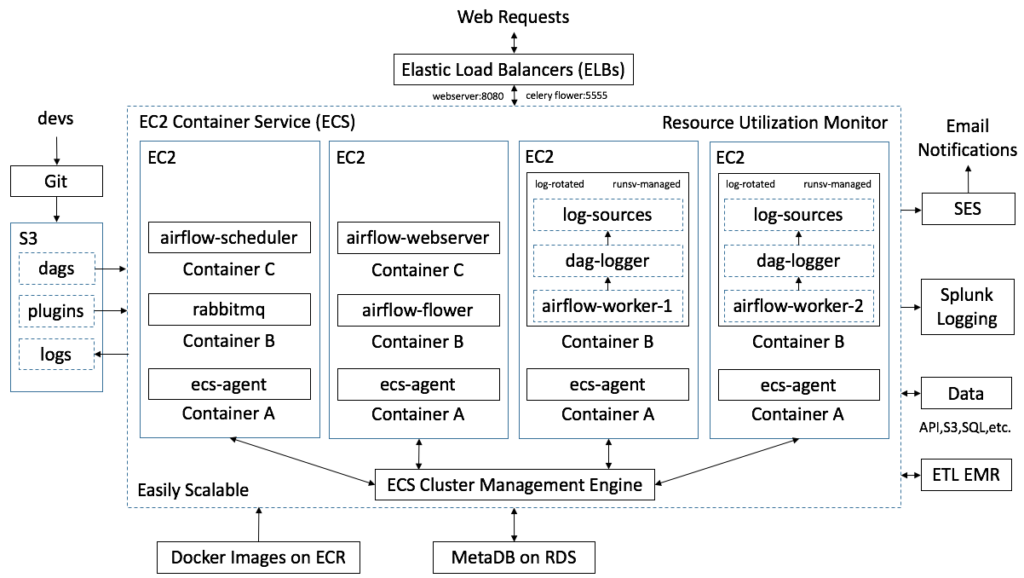

These challenging goals are achieved by combining Airflow with several popular, cutting-edge technologies, including but not limited to Amazon Web Services (AWS), Docker and Splunk. The diagram above shows how an Airflow cluster works for Zillow's DSE team, and an explanation of each component follows.

- Amazon EC2 Container Service (ECS): The Airflow cluster is hosted in an Amazon ECS cluster, which makes Airflow Docker-managed and easily scalable, recovers its services automatically and makes resource utilization visible

- Amazon EC2 Container Registry (ECR) and Docker Hub: On top of the hardware (EC2 instances), all backend services are containerized (dockerized), and the images are either stored in Amazon ECR (private images) or pulled from Docker Hub (public images)

- Amazon Relational Database Service (RDS): Airflow's meta DB is a PostgreSQL database hosted on Amazon RDS

- RabbitMQ: We use RabbitMQ as the message broker because it is feature-complete, stable and resistant to data loss compared with alternatives such as Redis, Amazon SQS and ZooKeeper

- Amazon Simple Storage Service (S3): Amazon S3 stores Airflow DAGs, plugins and logs, making it an essential storage layer in the middle of the CI/CD process

- Amazon Elastic Load Balancer (ELB): Amazon ELBs serve web UI requests (airflow-webserver and airflow-flower) and also provide internal service discovery (for RabbitMQ)

- Amazon Simple Email Service (SES): Amazon SES is adopted as the email server for Airflow notifications

- Amazon Elastic MapReduce (EMR): Airflow at Zillow is used primarily for task scheduling and for executing small jobs, while heavy jobs (Spark, Hive, etc.) are submitted to an Amazon EMR cluster via SSHExecuteOperator

- Splunk: Both Airflow DAG logs and backend logs are directed to Splunk, which provides powerful support for log visualization and analysis
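One way to wire these components together is through Airflow's convention of overriding any config option with an `AIRFLOW__{SECTION}__{KEY}` environment variable, which fits well with containers on ECS. The sketch below is an assumption about how such settings could be assembled, not Zillow's actual configuration: the helper names, hostnames and credentials are hypothetical, the key names follow the Airflow 1.10-era layout (some moved in later releases), and SES SMTP credentials (`smtp_user`/`smtp_password`) are omitted for brevity.

```python
def airflow_environment(rds_host, rabbitmq_host, smtp_host, db_password, mq_password):
    """Build Airflow settings as AIRFLOW__{SECTION}__{KEY} environment variables.

    Hypothetical helper. Key names follow the Airflow 1.10-era config layout;
    e.g. sql_alchemy_conn lives under [database] from Airflow 2.3 on.
    Passing passwords as plain env vars is for illustration only.
    """
    db = f"airflow:{db_password}@{rds_host}:5432/airflow"
    return {
        # Celery workers pull queued tasks from the message broker.
        "AIRFLOW__CORE__EXECUTOR": "CeleryExecutor",
        # Meta DB: PostgreSQL (e.g. hosted on RDS).
        "AIRFLOW__CORE__SQL_ALCHEMY_CONN": f"postgresql+psycopg2://{db}",
        # Broker: RabbitMQ reached via an internal load balancer; "//" selects vhost "/".
        "AIRFLOW__CELERY__BROKER_URL": f"amqp://airflow:{mq_password}@{rabbitmq_host}:5672//",
        # Celery task results stored in the same PostgreSQL database.
        "AIRFLOW__CELERY__RESULT_BACKEND": f"db+postgresql://{db}",
        # Email notifications through an SMTP endpoint such as Amazon SES.
        "AIRFLOW__SMTP__SMTP_HOST": smtp_host,
        "AIRFLOW__SMTP__SMTP_PORT": "587",
        "AIRFLOW__SMTP__SMTP_STARTTLS": "True",
    }


def to_ecs_environment(env):
    """Convert a dict into the name/value list used by an ECS container definition."""
    return [{"name": key, "value": value} for key, value in sorted(env.items())]


if __name__ == "__main__":
    env = airflow_environment(
        "airflow-meta.example.rds.amazonaws.com",  # hypothetical RDS endpoint
        "rabbitmq.internal.example",               # hypothetical internal ELB name
        "email-smtp.us-west-2.amazonaws.com",
        "db-secret",
        "mq-secret",
    )
    for item in to_ecs_environment(env):
        print(item["name"])
```

Because the webserver, scheduler and workers all read the same variables, one shared environment block keeps every service in the cluster pointed at the same meta DB and broker.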

To smooth the entire development lifecycle, we created three independent Airflow environments, which are essentially three separate Airflow clusters:

- airflow-local: Each developer can launch his/her own local Airflow cluster on a dev machine to develop DAGs locally. Thanks to Docker, launching an Airflow cluster is just a few commands away, and a cookiecutter-enabled DAG archetype is also available for a quick start

- airflow-staging: Each Airflow DAG that passes local tests should go through airflow-staging, which mirrors the environment of airflow-prod with all necessary external connections

- airflow-prod: An Airflow DAG will be promoted to airflow-prod only when it passes all necessary tests in both airflow-local and airflow-staging
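The promotion rule above (local, then staging, then prod, with each step gated on the previous one) can be expressed as a small check. This is a hypothetical helper for illustration, not the team's actual CI tooling; it assumes test results are reported per environment as a simple pass/fail flag.

```python
# The three environments, in promotion order.
ENVIRONMENTS = ("airflow-local", "airflow-staging", "airflow-prod")


def deployable_environments(passed):
    """Return the environments a DAG may currently be deployed to.

    `passed` maps an environment name to True if the DAG passed all of its
    tests there. Every DAG may run in airflow-local; it may enter each later
    environment only if it passed in every earlier one.
    """
    allowed = [ENVIRONMENTS[0]]
    for current, following in zip(ENVIRONMENTS, ENVIRONMENTS[1:]):
        if not passed.get(current, False):
            break  # stop at the first environment whose tests did not pass
        allowed.append(following)
    return allowed


if __name__ == "__main__":
    print(deployable_environments({"airflow-local": True}))
```

Stopping at the first failure means a DAG that somehow passed staging but not local still cannot reach prod, which matches the requirement that it pass both earlier environments.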

The Current and Future of Airflow at Zillow

Since we created our first data pipeline using Airflow in late 2016, we have been very active in leveraging the platform to author and manage ETL jobs. People love it, because they have found it much easier to simply plant their jobs on this platform than to create and manage self-scheduled standalone services. At the time of writing, Airflow at Zillow serves around 30 ETL pipelines across the team and processes a large volume and wide variety of data every day.

Given the rapid growth in usage over the past few months, we expect Airflow to take care of more and more ETL jobs, and potentially to expand to more teams that deal with data every day. We are also working on sharing Airflow job information with other systems, as part of an effort to build a seamlessly connected ecosystem for Data Science and Engineering.