My Internship at Zillow Group AI Part 1: Attribute Recognition in Real Estate Listings

Zillow Group is the leading real estate and rental marketplace that serves the full lifecycle of owning and living in a home: buying, selling, renting, financing, remodeling and more. ZG has a database of more than 110 million real estate listings and a huge amount of multi-modal data (listing images, text descriptions, geo-location and structured data). In a previous blog post, the ZG AI team shared how they use this data to build engaging product features and improve user experience.

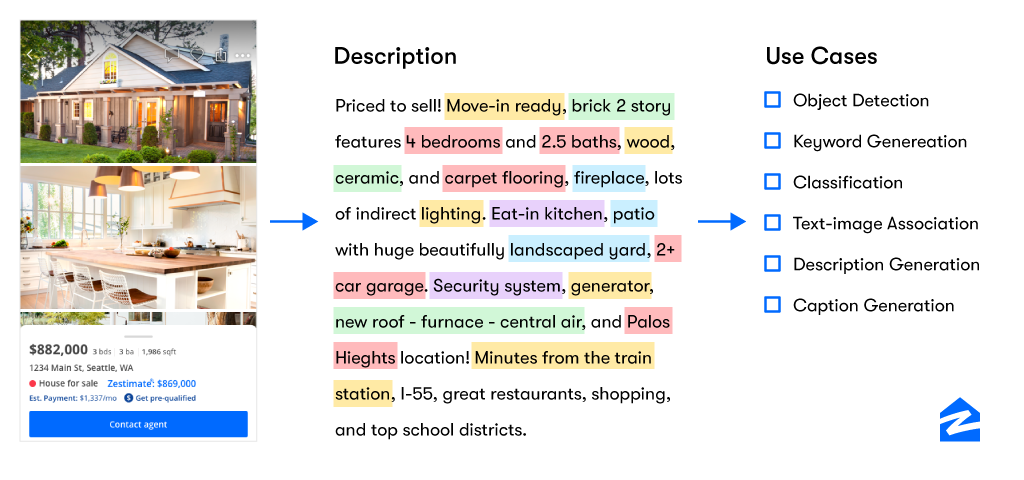

The rich multimodal database at Zillow provides unique opportunities to extract rich information and build systems for a variety of use cases.

As my internship project, I built upon this work to identify real estate attributes in listing images using listing descriptions as weak supervision. In this setting, attributes refer to real estate concepts such as an open kitchen, granite counters, hardwood floors, stainless steel appliances, etc. Traditional supervised approaches require large amounts of well-annotated image-level data for training. Instead, we wanted to leverage weak labels generated programmatically from our large collection of listing descriptions. These labels are abundant and require minimal human supervision to create.

Each real estate listing at ZG comes with a rich text description and a set of images. In order to generate associations between an image and the keywords in the description, the AI team has developed an algorithm that exploits semantic relations between scene type and keywords to weakly associate keywords in descriptions to the individual images.

At a high level, it uses a visual model (CNN) to classify scenes in images, a keyword extraction algorithm to identify keyphrases/attributes in descriptions, a Word2Vec skip-gram text model to learn semantic relationships between keywords and scene types, and a keyword-image association procedure to attach extracted keyphrases to images of a semantically related scene type. For example, “granite countertops” will be associated with images tagged with the scene type Kitchen. We are able to run this process across millions of active listings to generate rich real estate collections for our users.
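The association step can be illustrated with a minimal, dependency-free sketch. It assumes that keywords and scene types live in the same embedding space and that a keyword is attached to an image when its cosine similarity to the image's predicted scene clears a threshold. The function names, the threshold of 0.5 and the toy 2-D vectors are all hypothetical stand-ins, not the production algorithm.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def associate_keywords(image_scenes, keywords, embeddings, threshold=0.5):
    """Attach each keyword to the images whose predicted scene is semantically close.

    image_scenes: image id -> scene type predicted by the visual model
    keywords:     keyphrases extracted from the listing description
    embeddings:   word or phrase -> vector (assumed to come from the text model)
    threshold:    hypothetical similarity cut-off
    """
    weak_labels = {image_id: [] for image_id in image_scenes}
    for image_id, scene in image_scenes.items():
        for keyword in keywords:
            if cosine(embeddings[keyword], embeddings[scene]) >= threshold:
                weak_labels[image_id].append(keyword)
    return weak_labels

# Toy 2-D vectors standing in for Word2Vec embeddings (made-up values).
embeddings = {
    "kitchen": [1.0, 0.1],
    "bathroom": [0.1, 1.0],
    "granite countertops": [0.9, 0.2],
    "walk-in shower": [0.0, 1.0],
}
image_scenes = {"img_1": "kitchen", "img_2": "bathroom"}
keywords = ["granite countertops", "walk-in shower"]

weak_labels = associate_keywords(image_scenes, keywords, embeddings)
```

Note that every kitchen image in a listing receives the keyword whether or not the countertop is actually visible in it, which is one reason labels produced this way are only weak.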

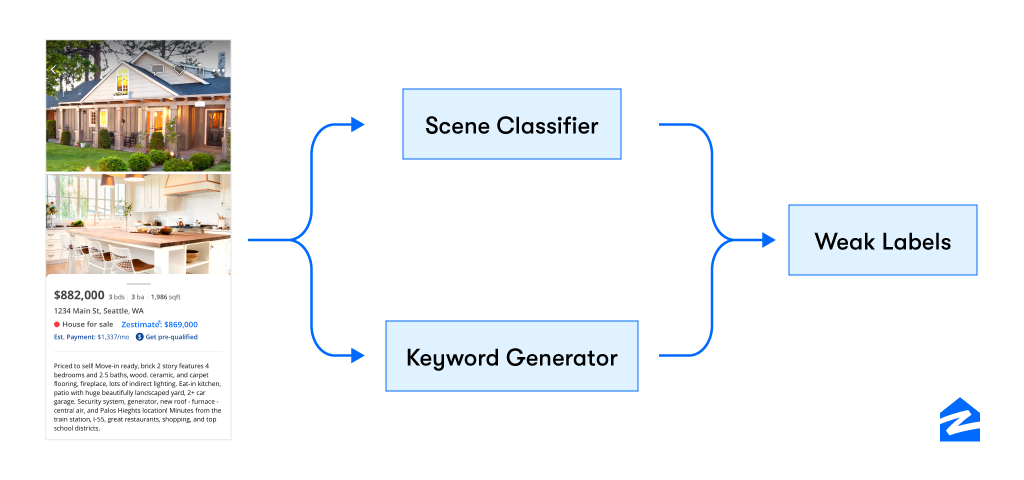

The generation process above gives us millions of labels, but it makes certain assumptions about the completeness of the descriptions and images as well as the precision of the underlying models. For this reason, we call these labels weak labels.

Weak-label data generation process. It is easy to create but lacks label precision.

Our aim for this project was to explore whether we could use this large collection of weak labels as supervision to train deep learning models that detect attributes directly from images. This would both improve the accuracy of our downstream applications and give us the ability to identify attributes when descriptions are missing or incomplete.

From the outset, we identified the following key challenges with the project:

In order to better understand the data and decide on a possible course of action, we narrowed our scope to only kitchen scenes and 27 attributes. The attributes consisted of both entities and concepts covering a wide variety of features associated with kitchens such as appliances, kitchen type, floor type, cabinet types, countertop type, and lighting.

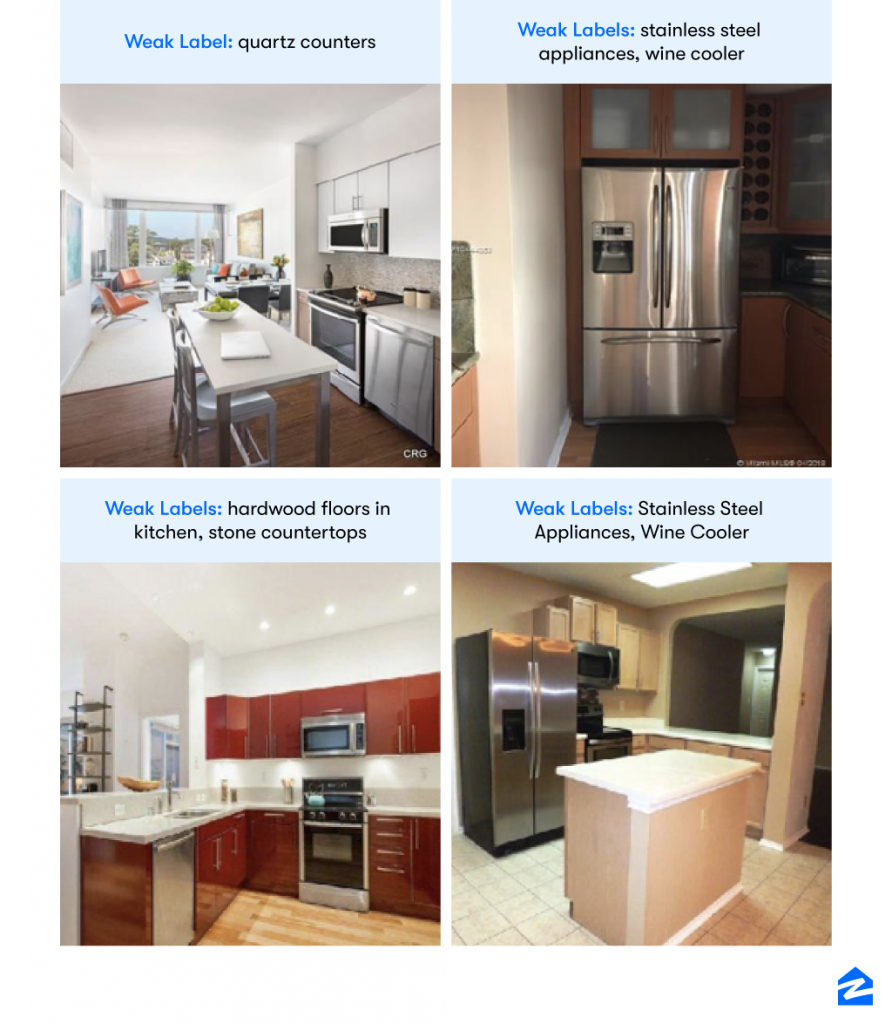

The immediate next step was to understand the label quality and assess the difficulty of classification. This understanding was crucial for the selection of loss function and model architecture. However, the only way to achieve this was through manual sampling and labeling of items across all 27 attributes. Sampling of the data revealed the following:

A few attributes were subjective and difficult to identify (especially floor, countertop, and cabinet types).

A few examples from the weakly labeled dataset. We can see the issues of low precision, incomplete labels and partial occlusion.

Based on the label quality analysis, we decided to further reduce the attribute space and restrict it to 16 attributes with slightly better label precision. We also created a validation set of 300 images by manual labeling to evaluate model performance.

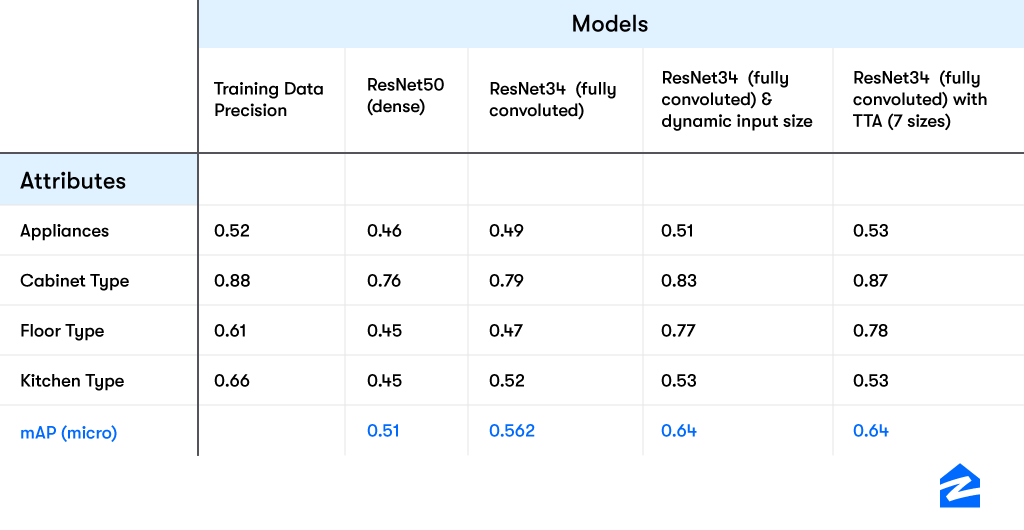

For computer vision tasks, transfer learning is usually the quickest and most efficient way to build a model. We started with a ResNet50 model trained on the Places365 dataset, open-sourced by MIT, as the base model. That model was trained on 365 scene categories, and Zillow had already been using a fine-tuned version of it for scene classification tasks. Because we were dealing with a multi-label classification problem with class imbalance, we used binary cross-entropy loss with positive-class weights, and we used micro-averaged mean average precision (mAP) as the evaluation metric.
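To make the loss and the metric concrete, here is a small pure-Python sketch. It is not the training code: the post does not name a framework, so the functions below are hypothetical helpers written from the standard definitions. The loss has the same form as the `pos_weight` option of PyTorch's `BCEWithLogitsLoss`, and the metric pools every (image, attribute) pair into one ranked list before computing average precision, which is what micro-averaging is assumed to mean here.

```python
import math

def softplus(x):
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))

def weighted_bce(logits, targets, pos_weight):
    """Mean binary cross-entropy over all (image, attribute) cells.

    logits, targets: one row per image, one column per attribute.
    pos_weight[k] > 1 up-weights the positive examples of attribute k,
    which counteracts class imbalance when positives are rare.
    """
    total, count = 0.0, 0
    for row_logits, row_targets in zip(logits, targets):
        for z, y, w in zip(row_logits, row_targets, pos_weight):
            # -log(sigmoid(z)) == softplus(-z); -log(1 - sigmoid(z)) == softplus(z)
            total += w * y * softplus(-z) + (1 - y) * softplus(z)
            count += 1
    return total / count

def micro_average_precision(scores, targets):
    """Average precision over all (image, attribute) pairs pooled together.

    Simplification: tied scores are ranked in arbitrary order rather than
    grouped into a single threshold.
    """
    pairs = sorted(
        ((s, y) for row_s, row_y in zip(scores, targets)
         for s, y in zip(row_s, row_y)),
        key=lambda pair: pair[0],
        reverse=True,
    )
    hits, precision_sum = 0, 0.0
    for rank, (_, y) in enumerate(pairs, start=1):
        if y == 1:
            hits += 1
            precision_sum += hits / rank  # precision at this positive's rank
    return precision_sum / hits if hits else 0.0
```

One common way to choose `pos_weight[k]` is the ratio of negative to positive examples for attribute `k`; whether that exact ratio was used in this project is not stated.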

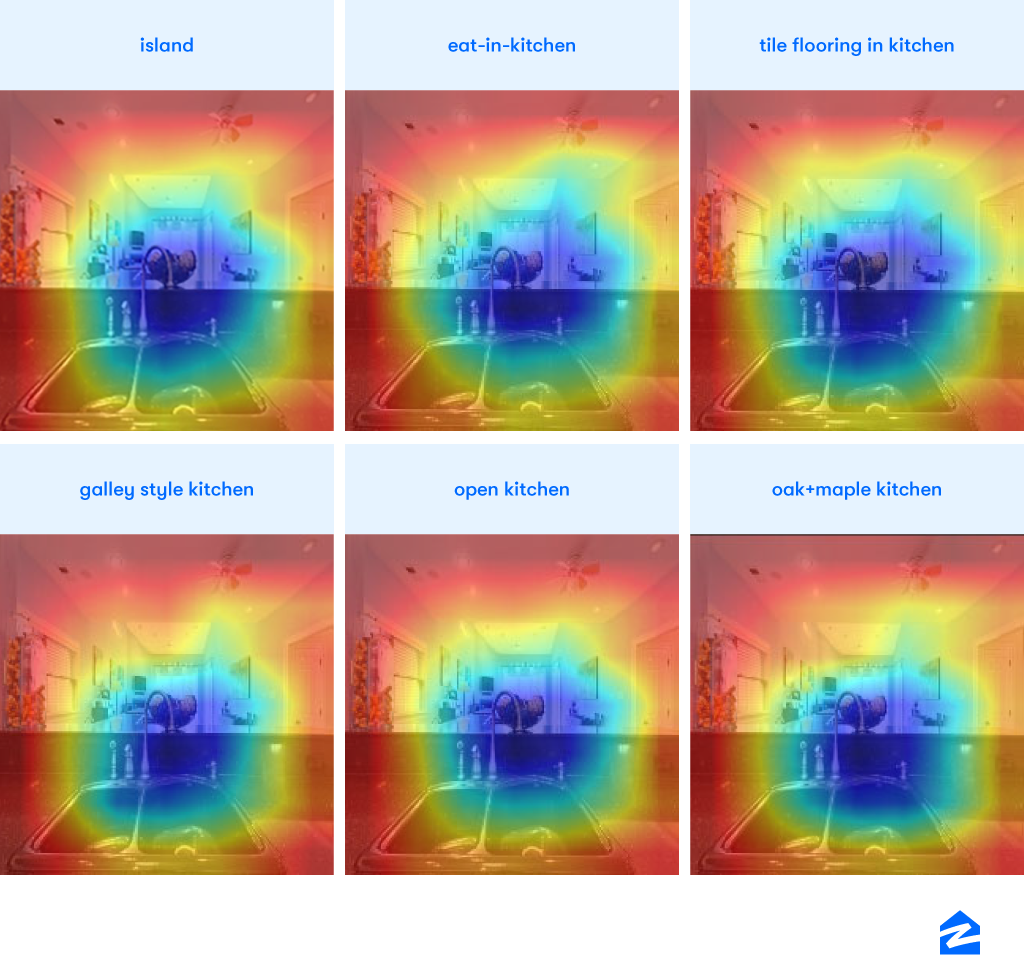

The model was fine-tuned with at least 4,000 high-quality images (filtered using an image quality score) per attribute, and we observed an mAP of around 0.47. Although the predictions on the test set looked fine for a baseline trained on a dataset whose labels were 60% precise, we were concerned that the model was learning label correlations instead of image features. One way to check this was to look at class activation maps (CAMs) and study the model's behavior. CAM is a simple technique for finding the discriminative image regions a CNN uses to identify a specific class. In other words, CAM lets us see which regions of the image are relevant to a class. The CAMs for our attributes suggested that the model was mostly learning co-occurrence, because the discriminative image regions were almost the same for all classes.

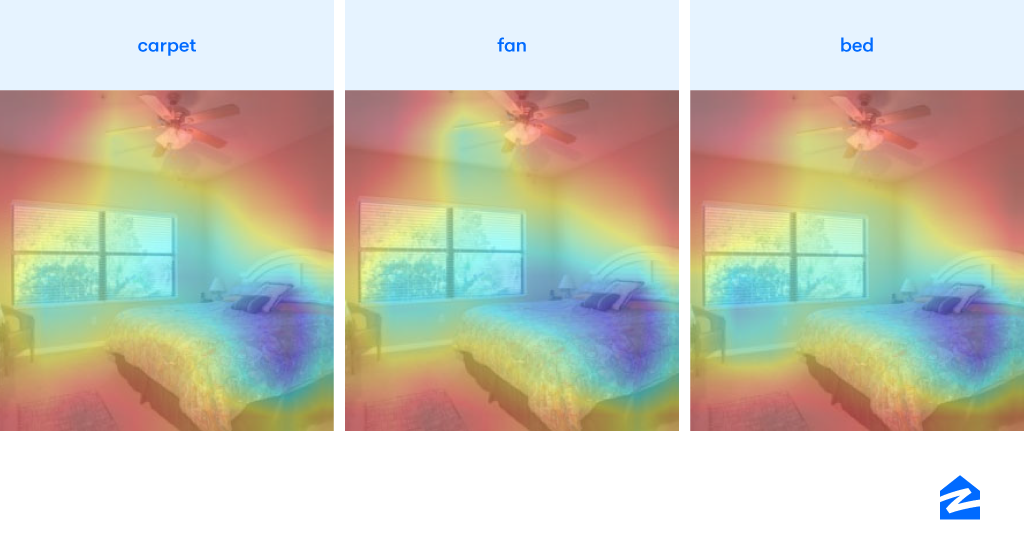

CAM of ResNet50 model pre-trained on the places365 dataset and fine-tuned on listing images. Blue shows the area of interest and it looks the same for all the classes. (The color scale is inverse of the normal representations.)
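For readers unfamiliar with CAM, the following is a minimal sketch of the computation, assuming the classic setup in which global average pooling is followed by a single linear layer. The map for a class is then the sum of the last convolutional feature maps weighted by that class's linear-layer weights. The helper names and toy values are hypothetical, and plain nested lists stand in for tensors.

```python
def class_activation_map(feature_maps, class_weights):
    """Weighted sum of feature maps for one class.

    feature_maps:  C maps of size H x W from the last convolutional layer
    class_weights: C weights connecting the pooled features to one class
    """
    height, width = len(feature_maps[0]), len(feature_maps[0][0])
    cam = [[0.0] * width for _ in range(height)]
    for fmap, weight in zip(feature_maps, class_weights):
        for i in range(height):
            for j in range(width):
                cam[i][j] += weight * fmap[i][j]
    return cam

def normalize(cam):
    """Rescale a map to [0, 1] so it can be rendered as a heat map."""
    flat = [v for row in cam for v in row]
    lo, hi = min(flat), max(flat)
    span = hi - lo
    return [[(v - lo) / span if span else 0.0 for v in row] for row in cam]

# Toy example: two 2 x 2 feature maps and made-up class weights.
feature_maps = [
    [[1.0, 0.0], [0.0, 0.0]],
    [[0.0, 0.0], [0.0, 1.0]],
]
cam = class_activation_map(feature_maps, class_weights=[2.0, -1.0])
heat = normalize(cam)
```

If the maps for different classes come out nearly identical, as they did here, the class weights are picking up the same features for every attribute, which is consistent with the model relying on co-occurrence.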

Because the dataset was weakly labeled, we were not sure what the best way forward was. We decided to apply the lessons from the paper "Deep Learning is Robust to Massive Label Noise", which suggests that larger batch sizes, appropriate learning rates and a threshold number of correct examples can help with noisy data. We also modified our model architecture based on the paper "Is object localization for free? - Weakly-supervised learning with convolutional neural networks". This paper proposes a fully convolutional architecture that helps with both localization and classification. It has the added advantage of easy model interpretability with the help of CAMs.

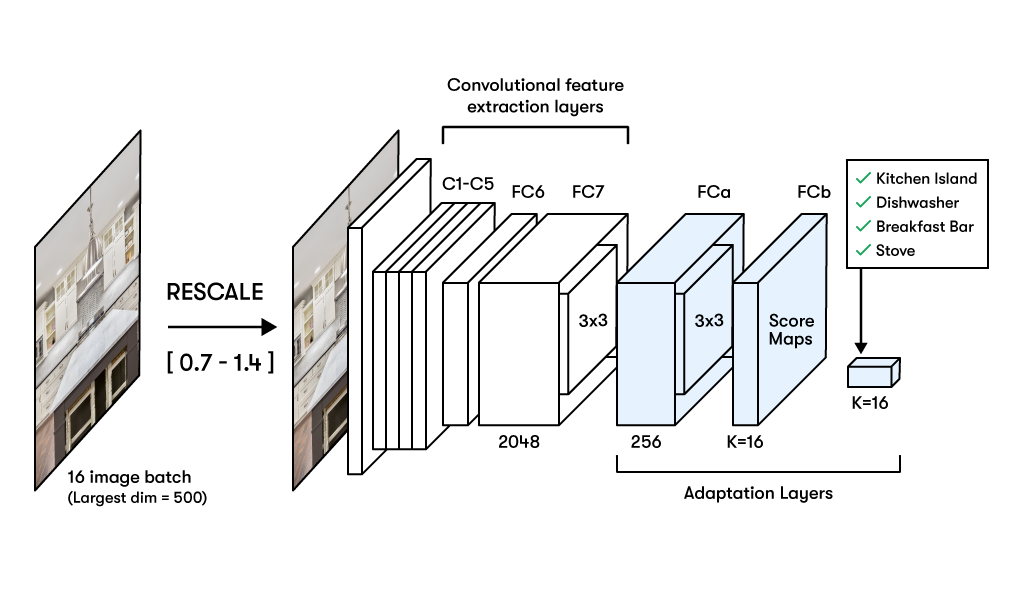

Although we formulated the problem as a multi-label classification task, the nature of the use case made it resemble object detection and localization. The model needs to focus on different parts of the image to assign attributes reliably. Inspired by the paper, we decided to use 7 layers of a ResNet model and added 2 convolutional layers in place of dense layers. The last layer had K filters (K = number of attributes) followed by global max pooling, so that we end up with a Bx1x1xK tensor, where B is the batch size. After applying a sigmoid, this tensor acts as the final prediction for each class. The suggested architecture had the following advantages:

Modified network architecture for weakly supervised training based on this paper.
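The prediction head described above can be sketched without any framework. This is only an illustration under assumptions: the last convolutional layer is taken to have already produced one score map per attribute, each image is stored channels-first (K x H x W) rather than in the Bx1x1xK layout mentioned in the text, and the function names are hypothetical.

```python
import math

def global_max_pool(score_maps):
    """Collapse K score maps of size H x W into K scalars (one per attribute)."""
    return [max(v for row in score_map for v in row) for score_map in score_maps]

def predict_attributes(batch_score_maps):
    """Turn B x K x H x W score maps into B x K probabilities.

    Max pooling keeps the strongest spatial response per attribute, and an
    element-wise sigmoid makes each attribute an independent yes/no
    prediction, as needed for multi-label classification.
    """
    return [
        [1.0 / (1.0 + math.exp(-score)) for score in global_max_pool(score_maps)]
        for score_maps in batch_score_maps
    ]

def peak_location(score_map):
    """(row, col) of the strongest response, a rough localization of the attribute."""
    _, row, col = max(
        (v, i, j) for i, r in enumerate(score_map) for j, v in enumerate(r)
    )
    return row, col

# One image, K = 2 attributes, 2 x 2 score maps (made-up values).
batch = [[
    [[0.0, 2.0], [-1.0, 0.5]],     # attribute 0 fires in the top-right cell
    [[-3.0, -2.0], [-4.0, -5.0]],  # attribute 1 is weak everywhere
]]
probs = predict_attributes(batch)
where = peak_location(batch[0][0])
```

Because the prediction for an attribute comes from a single spatial location, the gradient during training flows back to that location, which is what pushes the score maps toward localizing the attribute.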

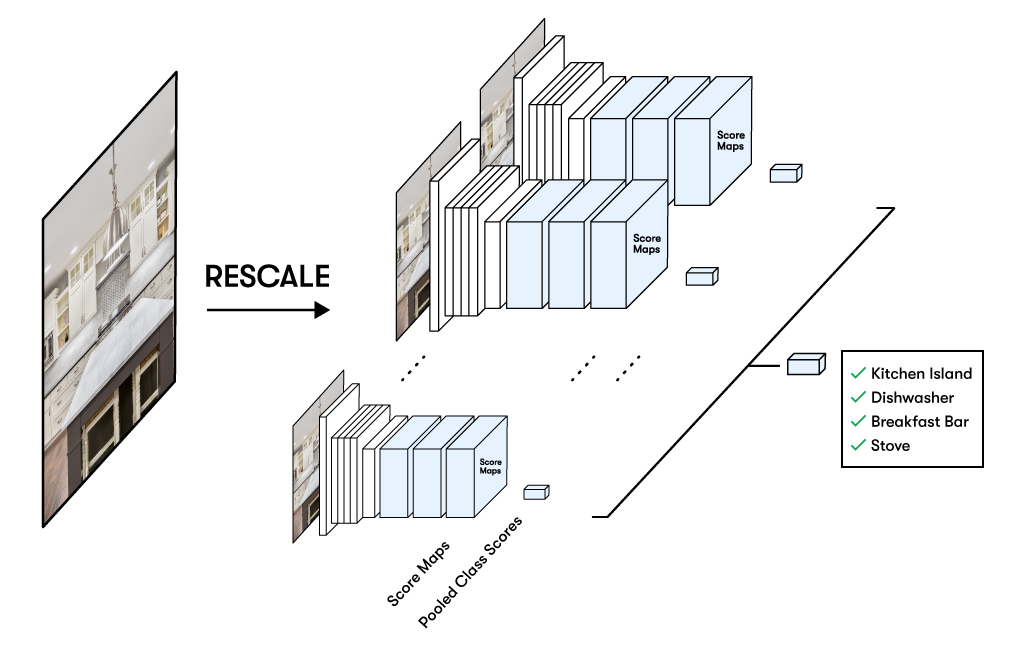

Test time augmentation incorporating multi-scale image classification

We tried various base architectures, including ResNet34 (trained on ImageNet) and ResNet50 (trained on Places365), along with different image sizes and test time augmentation (TTA), to improve model performance. For TTA, we resized each image to 7 different scales and averaged the model predictions to account for attributes that occupy only a small part of the image. As shown in the result table, the new architecture performed much better than the dense-layer architecture and was able to learn even from the weakly labeled dataset. We also observed that dynamic resizing of images during training and TTA further improved model performance, as it became easier for the model to identify even smaller objects.
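The multi-scale TTA step can be sketched as follows. The scale values, the nearest-neighbour resize and the toy model are all assumptions made for illustration; the post only states that 7 scales were used and that predictions were averaged.

```python
# Hypothetical set of 7 scales; the actual values are not given in the post.
DEFAULT_SCALES = (0.5, 0.75, 1.0, 1.25, 1.5, 1.75, 2.0)

def resize_nearest(image, scale):
    """Nearest-neighbour resize of a 2-D image stored as a list of rows."""
    height, width = len(image), len(image[0])
    new_h = max(1, round(height * scale))
    new_w = max(1, round(width * scale))
    return [
        [image[min(height - 1, int(i / scale))][min(width - 1, int(j / scale))]
         for j in range(new_w)]
        for i in range(new_h)
    ]

def predict_with_tta(model, image, scales=DEFAULT_SCALES):
    """Average per-attribute predictions over several rescaled copies of an image.

    model: any callable mapping an image to a list of per-attribute scores.
    """
    predictions = [model(resize_nearest(image, scale)) for scale in scales]
    num_attributes = len(predictions[0])
    return [
        sum(pred[k] for pred in predictions) / len(predictions)
        for k in range(num_attributes)
    ]

def toy_model(image):
    """Stand-in for a trained network: scores depend on the share of bright pixels."""
    pixels = [v for row in image for v in row]
    bright = sum(pixels) / len(pixels)
    return [bright, 1.0 - bright]

image = [[1, 0], [0, 0]]
averaged = predict_with_tta(toy_model, image, scales=(0.5, 1.0, 2.0))
```

This only works because a fully convolutional network with global pooling accepts inputs of any size; a network with fixed-size dense layers would need every copy resized back to one resolution.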

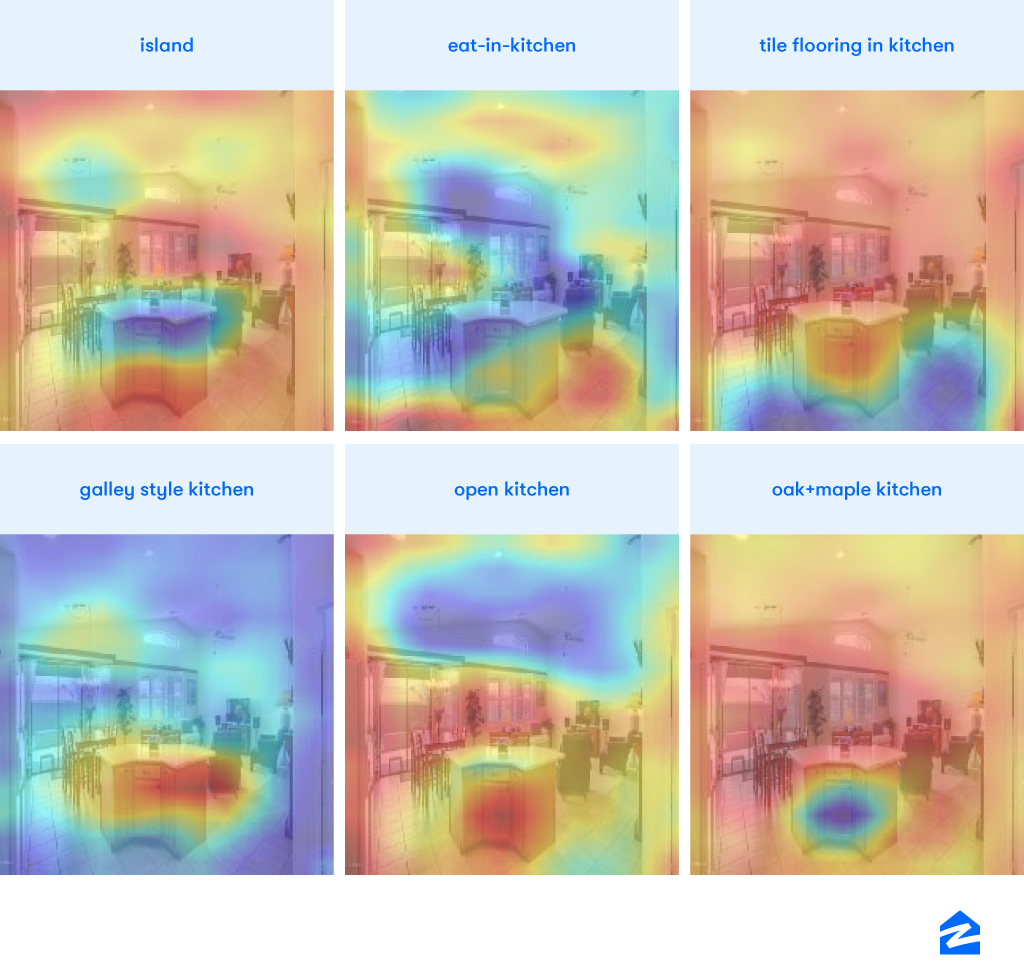

The CAMs also look much better than those of the previous architecture. We can clearly see distinct discriminative regions that match the location of each attribute.

Class activation maps with the fully convolutional architecture, using a ResNet34 base model trained on the ImageNet dataset. The activation maps look much better and show focused regions (blue) for each class. (The color scale is the inverse of the usual representation.)

After the encouraging results of phase 1, we decided to expand to more categories and check the generalizability of our models and techniques. We also wanted to validate the model pipeline and find ways to improve model performance.

The major bottleneck in expanding to a larger number of categories was the need for a validation dataset. Since hand labeling was the only way to get validation data, we wanted to use available open-source datasets to reduce this overhead. We explored the COCO and ADE20K datasets and listed the attributes they have in common with the Zillow attribute list. ADE20K had the largest attribute overlap, but the dataset was really small. COCO had only a few categories in common but had a large amount of data. We created a 25K dataset for benchmarking and validation.

We trained our model pipeline on the open-source dataset to evaluate model performance. With 30 attributes, the model had a micro-averaged mAP of 0.734, which was reassuring. We also observed that model performance was poor for attributes with fewer than 500 images. Next, we wanted to create a baseline for weakly labeled data by training on our weakly labeled data and validating against the open-source dataset. To our surprise, the model performed badly, which revealed the next set of issues:

For a few classes, the labeling criteria for the attributes differed between the Zillow and open-source datasets. To have a consistent evaluation dataset and metric, we labeled 700 images to act as the validation dataset.

As the number of attribute classes increased, the model's localization deteriorated. To tackle this problem, we decided to try different loss functions, training techniques, and architectures. During our initial experimentation, we had found that the fully convolutional network performed better than the dense-layer network. However, that conclusion was based on a dense-layer network that used ResNet50 pre-trained on the Places365 dataset. To separate the effect of the architecture from the effect of the pre-training dataset, we tried dense-layer networks with ResNet34 trained on ImageNet data. To our surprise, this architecture performed better than the fully convolutional network trained on the same dataset. This may be due to the nature of scene classification, which needs the model to focus on the whole image instead of certain parts of it. However, the CAMs still did not look convincing, as shown below:

CAM activation of a ResNet34 model with dense layers trained on imagenet dataset.

GradCAM activation of a ResNet34 model with dense layers trained on imagenet dataset. (The color scale is inverse of the normal representations.)

To solve this mystery, we tried GradCAM, and it produced much better results. GradCAM uses the class-specific gradient information flowing into the final convolutional layer of a CNN to produce a coarse localization map of the important regions in the image, and it is a strict generalization of CAM. We believe this is the reason for the better visualization. It also reassured us that the model's performance comes from image attributes rather than from capturing co-occurrence.
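The GradCAM computation itself is short. The sketch below assumes that the activations of the final convolutional layer and the gradients of one class score with respect to those activations have already been obtained from a framework's autograd; only the combination step is shown. The function name and toy values are hypothetical.

```python
def grad_cam(activations, gradients):
    """Coarse localization map for one class.

    activations: C feature maps of size H x W from the final conv layer
    gradients:   C maps of the same size holding d(class score) / d(activation)
    """
    height, width = len(activations[0]), len(activations[0][0])
    cam = [[0.0] * width for _ in range(height)]
    for act, grad in zip(activations, gradients):
        # Channel importance: the gradient averaged over all spatial positions.
        alpha = sum(v for row in grad for v in row) / (height * width)
        for i in range(height):
            for j in range(width):
                cam[i][j] += alpha * act[i][j]
    # ReLU keeps only the regions that push the class score up.
    return [[max(0.0, v) for v in row] for row in cam]

# Toy example with two channels (made-up values).
activations = [
    [[1.0, 2.0], [3.0, 4.0]],
    [[4.0, 3.0], [2.0, 1.0]],
]
gradients = [
    [[1.0, 1.0], [1.0, 1.0]],      # channel 0 supports the class
    [[-1.0, -1.0], [-1.0, -1.0]],  # channel 1 counts against it
]
heat = grad_cam(activations, gradients)
```

Unlike CAM, this does not require the network to end in global average pooling plus a single linear layer, so it can be applied to a model with dense layers such as the one discussed here.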

Our research also revealed a variety of interesting approaches to the problem of a noisy and incompletely labeled dataset. Here are a few approaches that we explored, though only superficially:

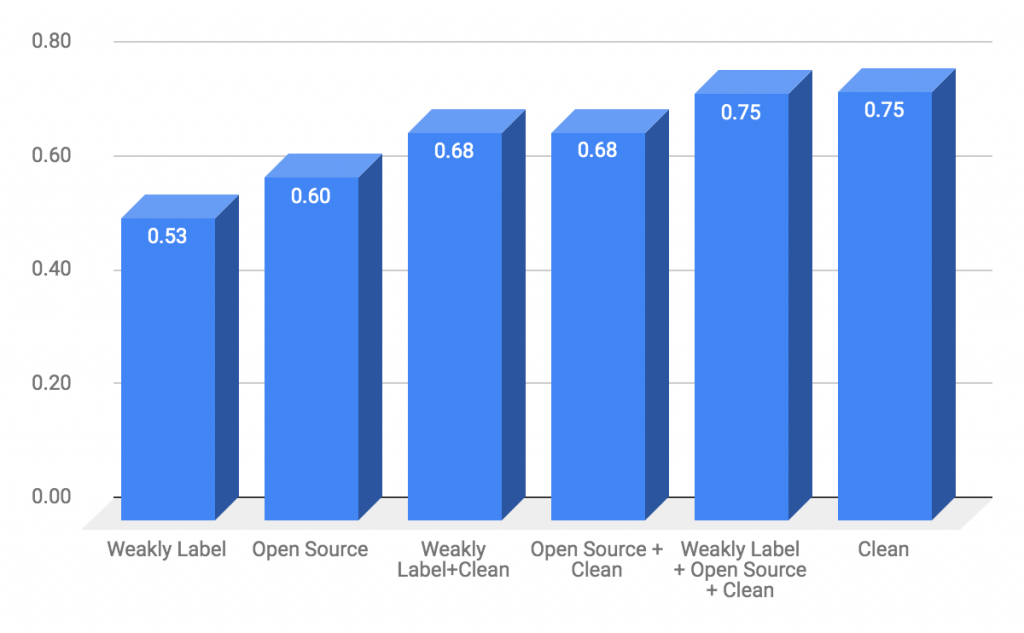

Comparison of model performance across different domain adaptation schemes. It depicts the model's ability to learn from weakly labeled data combined with a minimal amount of clean data.

This project showed us the potential of weakly labeled data for successfully training deep learning models and has paved the way for a lot of future projects. Personally, this project was a great learning experience and helped me learn the end-to-end cycle of training, building and deploying deep learning models. I deployed the best-performing model as a RESTful API service. Check out this previous post to learn more about deploying deep learning models at Zillow. The project also exposed me to the challenges of translating business problems into machine learning problems and gathering relevant data. I was able to witness the learning power of deep neural networks even with noisy data, as well as various ways of interpreting the resulting models.

This blog was part one of the project dealing with real estate images. Keep an eye out for part two where we will discuss how we use deep learning and NLP to improve keyword detection in listing descriptions and improve upon our weak-label generation process.