Building a strong foundation to accelerate StreetEasy’s data science efforts

Data is at the foundation of everything we do at StreetEasy and Zillow. Users of our websites and services can get recommendations through our homepage and tailored emails; they can read blog posts (such as this one) that contain the latest trends and numbers; and they can even access data, such as existing inventory and prices, through our StreetEasy Data Dashboard.

Given the importance of data at StreetEasy, we recently set out to improve the quality and availability of our data. This blog post describes that journey.

There is a huge difference between data and easy-to-use data. The former can lead to bad decisions and sub-par experiences; the latter leads to a superb product and experience that enables the people who use our services to make quick, data-informed decisions. Let’s illustrate how that works.

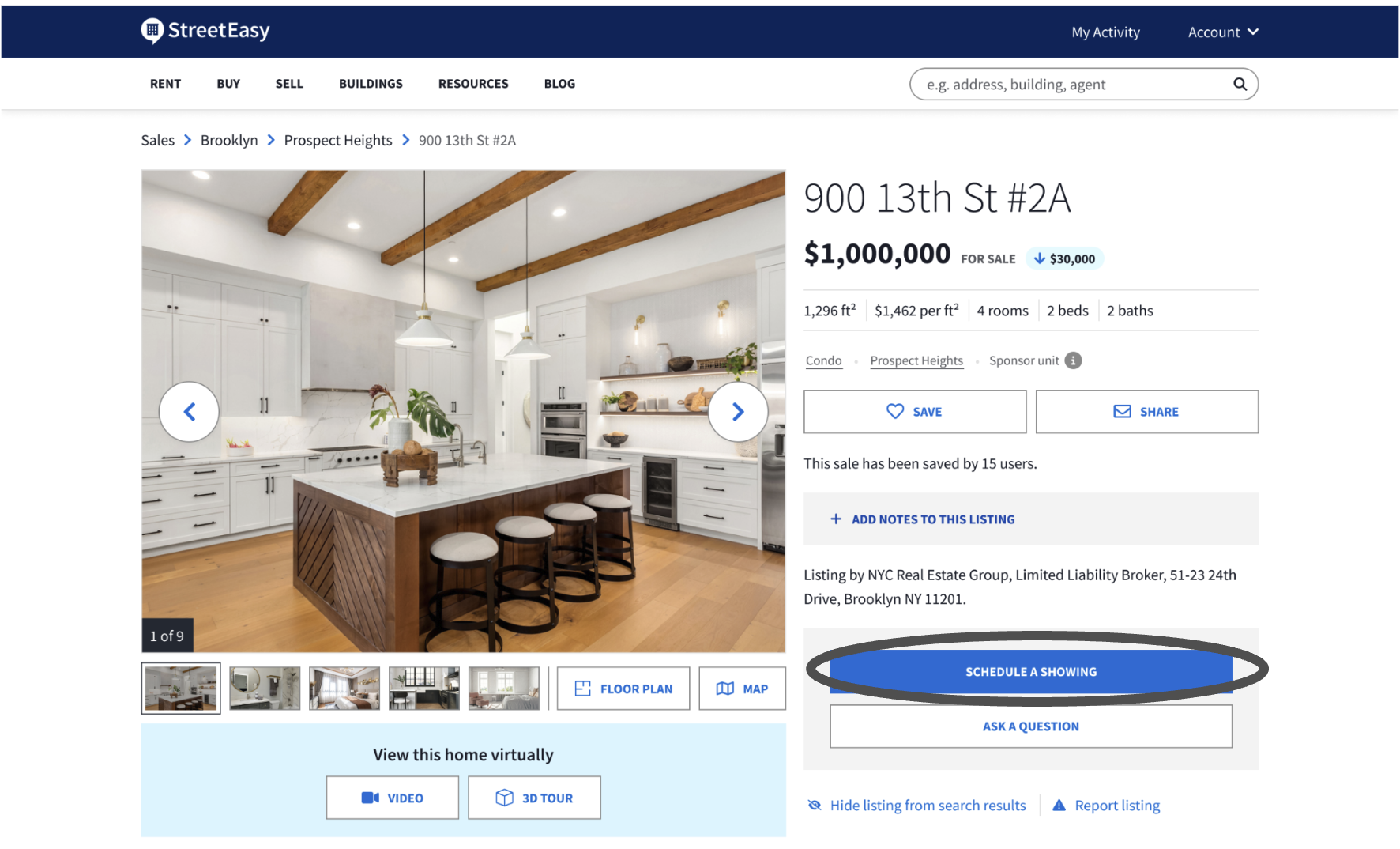

Example of a key user action we are interested in tracking.

As the data team, we want to calculate the submit rate, i.e. the share of sessions that result in at least one submit. A submit is when a user clicks on the “SCHEDULE A SHOWING” button and submits a request (see image). The raw data that we log populates a large table of events (i.e. actions a user takes online) that contains billions of rows and hundreds of columns. But before it can be used to answer even the simplest of questions, the data (shown in the table below) must be transformed.

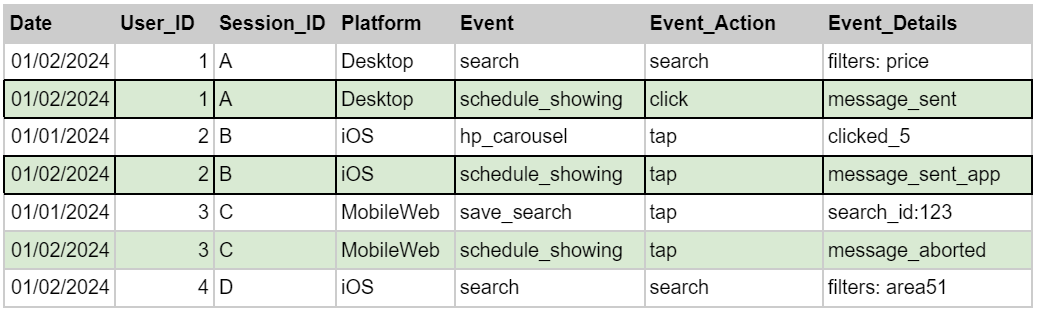

Illustrative example of raw event data. While our actual data looks quite different, this example clearly illustrates some of the practical data challenges our team used to encounter.

For example, to calculate the submit rate, one needs to identify the event that corresponds to the action we are interested in. One can do this by finding the schedule_showing event and filtering for those instances when a message was sent (Event_Details = message_sent or message_sent_app). There is a lot of nuance in the data, such as:

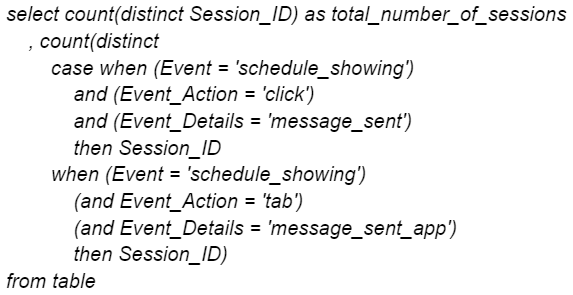

If we were to write a SQL query to do this, it would look something like the following (this example is illustrative and not an actual SQL query):
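As a rough sketch of what such a query involves, the snippet below runs one against a tiny in-memory SQLite table. Only `schedule_showing`, `Event_Details`, `message_sent` and `message_sent_app` come from the example above; the table name `raw_events`, the `session_id` and `event_name` columns and the sample rows are assumptions made for illustration, and a real query would have to handle far more nuance.

```python
import sqlite3

# Hypothetical, heavily simplified stand-in for the raw event table.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE raw_events (session_id TEXT, event_name TEXT, Event_Details TEXT)"
)
conn.executemany(
    "INSERT INTO raw_events VALUES (?, ?, ?)",
    [
        ("s1", "page_view", None),
        ("s1", "schedule_showing", "form_opened"),
        ("s1", "schedule_showing", "message_sent"),
        ("s2", "page_view", None),
        ("s3", "schedule_showing", "message_sent_app"),
        ("s3", "schedule_showing", "message_sent_app"),  # repeat submit, same session
        ("s4", "schedule_showing", "form_opened"),       # opened the form, never sent
    ],
)

# Flag each session that has at least one submit, then average the flags.
SUBMIT_RATE_SQL = """
WITH session_flags AS (
    SELECT
        session_id,
        MAX(CASE
                WHEN event_name = 'schedule_showing'
                 AND Event_Details IN ('message_sent', 'message_sent_app')
                THEN 1 ELSE 0
            END) AS submitted
    FROM raw_events
    GROUP BY session_id
)
SELECT AVG(submitted) FROM session_flags
"""

submit_rate = conn.execute(SUBMIT_RATE_SQL).fetchone()[0]
print(submit_rate)
```

Even in this toy version, every analyst has to remember which event name and which `Event_Details` values count as a submit, and to deduplicate repeated submits within a session.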

This example shows the difference between data and easy-to-use data. While the data is technically available and one can calculate all sorts of metrics, the process is difficult, cumbersome and error prone. This can lead to a variety of issues, such as:

In the remainder of this blog post we will explain how we overcame these issues.

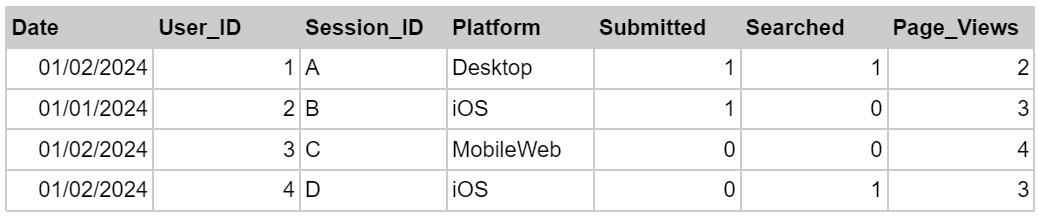

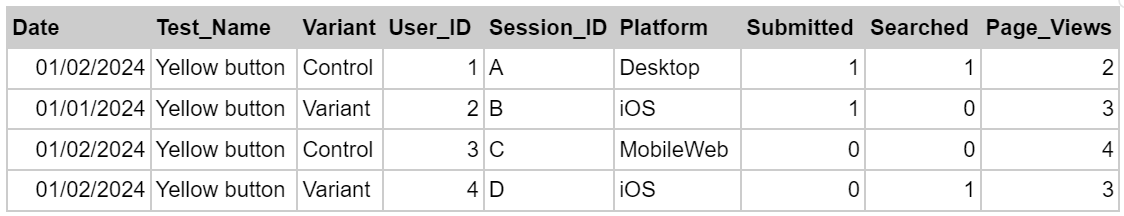

The issues described here have to do with the transformation layer in ETL/ELT. Data needs to be transformed into a format that makes analyses easy, reproducible and reliable. The table below offers an example of what this means. It summarizes the activity that happened in each session and transforms the raw data, making analyses very easy. For example, the column “Submitted” tells you whether or not there was a submit in that session. The average of that column is the submit rate, which can be calculated with two lines of very basic SQL.

Illustrative example of a certified dataset.
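To make the "two lines of very basic SQL" concrete, here is a minimal sketch that runs them against an in-memory SQLite table. The table name `sessions`, the `session_id` column and the sample rows are assumptions for illustration; `submitted` stands in for the "Submitted" column described above.

```python
import sqlite3

# Hypothetical miniature of a certified sessions table: one row per session.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE sessions (session_id TEXT PRIMARY KEY, submitted INTEGER)"
)
conn.executemany(
    "INSERT INTO sessions VALUES (?, ?)",
    [("s1", 1), ("s2", 0), ("s3", 0), ("s4", 1), ("s5", 0)],
)

# The whole analysis: average the 0/1 flag.
SUBMIT_RATE_SQL = """
SELECT AVG(submitted) AS submit_rate
FROM sessions
"""

submit_rate = conn.execute(SUBMIT_RATE_SQL).fetchone()[0]
print(submit_rate)
```

Because the flag is already computed once per session, nobody running this query needs to know which raw events count as a submit.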

There are countless advantages to transforming the data in such a way. A few highlights are:

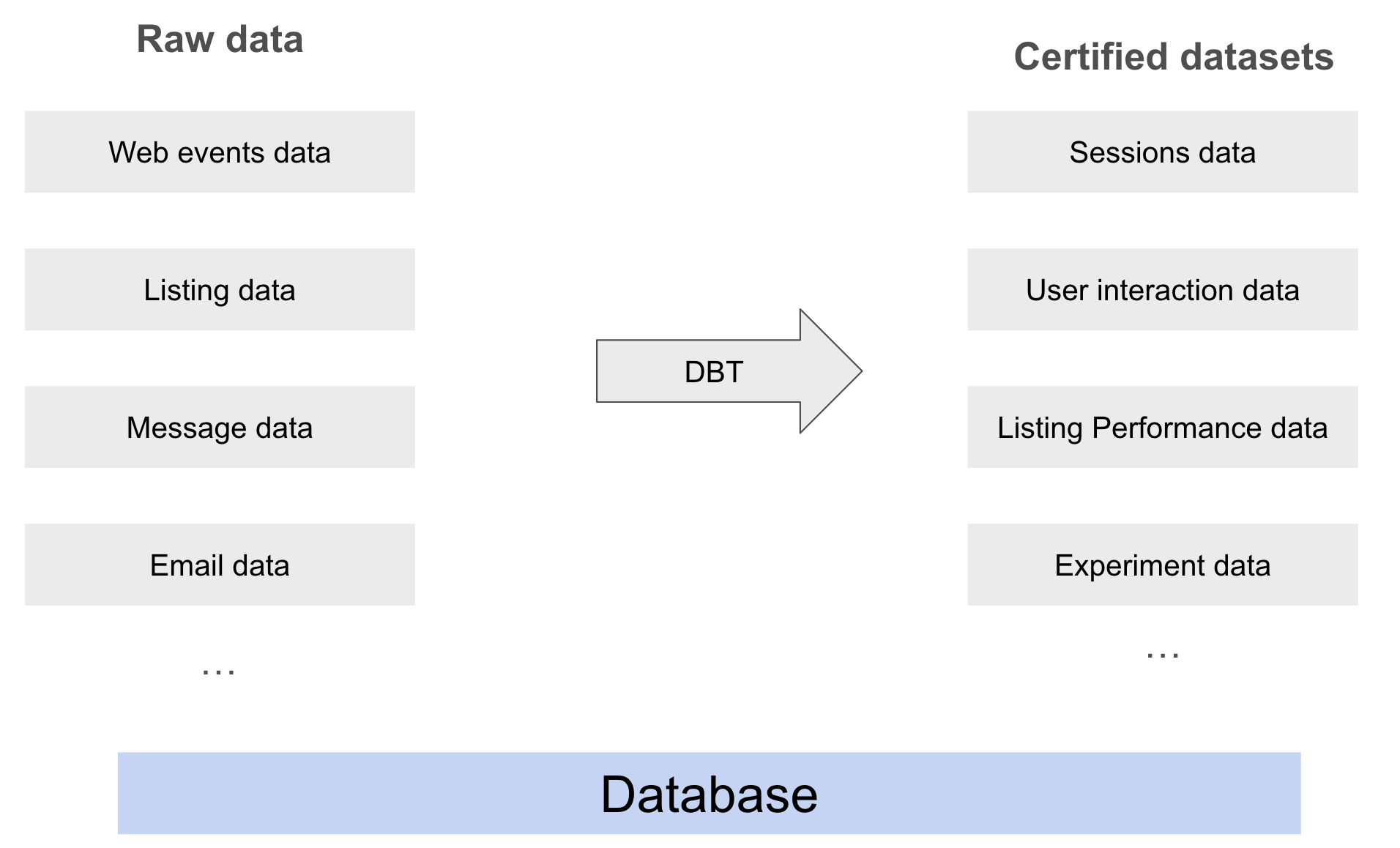

The main tool we used to achieve this is dbt, a data transformation tool that enables data analysts and engineers to transform data in a cloud analytics warehouse. With dbt, we can orchestrate these transformations at scale and have the entire team contribute to this project. In our case, hundreds of tables (from different applications) are processed into tens of certified datasets every few hours. These datasets share a few important properties:

The schematic below explains the process of transforming this data at scale.

Schematic of how our raw data gets transformed into certified datasets.
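In dbt, a model is essentially a SELECT statement that the tool materializes as a table or view in the warehouse. The sketch below imitates one such transformation with SQLite so that it runs without dbt or a warehouse. It is not our actual model: the table and column names (`raw_events`, `sessions`, `session_id`, `platform`, `event_name`, `n_events`) and the sample rows are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE raw_events (
    session_id TEXT, platform TEXT, event_name TEXT, Event_Details TEXT
);
INSERT INTO raw_events VALUES
    ('s1', 'web', 'page_view', NULL),
    ('s1', 'web', 'schedule_showing', 'message_sent'),
    ('s2', 'ios', 'page_view', NULL),
    ('s2', 'ios', 'page_view', NULL),
    ('s3', 'ios', 'schedule_showing', 'message_sent_app');
""")

# What a model file would hold: a single SELECT that defines the dataset.
SESSIONS_MODEL_SQL = """
SELECT
    session_id,
    MIN(platform) AS platform,
    COUNT(*)      AS n_events,
    MAX(CASE
            WHEN event_name = 'schedule_showing'
             AND Event_Details IN ('message_sent', 'message_sent_app')
            THEN 1 ELSE 0
        END)      AS submitted
FROM raw_events
GROUP BY session_id
"""

# dbt would materialize the model for us; here we do it by hand.
conn.execute("CREATE TABLE sessions AS " + SESSIONS_MODEL_SQL)

# A uniqueness check in the spirit of dbt's built-in `unique` test.
duplicate_ids = conn.execute(
    "SELECT session_id FROM sessions GROUP BY session_id HAVING COUNT(*) > 1"
).fetchall()

sessions = conn.execute(
    "SELECT session_id, platform, n_events, submitted "
    "FROM sessions ORDER BY session_id"
).fetchall()
print(sessions, duplicate_ids)
```

The submit logic now lives in one reviewed place, and checks such as the uniqueness query can run every time the table is rebuilt.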

Use cases for certified datasets

The creation of certified datasets has completely changed how we run data analysis and has allowed us to be much faster as a team. Below we discuss a few examples.

Prior to creating our certified datasets, running even a simple A/B test was a challenging proposition. Each test needed an ad-hoc, complex SQL query that pulled specific metrics from our raw data. So we created a slightly different version of the sessions table that included information about which experiment and variant a session belonged to (see below). This table makes it trivial to analyze and quantify any change in the session metrics for a specific test: all one has to do is a GROUP BY in SQL.

Illustrative example of a certified dataset for our experiment data.

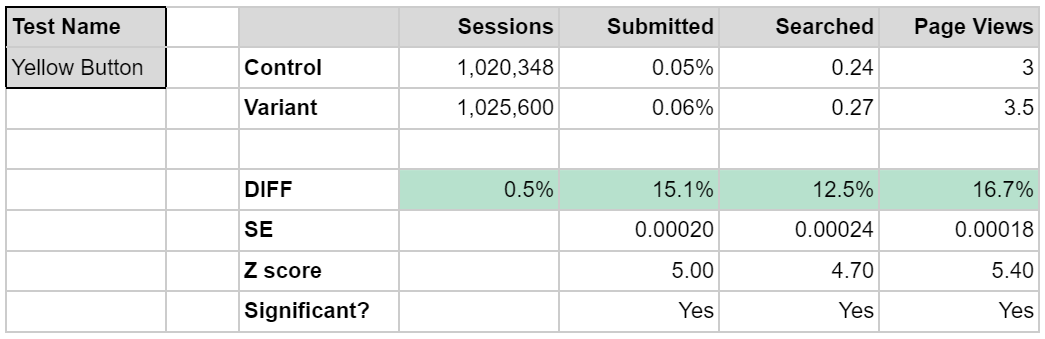
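A minimal sketch of that group-by, again on an in-memory SQLite table. The table name `session_experiments`, its columns, the experiment names and the sample rows are all hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE session_experiments "
    "(session_id TEXT, experiment TEXT, variant TEXT, submitted INTEGER)"
)
conn.executemany(
    "INSERT INTO session_experiments VALUES (?, ?, ?, ?)",
    [
        ("s1", "new_cta", "control", 0),
        ("s2", "new_cta", "control", 1),
        ("s3", "new_cta", "control", 0),
        ("s4", "new_cta", "control", 0),
        ("s5", "new_cta", "treatment", 1),
        ("s6", "new_cta", "treatment", 0),
        ("s7", "new_cta", "treatment", 1),
        ("s8", "new_cta", "treatment", 0),
        ("s9", "other_test", "control", 1),  # different experiment, filtered out
    ],
)

# One row per variant with its session count and submit rate.
VARIANT_SQL = """
SELECT variant, COUNT(*) AS sessions, AVG(submitted) AS submit_rate
FROM session_experiments
WHERE experiment = ?
GROUP BY variant
ORDER BY variant
"""

results = conn.execute(VARIANT_SQL, ("new_cta",)).fetchall()
for variant, n_sessions, submit_rate in results:
    print(variant, n_sessions, submit_rate)
```

Swapping the experiment name in the parameter is the only change needed to analyze another test.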

One of the benefits of this certified dataset is the ability to automate A/B test analysis. Indeed, we have created a dashboard through which a user can input the test name and quickly generate a view of how different standard metrics are performing. A statistical analysis can also be done on the fly, and users can quickly see whether the lifts in their metrics are statistically significant at the 95% confidence level.

Illustrative example of a dashboard that tracks the results of an experiment. In this experiment, the user can quickly see that the variant leads to statistically significant lifts in some KPIs.
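This post does not specify which statistical test the dashboard runs. As one common choice for comparing rates such as the submit rate between two variants, the sketch below implements a two-proportion z-test; the function name and the counts are made up for illustration.

```python
import math


def two_proportion_z_test(submits_a, sessions_a, submits_b, sessions_b):
    """Return (z, two-sided p-value) for the difference in rates, B minus A."""
    rate_a = submits_a / sessions_a
    rate_b = submits_b / sessions_b
    # Pooled rate under the null hypothesis that both variants share one rate.
    pooled = (submits_a + submits_b) / (sessions_a + sessions_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / sessions_a + 1 / sessions_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided tail probability of the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: control (A) versus variant (B).
z, p_value = two_proportion_z_test(200, 10_000, 260, 10_000)
significant = p_value < 0.05  # 95% confidence level
print(round(z, 2), significant)
```

The normal approximation behind this test is reasonable for large session counts; small samples or many simultaneous metrics would call for a different approach.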

Access to accurate, reliable and documented data also makes ML model development much faster and easier. This became evident in a recent project that required a model to predict whether a lead (when we connect an agent to a potential buyer) would result in a real estate transaction with that agent. The goal of this project was to prove the feasibility of this endeavor and make the case for investing more in this area. Luckily, the team had already worked to provide access to a certified dataset that was clear, well-structured and documented. So we could plug that dataset directly into our model, and in less than a day we had a good prototype that proved our ability to make these predictions. This level of fast turnaround was unthinkable in the past, and has led to that project being prioritized on our roadmap.

The ability to conduct self-serve analytics is one of the holy grails in our field. Many vendors claim that they can provide everyone at a company access to all of its data, without writing a single line of SQL or having to know any data nuance. Reality is more complex. StreetEasy’s journey to self-serve analytics has evolved over time. We quickly abandoned the hope that a single tool would allow everybody to access all the data and to run any analysis they want. Instead, we created a pragmatic, multi-pronged approach that gives our stakeholders a variety of data access options.

Our approach is based on a key insight: about 80% of the data queries are frequently asked (e.g. how many users do we have for each platform?). Some of these questions are trivial (e.g. the user breakdown by platform), while others are trickier.

But because they are frequently asked, they are a perfect use case for automation, i.e. creating a dashboard that everybody at StreetEasy can access. The remaining 20% of these questions will be ad-hoc and exploratory. This is a natural and healthy outcome of continuously pushing the boundaries of our business and looking for new opportunities, which leads to always asking new questions and running new analyses.

Based on what we described above, our approach is made up of three pillars; the first pillar addresses the frequently asked questions, while the last two are for ad-hoc and exploratory data analysis.

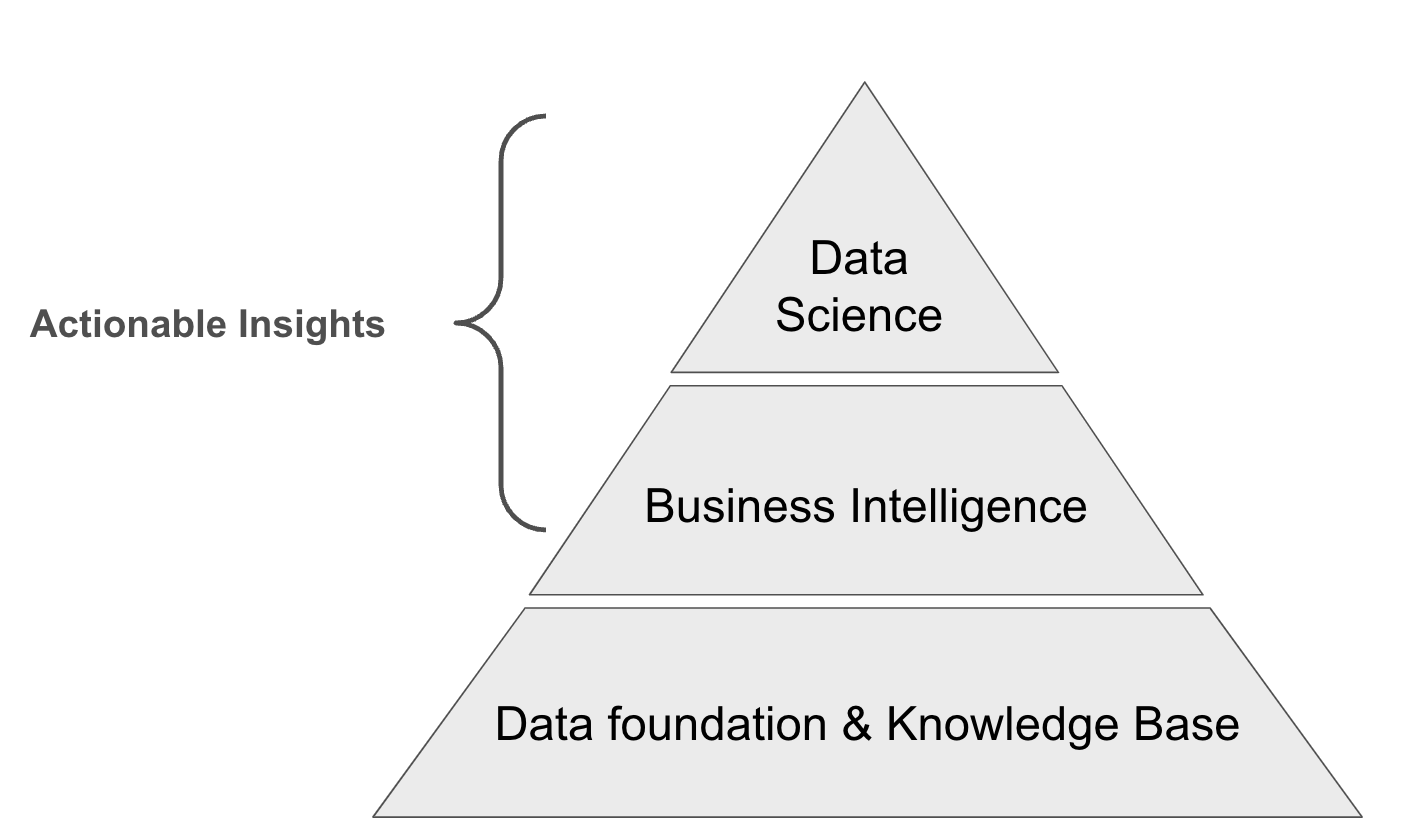

When one thinks about leveraging the power of data to create a better product and experience for their users, it can be helpful to think about the process a bit like building a pyramid: We all want to leverage Business Intelligence and Data Science to provide actionable insights for the business (the top parts of the pyramid). But before anything else can be done, you need a strong (data) foundation. The work described in this blog post allowed us to build this foundation; we’re now enjoying the fruits of our labor (the top of the pyramid).

This project was a real team effort and therefore there are many people to thank. A special thanks to Lisa Karaseva, Deans Charbal and Boian Filev, who spearheaded using dbt to create our first certified datasets. Similarly, thanks to Nancy Nan, Claire Tu, Illia Maliarenko and William Wallace for creating and reviewing our certified datasets.

StreetEasy is an assumed name of Zillow, Inc. which has a real estate brokerage license in all 50 states and D.C. See real estate licenses.