Image Processing at Zillow: Out with the Old and In with the AWS

The old saying goes that real estate is about Location, Location, Location. We’d like to submit a new saying: online real estate shopping is about Photos, Photos, Photos. Nothing delights Zillow’s users more than big, beautiful, and bountiful photos on real estate listings. And nothing delights agents, sellers, and property managers more than the page views, leads, and traffic they get when their listings entice shoppers with great pictures!

As Zillow’s popularity increased and agents started providing higher resolution photos on their listings, we found that our legacy imaging system could not keep up with the demand. We realized we needed to scale in a big way. Amazon Web Services (AWS) was the way to get us there.

This blog post walks you through our journey from the legacy imaging system in our local data center to the AWS-powered imaging system that serves us today and that we plan to build on. We’ll show you:

We also discuss a few options we’re considering for making the new system even better, so please feel free to comment with your opinions and experiences!

Zillow currently processes over 3 million new images each day. This includes listing images; profile images for users, agents, and other professionals; home project images through Zillow Digs; and other assorted image types. Some are manually uploaded and some arrive in bulk feeds that we download. The rate of new images from these bulk feeds can be unpredictable: they typically arrive in batches throughout the day rather than at an even rate.

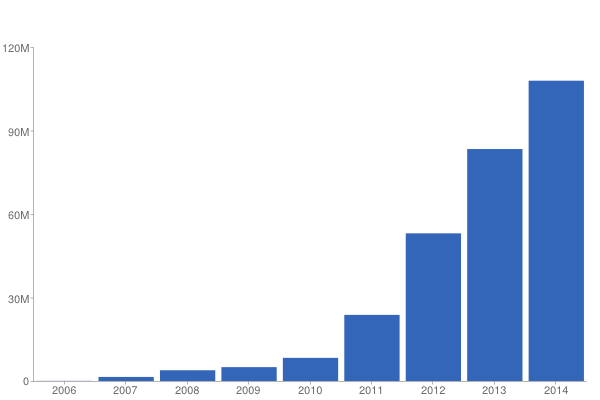

We have a lot of turnover in our images, so we don’t end up persisting over 1 billion new images (3 million × 365) each year. However, the chart below shows how many more images we have had to store as the years have passed. You can see why we needed to scale up our system!

Our legacy system lived in our own hosted data centers (with the exception of a CDN for image serving). Images were downloaded out of one queue; stored on an NAS device in the “pyramidal TIFF” format; and served to the CDN out of a local Squid service.

Its primary problems were:

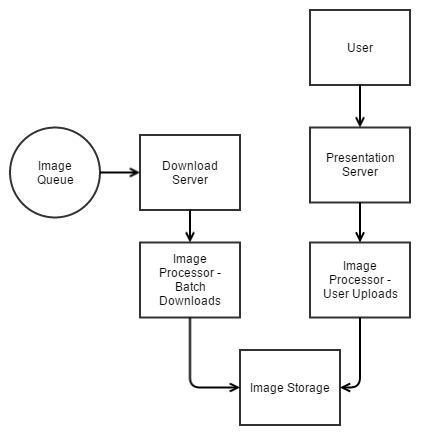

Here’s a diagram of our downloading and processing pipeline:

As you can see, manually uploaded images came in through one path (on the right), while images from listing feeds entered another path through a queue (on the left). Because images from hundreds of feed sources were downloaded out of a single queue, we could not take advantage of the fact that some image sources support much faster downloading, with more concurrency, than others. This caused two problems. First, head-of-line blocking: a slow or problematic image at the front of the queue held up every image behind it. Second, no single request rate fit every source: a rate high enough for the fast sources risked overwhelming the slower ones, while a rate safe for the slow sources left the fast ones underused.
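To make the head-of-line effect concrete, here is a toy simulation. It is a simplified model, not our production code: the workload is made up, the single-queue case assumes one worker draining one shared FIFO queue, and the comparison case assumes each feed has its own queue drained by its own worker.

```python
from collections import defaultdict

# Hypothetical workload: (feed_id, seconds_to_download) in arrival order.
# One slow image from "feed_a" sits at the front of the queue.
JOBS = [("feed_a", 30.0), ("feed_b", 0.5), ("feed_b", 0.5), ("feed_c", 0.5)]


def finish_times_single_queue(jobs):
    """One shared FIFO queue, one worker: every job waits for all jobs ahead of it."""
    clock, finished = 0.0, []
    for _feed, seconds in jobs:
        clock += seconds
        finished.append(clock)
    return finished


def finish_times_per_feed(jobs):
    """One FIFO queue per feed, drained independently: a slow job delays only its own feed."""
    clock_by_feed = defaultdict(float)
    finished = []
    for feed, seconds in jobs:
        clock_by_feed[feed] += seconds
        finished.append(clock_by_feed[feed])
    return finished


print(finish_times_single_queue(JOBS))  # [30.0, 30.5, 31.0, 31.5]
print(finish_times_per_feed(JOBS))      # [30.0, 0.5, 1.0, 0.5]
```

In the shared queue, three half-second downloads finish only after the 30-second one; with per-feed queues, only `feed_a` pays for its own slow image.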

Images were stored on a large NAS device in the “pyramidal TIFF” format, which is essentially a multi-layer image consisting of progressively smaller JPEGs. The idea is that you can produce any image size efficiently by opening the file, seeking to the layer holding the next-largest size, and resampling that layer down to the requested size. This avoids the expense of scaling the original image, which may be much larger. We did this scaling on the fly whenever an image-serving request came in for a particular size. Scaling on the fly can be expensive, so we relied heavily on caching via our CDN and local Squid service. If even part of the cache was flushed, the resulting flood of cache misses would overwhelm the dynamic scaling layer and the NAS device for hours during recovery.
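The layer-selection step can be sketched as follows. This assumes each layer halves the dimensions of the one before it and that the pyramid stops at a minimum side length; both the halving factor and `min_side` are illustrative assumptions, not details of the TIFF tool we used.

```python
def pyramid_levels(width, height, min_side=64):
    """List (width, height) for each layer, largest first, halving each time
    until the shorter side would drop below min_side."""
    levels = [(width, height)]
    while min(width, height) // 2 >= min_side:
        width, height = width // 2, height // 2
        levels.append((width, height))
    return levels


def pick_source_level(levels, target_width):
    """Return the smallest layer that is still at least target_width wide,
    so the final resample only ever scales down. Falls back to the largest
    layer if the request is bigger than the original."""
    candidates = [lvl for lvl in levels if lvl[0] >= target_width]
    return min(candidates, key=lambda lvl: lvl[0]) if candidates else levels[0]


levels = pyramid_levels(4000, 3000)
# [(4000, 3000), (2000, 1500), (1000, 750), (500, 375), (250, 187), (125, 93)]
print(pick_source_level(levels, 800))  # (1000, 750): resample 1000px down to 800px
```

Reading the 1000-pixel layer instead of the 4000-pixel original is where the savings come from, but the resample still happens on every uncached request.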

The tool we used to convert images to the pyramidal TIFF format was aging and not easily extensible. We could not easily add image quality improvements such as removing solid color borders or supporting images in CMYK and other color spaces.

Putting images “in the cloud” may sound like a simple endeavor, but we actually had to build a substantial amount of infrastructure to end up with a system that solved the problems of our legacy imaging system.

Here’s a summary of the problems we set out to solve and how we solved them:

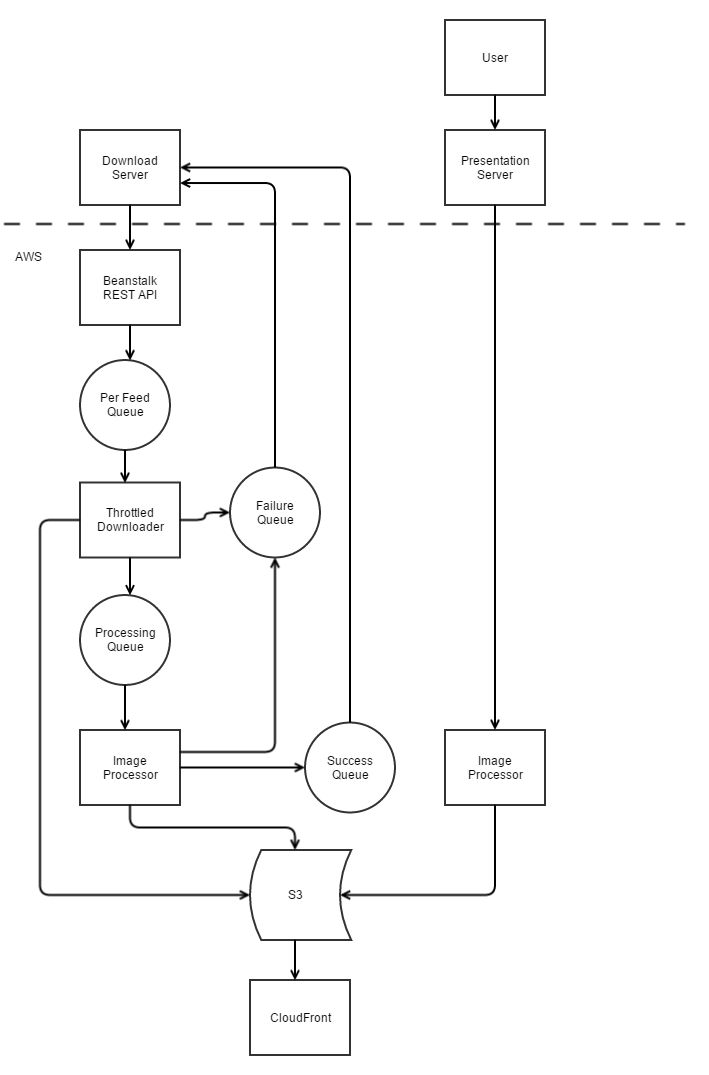

Here’s a diagram of the system that we built. The individual services are described below.

Download Server (DLS): This is a service in Zillow’s data center that manages image download requests coming from listing feeds. This includes keeping our database of images (which also lives in our data center) up to date with the results of the cloud image processing.

Beanstalk REST API: This service is just a front end in the cloud for the DLS. It takes an image download request and puts it in one of the per-feed SQS queues. Using one SQS queue per feed solves the head-of-line blocking problem.
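Routing a request to its feed’s queue might look roughly like this. It is a sketch, not the actual service: the queue naming scheme and message fields are hypothetical, and the SQS client is passed in (in practice a `boto3.client("sqs")`) so the function can be exercised without AWS credentials. `get_queue_url` and `send_message` are standard boto3 SQS client methods.

```python
import json

# Hypothetical naming convention: one queue per feed, e.g. "image-download-feed-42".
QUEUE_PREFIX = "image-download-"


def queue_name_for_feed(feed_id):
    """Map a feed ID to its dedicated queue name (hypothetical scheme)."""
    return f"{QUEUE_PREFIX}{feed_id}"


def enqueue_download(sqs_client, feed_id, image_url, listing_id):
    """Put one download request on the feed's own SQS queue.

    sqs_client is expected to behave like boto3.client("sqs").
    """
    queue_url = sqs_client.get_queue_url(QueueName=queue_name_for_feed(feed_id))["QueueUrl"]
    body = json.dumps({"feed_id": feed_id, "listing_id": listing_id, "url": image_url})
    return sqs_client.send_message(QueueUrl=queue_url, MessageBody=body)
```

A real deployment would probably cache queue URLs per feed rather than look one up for every message.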

Throttled Downloader: The throttled downloader does the actual downloading of the image. It controls the rate and concurrency at which we download images for each feed source, allowing us to take advantage of providers that support fast downloading and to not deluge providers that don’t. If the image download succeeds, we write the original image into S3 to be used in the image processing step. If the image download fails, a message is queued into the failure queue to be processed by the DLS.
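One common way to enforce both a per-feed rate and a per-feed concurrency limit is a token bucket plus a semaphore. The sketch below assumes that design; the post does not say how our downloader implements throttling, and the class name and limits are hypothetical. The clock is injectable so the rate logic can be tested deterministically.

```python
import threading
import time


class FeedThrottle:
    """Per-feed throttle: a token bucket caps the request rate and a
    semaphore caps how many downloads from the feed run at once."""

    def __init__(self, rate_per_sec, burst, max_concurrency, clock=time.monotonic):
        self.rate = rate_per_sec
        self.capacity = burst
        self.tokens = float(burst)
        self.clock = clock
        self.last = clock()
        self.lock = threading.Lock()
        self.slots = threading.Semaphore(max_concurrency)

    def try_acquire_token(self):
        """Refill tokens for the elapsed time, then take one if available."""
        with self.lock:
            now = self.clock()
            self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
            self.last = now
            if self.tokens >= 1:
                self.tokens -= 1
                return True
            return False

    def run(self, download):
        """Wait for a rate token, then hold a concurrency slot while download() runs."""
        while not self.try_acquire_token():
            time.sleep(1.0 / self.rate)
        with self.slots:
            return download()


# Hypothetical limits: a fast provider gets far more throughput than a slow one.
THROTTLES = {
    "fast_feed": FeedThrottle(rate_per_sec=50, burst=50, max_concurrency=20),
    "slow_feed": FeedThrottle(rate_per_sec=2, burst=2, max_concurrency=1),
}
```

Because each feed gets its own `FeedThrottle`, tightening the limits for a fragile provider has no effect on how fast we pull from a robust one.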

Image Processor: This is an Elastic Beanstalk worker role deployment running the Python Imaging Library with some custom code. We take the original images stored in S3 and run them through various image quality methods (such as removing solid color borders). We also generate a standard set of sizes for each image that we know we need on the site (for example, a large one for our full-page photo gallery, a smaller one for our emails, etc.) and store those in S3. If the image conversion succeeds, a message is put into the success SQS queue; otherwise, it is put into the failure queue.
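The two processing steps mentioned above can be sketched in plain Python. The size names and dimensions are hypothetical, not Zillow’s actual renditions, and the border check compares pixels against the top-left corner color exactly. With Pillow, the same crop is commonly done by taking `ImageChops.difference` against a solid background image, calling `getbbox()` on the result, and passing that box to `Image.crop`.

```python
# Hypothetical standard renditions: name -> (max_width, max_height).
STANDARD_SIZES = {
    "gallery_full": (1536, 1152),
    "email": (400, 300),
    "thumb": (192, 144),
}


def fit_within(width, height, max_w, max_h):
    """Scale (width, height) to fit inside max_w x max_h, keeping the aspect
    ratio. Never upscales."""
    scale = min(max_w / width, max_h / height, 1.0)
    return max(1, round(width * scale)), max(1, round(height * scale))


def rendition_sizes(width, height, sizes=STANDARD_SIZES):
    """Compute the output dimensions for every standard rendition."""
    return {name: fit_within(width, height, mw, mh) for name, (mw, mh) in sizes.items()}


def solid_border_bbox(pixels):
    """Given rows of pixel values, return (left, top, right, bottom) of the
    region that differs from the top-left corner color, or None if the whole
    image is that one color. right/bottom are exclusive (Pillow's box convention)."""
    border = pixels[0][0]
    rows = [y for y, row in enumerate(pixels) if any(p != border for p in row)]
    if not rows:
        return None
    cols = [x for x in range(len(pixels[0])) if any(row[x] != border for row in pixels)]
    return cols[0], rows[0], cols[-1] + 1, rows[-1] + 1


print(rendition_sizes(4000, 3000))
# {'gallery_full': (1536, 1152), 'email': (400, 300), 'thumb': (192, 144)}
```

A production version would likely need a color tolerance, since JPEG compression rarely leaves a border perfectly uniform.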

CDN: Images are served out of S3 and cached in the CloudFront CDN. We serve up to 15,000 images per second and have never had performance issues with this model.

In the process of moving over to a new imaging system and AWS, we learned some important lessons.

Measure! The initial versions of our apps did not expose enough metrics. As a result, we could not figure out which component of the pipeline was contributing to image processing delays. After the system was already live, we had to invest a lot of time adding CloudWatch metrics to track latencies. Once we did, we had a clearer picture of the causes and could take action to eliminate them. In some cases, we could use metric thresholds to drive autoscaling, so no human intervention was required.
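Per-stage latency metrics of the kind described above could be published roughly like this. The namespace, metric name, and stage names are hypothetical; `put_metric_data` with `Namespace` and `MetricData` is the standard boto3 CloudWatch call, and the client is passed in (in practice a `boto3.client("cloudwatch")`) so the function runs without AWS.

```python
import time

NAMESPACE = "ImagePipeline"  # hypothetical custom namespace


def record_stage_latency(cloudwatch, stage, started_at, finished_at=None):
    """Publish how long one pipeline stage took, in milliseconds.

    cloudwatch is expected to behave like boto3.client("cloudwatch");
    stage names such as "download" or "resize" are hypothetical.
    Timestamps are seconds, e.g. from time.time().
    """
    finished_at = time.time() if finished_at is None else finished_at
    latency_ms = (finished_at - started_at) * 1000.0
    cloudwatch.put_metric_data(
        Namespace=NAMESPACE,
        MetricData=[{
            "MetricName": "StageLatency",
            "Dimensions": [{"Name": "Stage", "Value": stage}],
            "Value": latency_ms,
            "Unit": "Milliseconds",
        }],
    )
    return latency_ms
```

Tagging every data point with a `Stage` dimension lets you graph all stages side by side and see which one is adding the delay.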

Use CloudWatch Alerts. We found cases where Beanstalk containers did not behave as expected. For example, in a worker role, sometimes the system would scale down, but leave a bad instance (one that failed early in the initialization phase) in service. The bad instance would never pick up any work. And since we used CPU thresholds to add/remove machines, the autoscaling group would never do its job because the CPU of the bad instance was always at zero. We could detect this by adding alerts on queue levels. We also added alerts to other parts of the pipeline which allowed us to closely monitor the SLA of the various components.
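A queue-level alarm like the ones described above might be created as follows. This is a sketch with placeholder values: the threshold, periods, and SNS topic are assumptions to tune per queue. It uses the standard `AWS/SQS` metric `ApproximateNumberOfMessagesVisible`, which keeps climbing when workers stop consuming, even if the fleet’s CPU looks idle. The client is passed in (in practice a `boto3.client("cloudwatch")`).

```python
def create_queue_backlog_alarm(cloudwatch, queue_name, threshold, alarm_topic_arn):
    """Alarm when a queue's visible-message count stays above threshold.

    cloudwatch is expected to behave like boto3.client("cloudwatch");
    alarm_topic_arn is a placeholder SNS topic to notify.
    """
    cloudwatch.put_metric_alarm(
        AlarmName=f"{queue_name}-backlog",
        Namespace="AWS/SQS",
        MetricName="ApproximateNumberOfMessagesVisible",
        Dimensions=[{"Name": "QueueName", "Value": queue_name}],
        Statistic="Maximum",
        Period=300,            # five-minute periods
        EvaluationPeriods=3,   # must breach for three consecutive periods
        Threshold=threshold,
        ComparisonOperator="GreaterThanThreshold",
        AlarmActions=[alarm_topic_arn],
    )
```

With these placeholder values, the alarm fires once the backlog has exceeded the threshold for three consecutive five-minute periods, which would catch a stuck instance that a CPU-based scaling policy never notices.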

We are continuously tuning the new system for best performance, lowest cost, and most functionality. Here are a few areas we want to tackle in the future:

Sometimes an old system is worth maintaining, but other times you’re better off throwing it away and starting from scratch. Given the importance of images to the Zillow user experience, we wanted a system that could grow to match our needs. By moving to AWS (S3, CloudFront, Elastic Beanstalk, and SQS) we no longer have to worry about forklift upgrades, cache flushes, or capacity. The move was not without its challenges (in large part because we had very little prior AWS experience), but we are happy with the new system and eager to continue improving it.