Leveraging Knowledge Graphs in Real Estate Search

In the age of the internet, there is no dearth of information on any topic we want. But organizing information in a structured manner and making it consumable is still a challenge. Knowledge Graphs (KG) are one such tool for storing information in a more structured manner, capturing the relationships across data points, and making it easy to consume — both for humans and machines.

We experience the benefits of a KG during our internet searches, when search engines summarize structured information gathered from a variety of sources. For example, a keyword search for the phrase “New York” returns a variety of results related to land area, weather, demographics, and news reports, all of which are linked and structured on the same page. The results are similar even when you search for “NYC”. This is a nice example of how a KG can be used to visually represent the relationship between a query and indexed data from different sources, making the data easily available for a variety of tasks and consistent for related searches.

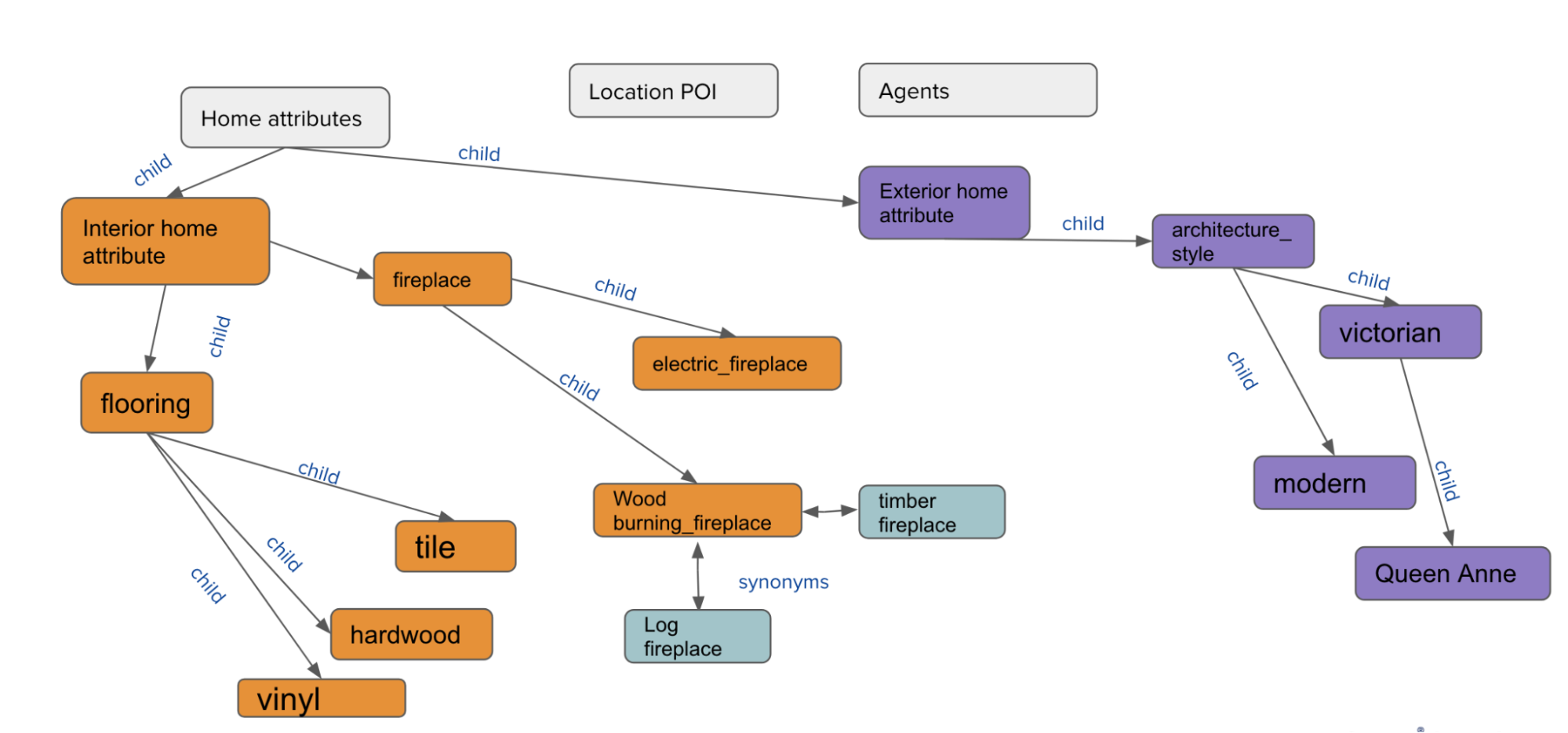

At Zillow, in addition to an abundance of user engagement data, we work with large amounts of home-related data in the form of listing images, listing descriptions, home attributes, neighborhood information, points of interest (POI) datasets, etc. We also work with several in-domain knowledge banks, such as curated blogs, real estate attribute definitions, and annotation guides that are relevant to the business. In order to create seamless experiences for our users and help them find their next home, it’s critical that the business consume and structure this data correctly. Figure 1 is an illustrative knowledge graph representing the relationships among a small subset of possible home attributes.

In summary, a knowledge graph can help Zillow:

Figure 1. A small sample of a real estate KG consisting of concepts for different home features and connected to each other through Parent / Child (i.e., Hypernym / Hyponym) and Synonym relationships

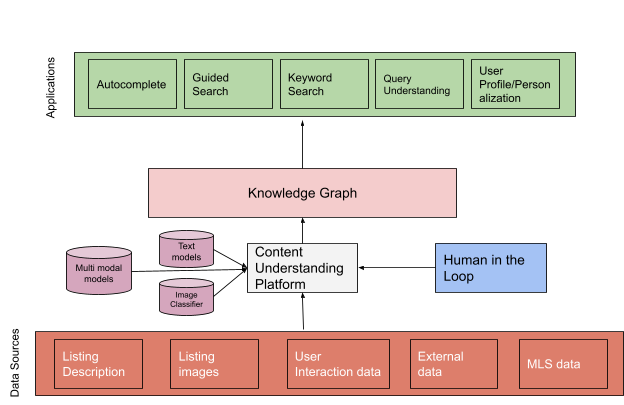

The diagram in Figure 2 shows the functional view of a KG enriched by data from a variety of sources and supporting various use cases and product experiences. The Content Understanding Platform is Zillow’s internal platform, built to extract information from a variety of structured and unstructured data sources and store it in a KG with the help of Human in the Loop (HITL) validation (i.e., a human validating and correcting model predictions). The platform is crucial in aggregating and normalizing information and acts as a bridge for KG creation and updates. It hosts a variety of image and text models and can make near real-time predictions for supported use cases. The data present in the KG powers a variety of applications, as shown below. We will now briefly touch upon how the KG helps to power these applications.

Figure 2. Applications of a Knowledge Graph (KG), together with data sources and models informing the KG.

As shown below, we solve several important problems with the help of Knowledge Graphs:

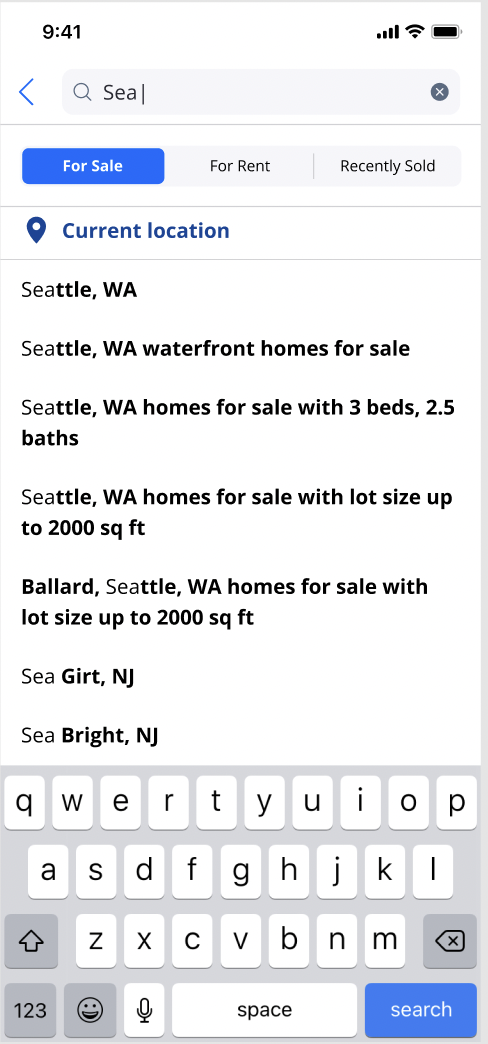

Search Query Autocomplete: The KG provides a variety of concepts that can be presented to users in order to provide them with the right options as they search for homes. The KG offers suggestions related to various home concepts, such as amenities, specific architectures, or locations:

Search Query Autocomplete

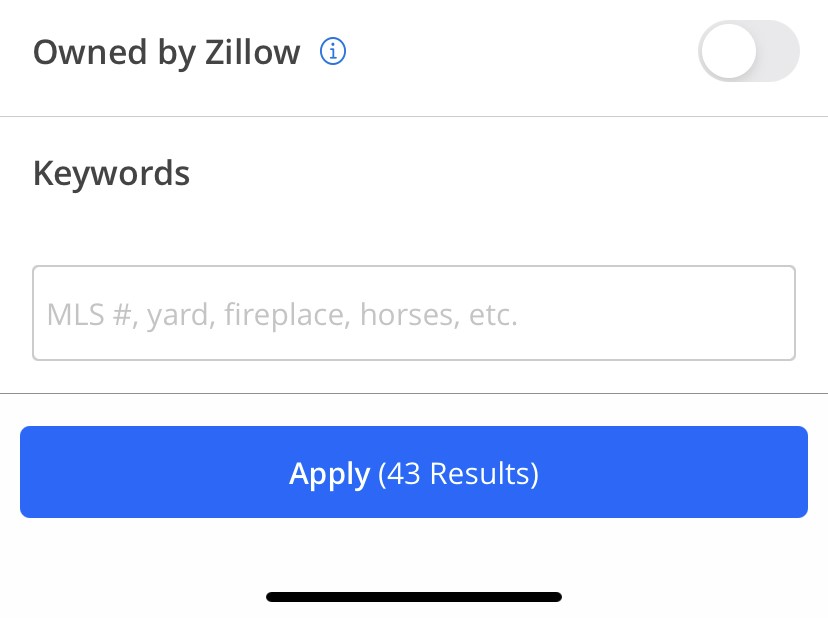
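As a rough illustration of how KG concepts could back an autocomplete box, the sketch below does prefix matching over a sorted list of concept labels. The labels, the `suggest` function, and the `limit` parameter are all hypothetical; a production system would likely also rank suggestions by popularity or user context.

```python
from bisect import bisect_left

# Hypothetical concept labels; in practice these would come from KG nodes.
CONCEPTS = sorted([
    "pool", "pool house", "patio", "mid-century modern",
    "mountain view", "mudroom",
])


def suggest(prefix: str, limit: int = 5) -> list[str]:
    """Return up to `limit` concept labels that start with `prefix`."""
    prefix = prefix.strip().lower()
    if not prefix:
        return []
    # Binary search finds the first label that could match the prefix.
    start = bisect_left(CONCEPTS, prefix)
    matches = []
    for label in CONCEPTS[start:]:
        if not label.startswith(prefix):
            break  # labels are sorted, so no later label can match
        matches.append(label)
        if len(matches) == limit:
            break
    return matches


print(suggest("po"))  # ['pool', 'pool house']
```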

Keyword search: The KG plays a critical role in understanding user intent during keyword search, normalizing the query keywords based upon canonical concepts that can be used to generate consistent retrieval of relevant listings, which are indexed with the same canonical concepts. On the indexing side, the KG enables standard indexing of listings and normalizes the listings in terms of the canonical concepts. A keyword search that leverages a knowledge graph in this way is referred to as a concept search:

Keyword Search

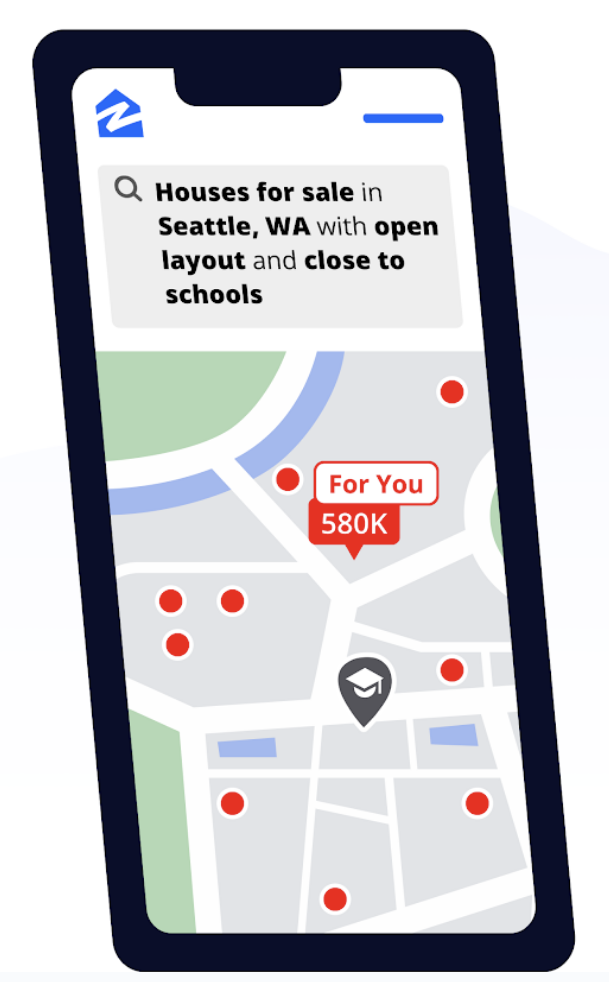
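The idea of concept search can be sketched in a few lines: listings and queries are both mapped to the same canonical concepts, and retrieval happens on those concepts rather than on raw keywords. The surface-form table, listing texts, and function names below are made up for illustration and are not Zillow's implementation.

```python
import re
from collections import defaultdict

# Hypothetical surface form -> canonical concept table (a stand-in for the KG).
CANONICAL = {
    "pool": "pool",
    "swimming pool": "pool",
    "swimmingpool": "pool",
    "fireplace": "fireplace",
    "fire place": "fireplace",
}


def concepts_in(text: str) -> set[str]:
    """Return the canonical concepts whose surface forms occur in `text`."""
    lowered = text.lower()
    return {
        concept
        for form, concept in CANONICAL.items()
        if re.search(r"\b" + re.escape(form) + r"\b", lowered)
    }


def build_index(listings: dict[str, str]) -> dict[str, set[str]]:
    """Index each listing under the canonical concepts found in its text."""
    index = defaultdict(set)
    for listing_id, description in listings.items():
        for concept in concepts_in(description):
            index[concept].add(listing_id)
    return index


def concept_search(query: str, index: dict[str, set[str]]) -> set[str]:
    """Return listings that contain every concept mentioned in the query."""
    wanted = concepts_in(query)
    if not wanted:
        return set()
    return set.intersection(*(index.get(c, set()) for c in wanted))


LISTINGS = {
    "A": "Sunny home with a swimming pool and fireplace.",
    "B": "Cozy condo with a fire place.",
    "C": "Large lot, swimmingpool in the backyard.",
}
INDEX = build_index(LISTINGS)
print(sorted(concept_search("pool", INDEX)))  # ['A', 'C']
```

Because both sides are normalized to the same concept, a query for "pool" retrieves listing C even though its description never contains the standalone word.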

Query Understanding: The KG helps in understanding various components of a user’s natural language query and enables concept search for items they are looking for in the query:

Query Understanding

User Profile: A user profile in search and recommendation engines is a personalized dataset that captures a user’s preferences, behavior, and attributes in order to tailor the experience and improve the relevance of search results. The KG helps create new user profile features based on the user’s explicit searches or their interactions with listings that are relevant to specific KG nodes they might be interested in. A more accurate user profile helps improve personalized recommendations:

User Profile
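One simple way to picture KG-derived profile features is to count how often a user touches each concept, either through an explicit search or through a viewed listing tagged with that concept. The event schema, the `LISTING_CONCEPTS` table, and the normalized-share feature below are assumptions made for this sketch.

```python
from collections import Counter

# Hypothetical mapping from listing id to the KG concepts attached to it.
LISTING_CONCEPTS = {
    "A": ["pool", "fireplace"],
    "B": ["fireplace"],
    "C": ["pool"],
}


def profile_features(events: list[dict], top_k: int = 3) -> dict[str, float]:
    """Return the share of interactions for the user's top_k concepts."""
    counts = Counter()
    for event in events:
        if event["type"] == "search":
            # Concepts already resolved from the query text.
            counts.update(event["concepts"])
        elif event["type"] == "listing_view":
            counts.update(LISTING_CONCEPTS.get(event["listing_id"], ()))
    total = sum(counts.values())
    return {concept: n / total for concept, n in counts.most_common(top_k)}


events = [
    {"type": "search", "concepts": ["pool"]},
    {"type": "listing_view", "listing_id": "A"},
    {"type": "listing_view", "listing_id": "C"},
]
print(profile_features(events))  # {'pool': 0.75, 'fireplace': 0.25}
```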

At Zillow, we work with data sources that are both structured and unstructured. Here are some examples of each type:

Creating a KG typically includes the following steps:

Knowledge extraction: This process deals with aggregating information across different data sources and getting it ready for ingestion into the KG. In our case, these are some of the data sources listed above. We use both statistical models and the latest transformer-based models to extract this information. (Read this blog post and this blog post for more information on keyphrase extraction.) We will touch briefly on the processes through which we extract this data:

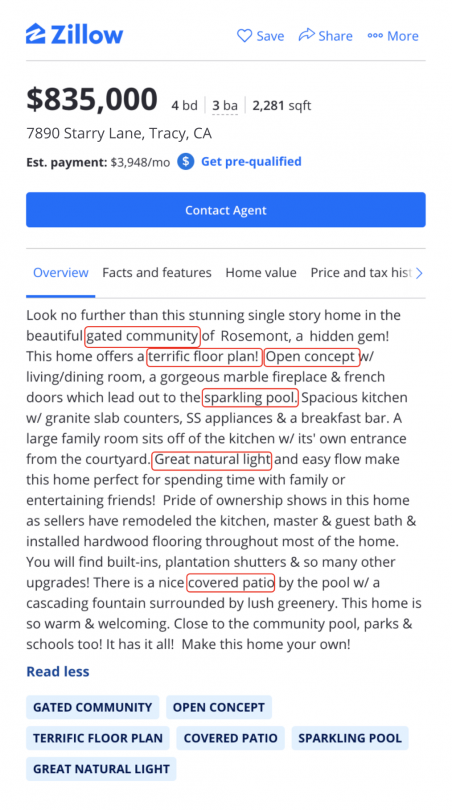

Figure 3: Example of extracting important home-related attributes from listing description.

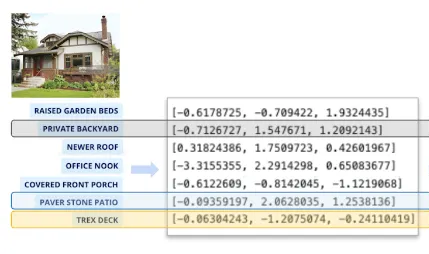

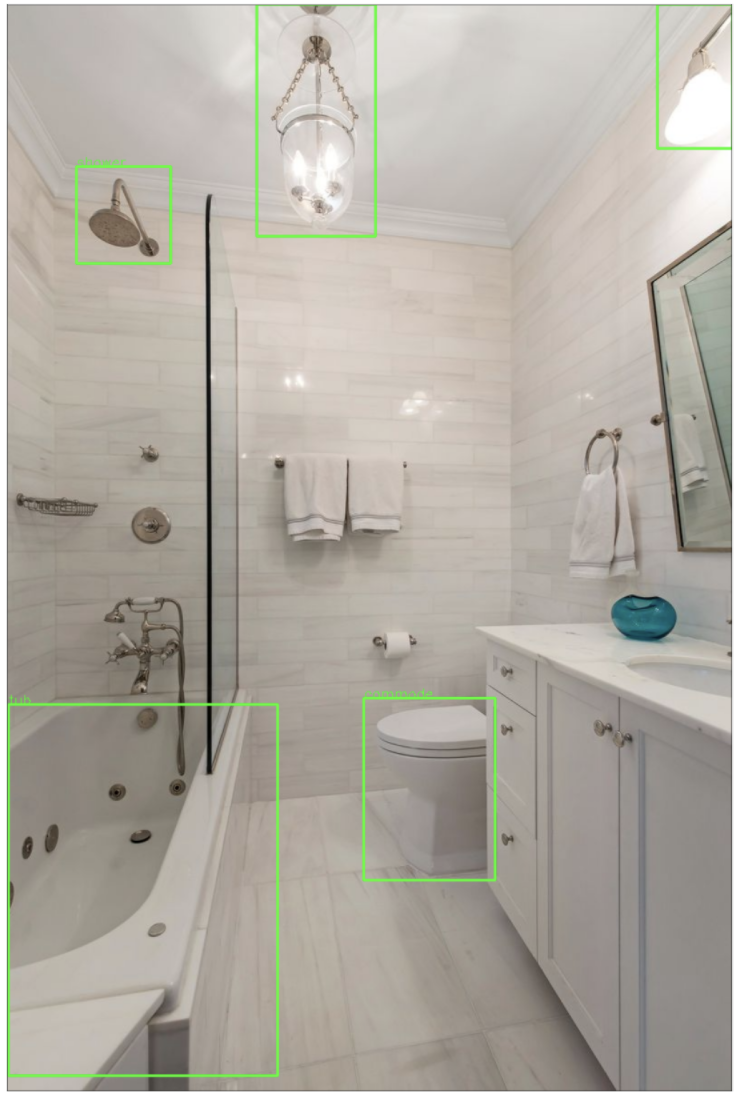

Figure 4: Example of identifying important home attributes from images

Defining Ontology: The next step in creating a KG typically involves normalizing the various data sources to standard ontologies and storing them in a standard format for easy consumption and inference. In simple terms, an ontology refers to the standard entities, classes, and relationships defined in a KG, along with guidelines on how to interact with them. Here are some sample node types and relation types defined for the real estate domain:

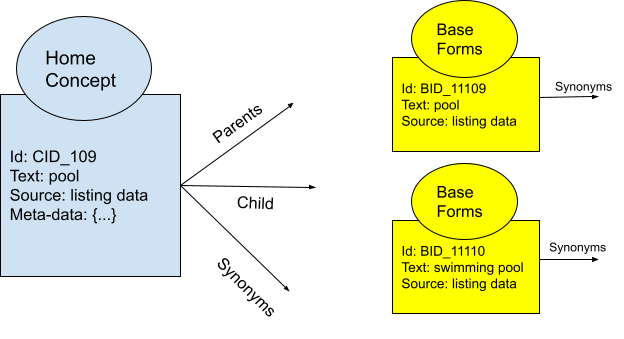

Figure 5: Example of Ontology nodes related to Home concept and Base form along with a set of relationship edges.

In Figure 5 we show two different node types to represent information:

The same design principles can be easily extended to other nodes such as agents, listing, etc.
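To make the notion of an ontology more concrete, here is a minimal sketch of typed nodes and typed edges. The two node types follow the names in Figure 5 and the relation types follow Figure 1; every field name, the `KnowledgeGraph` class, and its methods are hypothetical, and the exact semantics Zillow assigns to each node type are not reproduced here.

```python
from dataclasses import dataclass, field
from enum import Enum


class NodeType(Enum):
    HOME_CONCEPT = "home_concept"
    BASE_FORM = "base_form"


class RelationType(Enum):
    PARENT_OF = "parent_of"    # hypernym -> hyponym
    SYNONYM_OF = "synonym_of"


@dataclass(frozen=True)
class Node:
    node_id: str
    node_type: NodeType
    label: str


@dataclass(frozen=True)
class Edge:
    source: str
    relation: RelationType
    target: str


@dataclass
class KnowledgeGraph:
    nodes: dict[str, Node] = field(default_factory=dict)
    edges: set[Edge] = field(default_factory=set)

    def add_node(self, node: Node) -> None:
        self.nodes[node.node_id] = node

    def add_edge(self, source: str, relation: RelationType, target: str) -> None:
        # Enforce that edges only connect nodes the graph already knows about.
        if source not in self.nodes or target not in self.nodes:
            raise KeyError("both endpoints must exist before linking")
        self.edges.add(Edge(source, relation, target))

    def children(self, node_id: str) -> list[str]:
        """Return ids of nodes that `node_id` is a parent of."""
        return sorted(
            e.target for e in self.edges
            if e.source == node_id and e.relation is RelationType.PARENT_OF
        )


kg = KnowledgeGraph()
for node_id, label in [("pool", "pool"), ("infinity_pool", "infinity pool"),
                       ("swimming_pool", "swimming pool")]:
    kg.add_node(Node(node_id, NodeType.HOME_CONCEPT, label))
kg.add_edge("pool", RelationType.PARENT_OF, "infinity_pool")
kg.add_edge("pool", RelationType.SYNONYM_OF, "swimming_pool")
print(kg.children("pool"))  # ['infinity_pool']
```

Adding a new node type, such as an agent or a listing, would only require a new `NodeType` member and any relation types that apply to it.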

Normalization and entity disambiguation: One critical aspect of ingesting data across various sources is the different forms in which the same entity can appear across the dataset. For example, the home concept “pool” can appear as “pool”, “swimming pool”, “swimmingpool”, or “has_pool: True” across various text data sources. However, we know that they all refer to the same standard concept of pool, and hence they need to be normalized and stored correctly in the Knowledge Graph. There are a variety of ways of handling this task, but we will talk about two broad classes of methods we use:
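To make the normalization goal concrete, here is a minimal rule-based sketch that maps the surface forms from the example above onto a single canonical concept. The alias table and the `normalize` function are hypothetical and only cover the "pool" example; they are not a description of the methods used at Zillow.

```python
import re
from typing import Optional

# Hypothetical alias table: cleaned surface form -> canonical concept.
ALIASES = {
    "pool": "pool",
    "swimming pool": "pool",
    "swimmingpool": "pool",
    "has pool": "pool",
}

TRUTHY = {"true", "yes", "1"}


def normalize(raw: str) -> Optional[str]:
    """Map a raw text or key-value attribute to a canonical concept, if known."""
    text = raw.strip().lower()
    if ":" in text:
        # Structured attribute such as "has_pool: True".
        key, value = (part.strip() for part in text.split(":", 1))
        if value not in TRUTHY:
            return None  # the attribute is explicitly absent
        text = key
    # Collapse whitespace, underscores, and hyphens into single spaces.
    text = re.sub(r"[\s_\-]+", " ", text)
    return ALIASES.get(text)


for raw in ["pool", "Swimming  Pool", "swimmingpool", "has_pool: True"]:
    print(raw, "->", normalize(raw))
```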

Connecting nodes in the Knowledge Graph: A major step in KG creation is connecting the nodes with relevant links to make the KG more informative and help find newer insights. A part of the linking process can be done with the help of:

We will quickly touch upon one such method that we use for home concepts, which are normally in text form. For one of our use cases, we care mostly about detecting synonym, parent, and child links, as explained below:

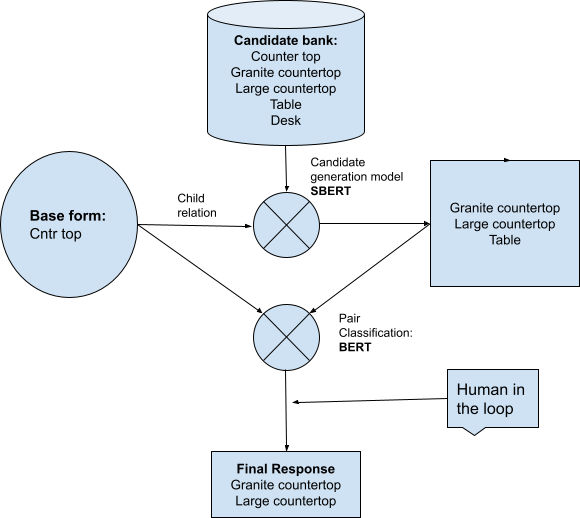

We have trained BERT-based models in-house that can help classify relationship types given a pair of nodes or generate candidates. Figure 6 depicts the flow of the link discovery process:

Base form represents the phrase for which we want to find child nodes. The candidate bank can be a list of all available nodes we can potentially connect to.
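The overall flow (take a base form, scan a candidate bank, and classify each pair) can be sketched as follows. The `toy_classifier` here is a deliberately simple string heuristic standing in for a trained pair classifier; it is an assumption made so the example runs without any model, and it does not reflect how the in-house BERT-based models work.

```python
from typing import Callable, Optional

Link = tuple[str, str, str]  # (base form, relation, candidate)


def toy_classifier(base_form: str, candidate: str) -> Optional[str]:
    """Stand-in for a learned classifier: return a relation label or None."""
    base, cand = base_form.lower(), candidate.lower()
    if cand == base:
        return None  # never link a phrase to itself
    if cand.replace(" ", "") == base.replace(" ", ""):
        return "synonym"  # same characters, different spacing
    if cand.endswith(" " + base):
        return "child"  # e.g. "lap pool" is a kind of "pool"
    return None


def discover_links(
    base_form: str,
    candidate_bank: list[str],
    classify: Callable[[str, str], Optional[str]] = toy_classifier,
) -> list[Link]:
    """Classify every (base form, candidate) pair and keep the predicted links."""
    links = []
    for candidate in candidate_bank:
        relation = classify(base_form, candidate)
        if relation is not None:
            links.append((base_form, relation, candidate))
    return links


bank = ["infinity pool", "lap pool", "garage", "pool"]
print(discover_links("pool", bank))
print(discover_links("fireplace", ["fire place", "garage"]))
```

A heuristic like this would label "swimming pool" as a child of "pool" rather than a synonym, which hints at why a learned model is a better fit for the classification step. Any callable with the same signature can be passed as `classify`.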

Figure 6: Explaining the process of discovering links across nodes in KG

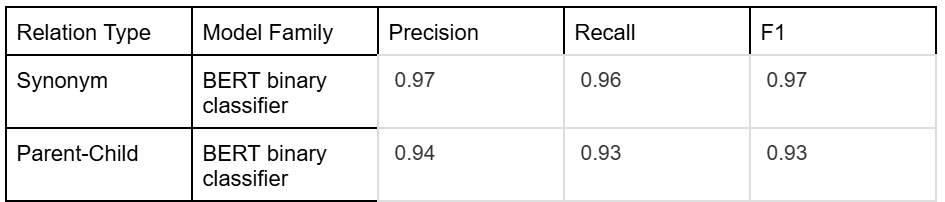

Table 1: Performance of in-house trained BERT-based models for Synonym and Parent-child relation discovery

KG updates and versioning: As you may have guessed by now, creating and maintaining the KG is a dynamic process, since there is a constant inflow of new information and updates across data sources. This makes maintaining and updating the KG a critical and challenging task if it is to return correct information at any point in time. Updates range from the more common changes to listing descriptions, images, and property structure data to rarer changes in the definitions of key concepts, parent-child relationships, and the creation of new nodes. To accommodate these changes, we need a KG update workflow and a versioning methodology for easy tracking and analysis in the future. Two broad classes of updates we see at Zillow and the complexities associated with them are:

There are multiple ways to handle the above-mentioned updates and tracking of the KG depending on the use case, frequency of data updates, and consuming applications. At Zillow, we adopt the following mechanism of KG updates and versioning:

The KG has been a great tool for us in aggregating data across different sources, standardizing it, and powering many new experiences and products. It enabled us to launch the first Natural Language Search experience in the real estate domain, and we observed lifts in customer experience metrics measured through A/B tests. We also observed a significant lift in the number of properties shown for keyword searches, a better ability to understand user queries, and better relevance scores for properties shown to users. The standardization also led to a better understanding of users and improved our search and ranking algorithms. The initial success has been encouraging and paves the way for future extensions of the KG, as well as for delighting our customers through new products and services.

This work would not have been possible without the active support and contribution of the amazing Search AI team here at Zillow. Kudos to Raghav Jajodia, Supriya Anand, Shourabh Rawat, and Jyoti Prakash Maheswari for their work in creating the platform and technology that helps in improving and creating new experiences for Zillow customers. We would also like to thank Eric Ringger and Matthew Danielson for their reviews and suggestions on the content of this blog.