Next Best Action platform: democratizing personalization with contextual bandits

The process of buying, selling or renting homes can be complicated, often leaving buyers, sellers and renters feeling unsure and overwhelmed. As a multifaceted platform, Zillow connects these buyers, sellers and renters with local market experts, and provides the information, technologies and services needed to help people confidently navigate their move. Given our varied offerings and diverse customer base, it’s critical that we present a personalized and contextualized view of each customer’s likely next actions across the various touchpoints within the Zillow ecosystem. We must also surface the content that resonates with each customer most, whether they encounter it on the Zillow app or website. This approach helps Zillow simplify the complex real estate process by guiding customers in the right direction.

With this mission in mind, we developed the Next Best Action (NBA) platform, which enables product and marketing teams to easily personalize and optimize customer communications, messages and calls to action (CTAs) for customers’ most important and relevant next steps.

In this blog post, we cover the NBA vision, the core AI model behind it, the supporting infrastructure, the model’s performance in a specific use case, and our plans for the future.

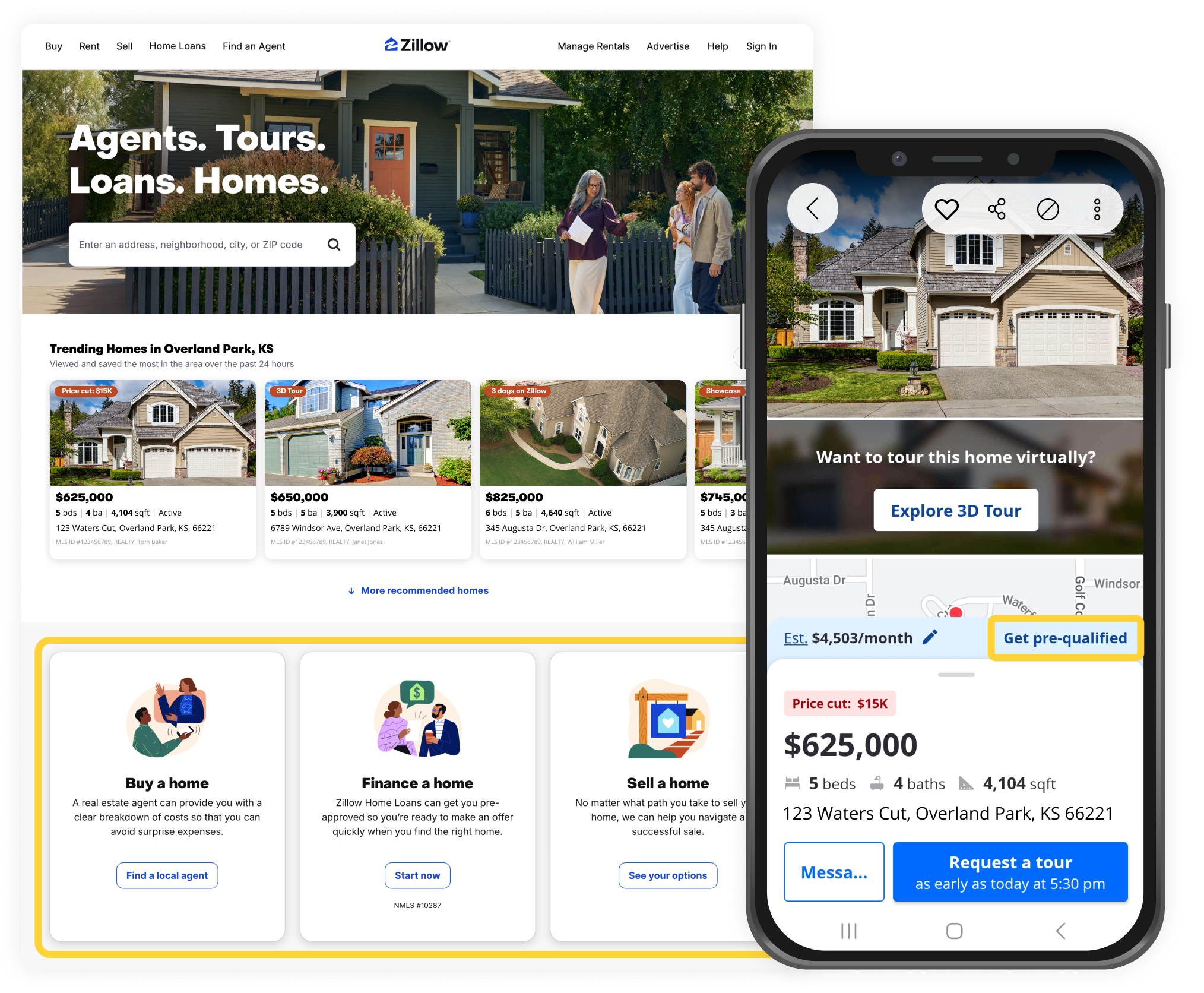

Given the variety of services, technologies and information available to support customers throughout their moving journey, our product and marketing teams create various entry points and value-proposition messages across the Zillow app and website. For example, the “Reason to Believe” tiles on the homepage help customers navigate Zillow’s main product offerings, while home detail pages prompt users to take a tour, contact an agent or talk to a lender about pre-qualification. (See Figure 1 for two example touchpoints.)

Figure 1: Left panel: The module of ‘Reason to Believe’ tiles on the Zillow homepage. Right panel: The module of calls to action in a Zillow home detail page.

Marketing and product teams often use a manual, iterative approach to curate messages; rely heavily on manually-tuned rules for targeting users with the most effective communications and CTAs; and roll out experiences globally after A/B testing. This approach has demonstrated significant business value during the initial product launch phase, but it becomes more challenging to meet customers’ needs as the product and customer base grow.

Challenge 1: Lack of personalization — Teams rely on A/B tests to find winning messages, often adopting a “winner takes all” approach. This fails to address the full spectrum of customer needs. For example, if a message succeeds with 60% of customers, it means the needs of the other 40% of customers aren’t being prioritized, which can reduce customer retention in the long run.
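To make the 60/40 example concrete, here is a small Python calculation with hypothetical click-through rates (the segment names, messages and numbers are invented for illustration and are not Zillow data). It compares the overall CTR of shipping a single A/B-test winner to everyone against showing each segment its own best message.

```python
# Hypothetical numbers for illustration only; not Zillow data.
SEGMENT_SHARE = {"segment_a": 0.6, "segment_b": 0.4}
CTR = {
    "segment_a": {"msg_1": 0.10, "msg_2": 0.04},
    "segment_b": {"msg_1": 0.03, "msg_2": 0.08},
}


def winner_takes_all_ctr(share, ctr):
    """Overall CTR when the single best message on average is shown to everyone."""
    messages = next(iter(ctr.values())).keys()
    overall = {m: sum(share[s] * ctr[s][m] for s in share) for m in messages}
    return max(overall.values())


def personalized_ctr(share, ctr):
    """Overall CTR when every segment sees its own best message."""
    return sum(share[s] * max(ctr[s].values()) for s in share)


print(round(winner_takes_all_ctr(SEGMENT_SHARE, CTR), 3))  # 0.072
print(round(personalized_ctr(SEGMENT_SHARE, CTR), 3))      # 0.092
```

Under these made-up numbers, the winner-takes-all message leaves the 40% segment with a 3% CTR, while serving each segment its best message lifts the overall CTR from 7.2% to 9.2%.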

Challenge 2: Slow speed & increased effort to learn — For teams without personalization resources, optimizing messages through sequential A/B testing requires iterating on rule-based hypotheses. This slows down learning and requires additional engineering and data science resources. Even teams with sufficient AI resources face lengthy experimental cycles that demand significant time and effort to collect data, build and evaluate models, and conduct A/B tests, often taking weeks or months to complete.

Challenge 3: Opportunity cost of sequential testing — As new products and CTAs are introduced and creative customer-targeted messages are designed, stakeholders must evaluate these new variants and calibrate them against what’s currently live on the website, app or other property. Exploring the viability of new products, CTAs or messages can negatively impact overall engagement if they prove to be irrelevant or nonviable.

Challenge 4: Stale rules and models — Manually-tuned rules and static models are based on the data collected for a specific time period, often failing to adapt to shifting customer needs and business priorities.

The NBA platform aims to overcome the challenges of the traditional iterative and sequential approach to optimizing communications. Our goal is to enable product, marketing and business teams to easily and efficiently create personalized experiences that target users with relevant messages and dynamically adjust to changing business goals. More specifically, with NBA, we have focused on achieving the following objectives.

NOTE: For simplicity, in the following sections we will use “action” to generally refer to any messages, CTAs, communications or product offerings.

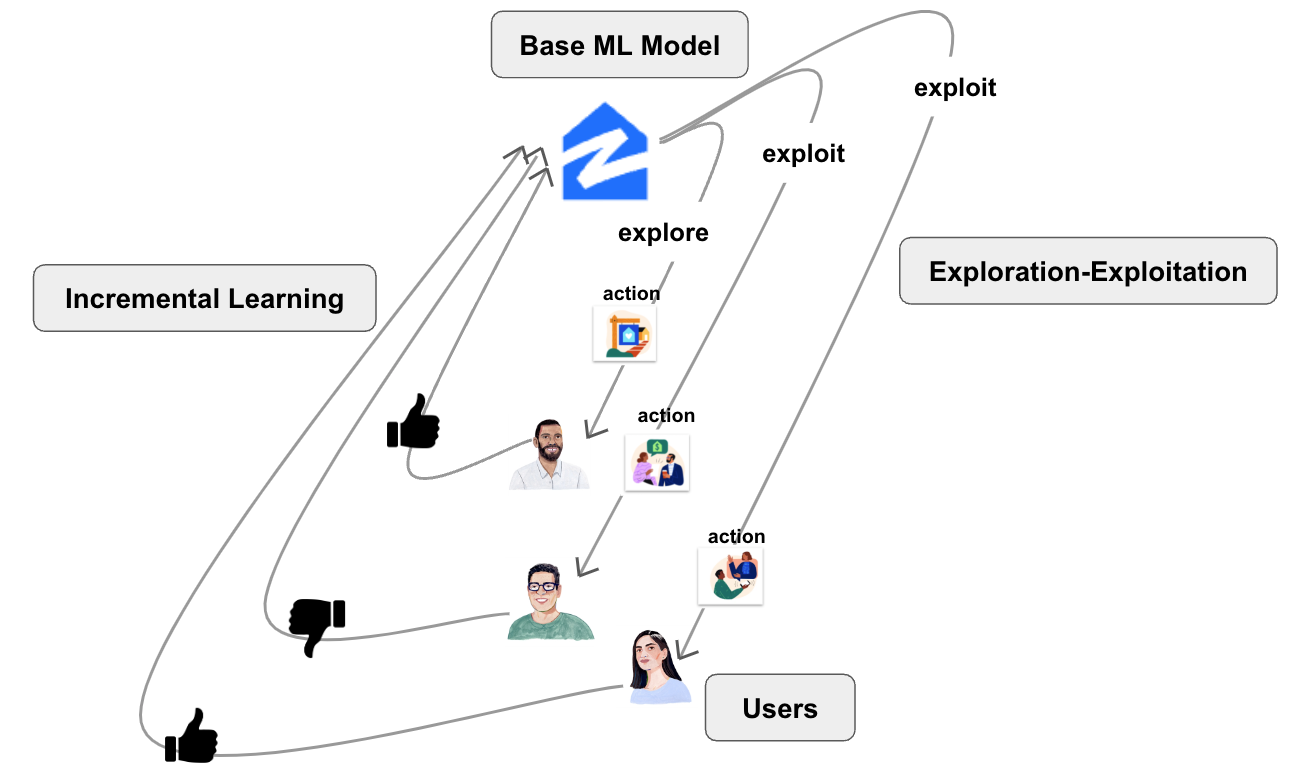

Bandit algorithms, which optimize the explore/exploit tradeoff, are a technique commonly used to achieve such a vision. Contextual bandit algorithms are a special type of bandit algorithm that tailors actions to specific user contexts. In this section, we introduce the core AI model for NBA, the contextual bandit (CXB) model, as well as the core AI capabilities it enables.

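We formulate action personalization as a contextual bandit problem, where at each time step (or trial) t ∈ {1, 2, …, T}, a learner repeatedly: (1) observes a context x_t, consisting of features of the current user and the set of available actions A_t; (2) selects an action a_t ∈ A_t and receives a reward r_t, such as a click; and (3) updates its action-selection strategy with the new observation (x_t, a_t, r_t).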

The goal of the bandit algorithm is to find a policy that maximizes the expected cumulative reward over time. The policy determines which action to choose at each step, and it improves as exploration gathers more feedback.
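Stated as a formula, the objective reads as follows. The notation is ours, chosen for illustration: x_t is the context at step t, π is a policy mapping contexts to actions, and r_{t,a} is the reward received for showing action a at step t.

```latex
\pi^{*} = \arg\max_{\pi} \; \mathbb{E}\left[ \sum_{t=1}^{T} r_{t,\pi(x_t)} \right]
```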

Figure 2: A diagram for personalizing users’ next actions through the CXB model.

Specifically, the CXB model we built for the NBA platform is composed of three key components, illustrated in Figure 2.

Overall, the CXB approach uses an online learning algorithm that sequentially selects the next best action for each user, or explores alternative actions, based on contextual information about users and actions. It simultaneously adapts this strategy as new user feedback is collected and the action pool changes, in order to maximize total reward.
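The post does not name the specific bandit algorithm behind CXB. As a minimal sketch of the select/observe/update loop described above, the Python class below uses Beta-Bernoulli Thompson sampling, a standard bandit technique swapped in here for illustration. It assumes that context has been reduced to a discrete user segment and that rewards are binary clicks; the class and segment names are hypothetical.

```python
import random
from collections import defaultdict


class SegmentThompsonBandit:
    """Beta-Bernoulli Thompson sampling with one posterior per (segment, action).

    Illustrative assumption: context is reduced to a discrete segment label
    and the reward is binary (1 = click, 0 = no click).
    """

    def __init__(self, actions, seed=None):
        self.actions = list(actions)
        self.rng = random.Random(seed)
        # Beta(1, 1) prior (uniform CTR) for every (segment, action) pair.
        self.alpha = defaultdict(lambda: 1.0)  # 1 + observed clicks
        self.beta = defaultdict(lambda: 1.0)   # 1 + observed non-clicks

    def select(self, segment):
        """Sample a plausible CTR per action and return the action with the best sample."""
        samples = {
            action: self.rng.betavariate(self.alpha[(segment, action)],
                                         self.beta[(segment, action)])
            for action in self.actions
        }
        # Uncertain actions sometimes draw high samples: that is the exploration.
        return max(samples, key=samples.get)

    def update(self, segment, action, reward):
        """Fold one observed reward into the posterior for (segment, action)."""
        self.alpha[(segment, action)] += reward
        self.beta[(segment, action)] += 1 - reward

    def add_action(self, action):
        """New actions start from the uniform prior, so they get explored."""
        if action not in self.actions:
            self.actions.append(action)
```

For example, if renters click Rent far more often than Sell, repeatedly calling `select("renter")` and feeding each click back through `update` shifts most renter traffic to Rent, while Sell is still shown occasionally as long as its estimate remains uncertain.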

The CXB model unlocks the following AI capabilities, which together fulfill the NBA vision.

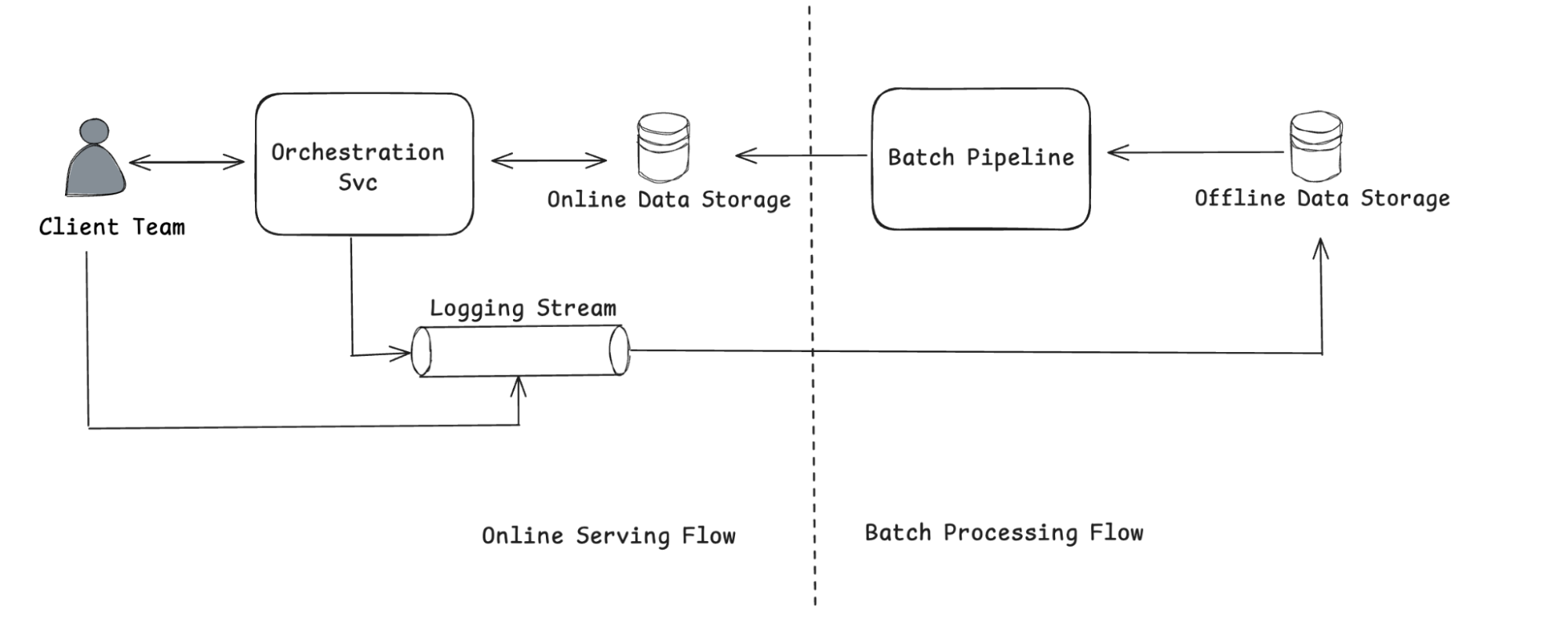

To support these AI capabilities across various use cases, we built the NBA infrastructure, which includes a batch processing flow and an online serving flow. The batch infrastructure handles:

The online serving component processes requests from client teams and retrieves personalized decisions from online storage. Both the response information from the NBA orchestration service and the reward information from client systems are critical to the NBA platform’s learning capabilities. For this reason, the online server includes a library that renders this information into a standardized format for batch processing.
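The post does not describe the log schema. A minimal sketch of what such a standardized format could look like, assuming hypothetical field names (`request_id`, `use_case`, `model_version` and so on): the serving side emits one JSON record per decision, client systems emit reward records keyed by the same request ID, and the batch side joins the two into training examples.

```python
import json
from dataclasses import asdict, dataclass
from typing import List


@dataclass
class DecisionRecord:
    """What the serving layer returned for one request (hypothetical schema)."""
    request_id: str
    user_id: str
    use_case: str
    ranked_actions: List[str]
    model_version: str


@dataclass
class RewardRecord:
    """Feedback reported by a client system for one request (hypothetical schema)."""
    request_id: str
    action: str
    reward: float


def to_json_line(record) -> str:
    """Serialize a record as one JSON line for the batch pipeline."""
    return json.dumps(asdict(record), sort_keys=True)


def join_for_training(decisions, rewards):
    """Attach rewards to the decisions that produced them, matched on request_id.

    Decisions without any reward keep an empty mapping, so a batch job can
    treat them as zero-reward (no-click) observations.
    """
    rewards_by_request = {}
    for r in rewards:
        rewards_by_request.setdefault(r.request_id, {})[r.action] = r.reward
    return [
        {**asdict(d), "rewards": rewards_by_request.get(d.request_id, {})}
        for d in decisions
    ]
```

Keying both record types on a shared request ID is the design choice that lets decisions and delayed rewards arrive from different systems and still be matched later in batch.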

In this section, we showcase the performance of the CXB model in a specific use case to demonstrate its effectiveness in application.

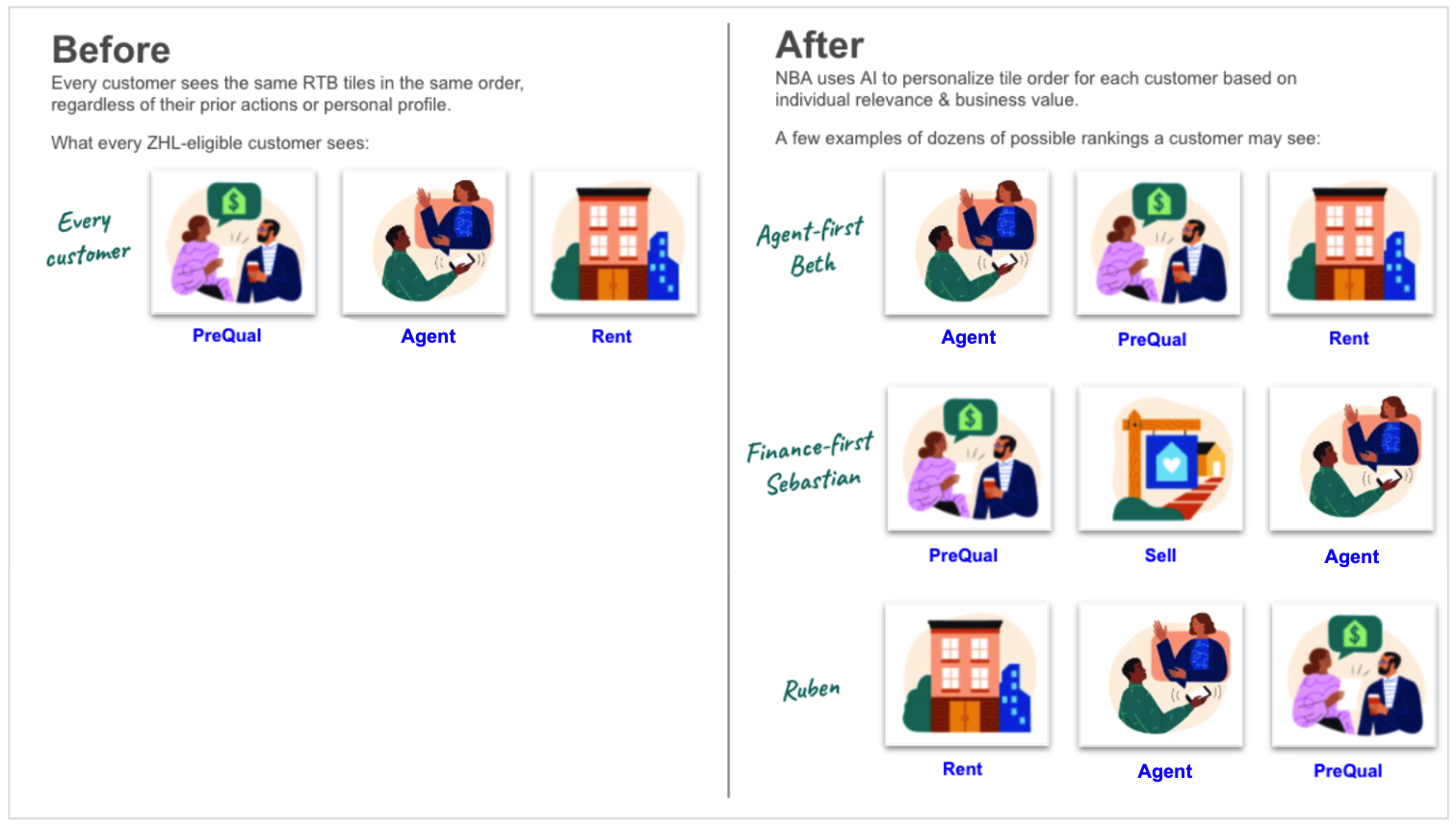

Each month, the Zillow homepage serves as the starting point for millions of customers’ real estate journeys. The “Reason to Believe” (RTB) tiles on the homepage (see the left panel in Figure 1) help customers navigate Zillow’s main product offerings without needing a complicated top navigation menu. Before the integration with NBA, all eligible customers saw the same RTB messages in the same order, regardless of their past behavior or persona. We used the NBA platform to optimize the content and ordering of the RTB tiles on the Zillow homepage, choosing from four candidates: Home Loan Prequalification, Buy with Agent, Rent and Sell. Figure 4 compares the user experience before and after NBA personalization. In this use case, each RTB corresponds to one action in the CXB model, and the model gradually learns users’ RTB preferences by collecting feedback. This process considers users’ context information, including their home-browsing and engagement history, as well as the business value of clicks on different types of RTBs.

Figure 4: A comparison of user experiences without NBA and with NBA.
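To illustrate how context can turn into a per-user tile ordering, here is a simplified Python sketch. The event names, features and per-tile weights are hypothetical, and a hand-set linear score stands in for whatever model CXB actually uses; in a bandit, such weights would be learned from feedback rather than chosen by hand.

```python
# Hypothetical event names, features and weights for illustration only.
TILE_WEIGHTS = {
    "Home Loan Prequalification": {"bias": 0.02, "sale_view_share": 0.04},
    "Buy with Agent": {"bias": 0.02, "sale_view_share": 0.06},
    "Rent": {"bias": 0.01, "rental_view_share": 0.10},
    "Sell": {"bias": 0.01, "owner_dashboard_share": 0.10},
}


def context_features(events):
    """Summarize a user's recent events as shares of each event type."""
    n = max(len(events), 1)  # avoid dividing by zero for brand-new users
    return {
        "bias": 1.0,
        "rental_view_share": events.count("view_rental") / n,
        "sale_view_share": events.count("view_for_sale") / n,
        "owner_dashboard_share": events.count("view_owner_dashboard") / n,
    }


def score(weights, features):
    """Linear score: sum of weight * feature value over the feature names."""
    return sum(weights.get(name, 0.0) * value for name, value in features.items())


def rank_tiles(tile_weights, features):
    """Order tiles from highest to lowest score for this user."""
    return sorted(tile_weights, key=lambda t: score(tile_weights[t], features), reverse=True)


renter = context_features(["view_rental"] * 4)
buyer = context_features(["view_for_sale"] * 3 + ["view_rental"])
print(rank_tiles(TILE_WEIGHTS, renter)[0])  # Rent
print(rank_tiles(TILE_WEIGHTS, buyer)[0])   # Buy with Agent
```

The same four candidates are ranked differently for the two users, which is the behavior Figure 4 contrasts with a single fixed ordering.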

Based on this use case, we ran multiple experiments to validate each of the NBA platform’s AI capabilities. In this subsection, we present the key results from both online A/B tests and offline evaluations.

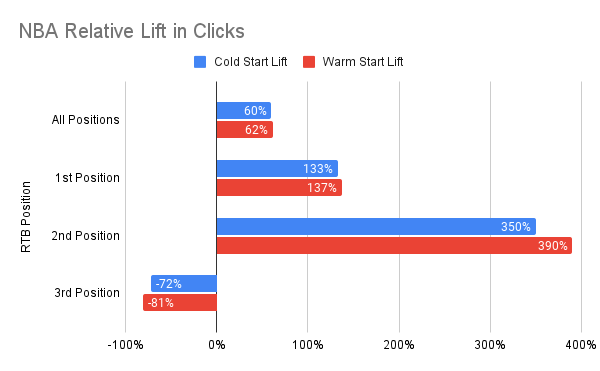

Figure 5: A comparison of NBA relative lift in clicks, demonstrating the AI capabilities of personalization and cold start.

Personalization. To showcase the personalization capability of the NBA platform, we conducted an online A/B test comparing the static RTB ordering against the personalized ordering from NBA. The results, shown as red bars in Figure 5, indicate that compared to the status quo “winner takes all” baseline, NBA increased total clicks and shifted more clicks to the top positions. This demonstrates that NBA can offer an improved, personalized user experience by placing the actions users are more likely to engage with in the prominent top positions.

Cold start. To validate the cold-start capability of the NBA platform, we initialized the CXB model in two ways: a cold start, in which different RTBs were randomly exposed to users, and a warm start, in which actions were recommended by a model pre-trained on data collected from the randomization test. We then compared the two in an online A/B test. The cold-start bandit achieved performance comparable to the warm-start bandit within five days of exploration (Figure 5). This suggests that a separate random data-collection phase may be unnecessary for new use cases, and that experimental cycles can be drastically reduced without compromising the quality of the messages and actions shown to users.
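Under the simplifying assumption of a Beta-Bernoulli bandit (as in a Thompson-sampling setup), the difference between the two initializations comes down to the prior placed on each action’s CTR. The sketch below is illustrative only: `beta_prior` and its `prior_strength` cap are hypothetical and are not the NBA platform’s actual warm-start procedure.

```python
def beta_prior(clicks=0.0, impressions=0.0, prior_strength=None):
    """Return (alpha, beta) for a Beta prior on one action's CTR.

    Cold start: no logged data, so Beta(1, 1), a uniform prior over [0, 1].
    Warm start: clicks/impressions from a randomization test shift the prior
    toward the observed CTR. prior_strength (hypothetical knob) caps how many
    pseudo-impressions the logged data counts for, keeping the prior loose
    enough for the online bandit to keep adapting.
    """
    if impressions <= 0:
        return 1.0, 1.0
    if prior_strength is not None and impressions > prior_strength:
        # Rescale counts so the observed CTR is preserved but the weight is capped.
        clicks = clicks * prior_strength / impressions
        impressions = prior_strength
    return 1.0 + clicks, 1.0 + impressions - clicks


print(beta_prior())                              # (1.0, 1.0)
print(beta_prior(30, 1000))                      # (31.0, 971.0)
print(beta_prior(30, 1000, prior_strength=100))  # (4.0, 98.0)
```

A warm prior starts the bandit near the CTRs observed in the randomization test, while a cold prior starts it knowing nothing, so early traffic is spread more evenly across actions until feedback accumulates.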

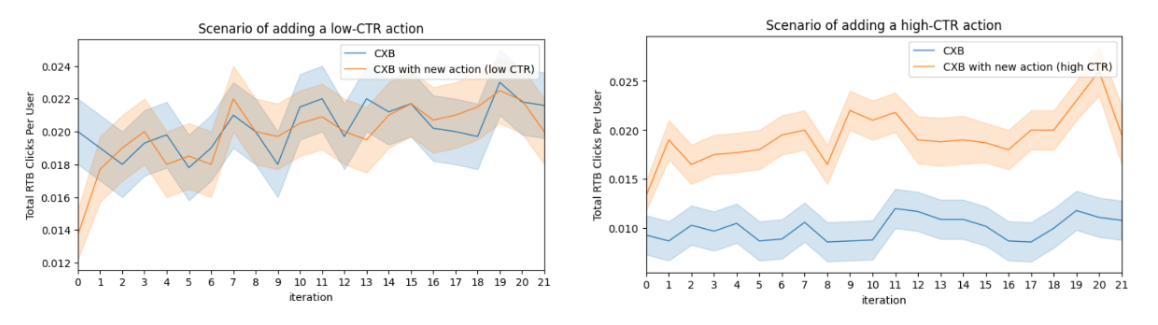

Figure 6: A comparison of the total RTB clicks per user between NBA without new action (blue) and NBA with new action (orange), to demonstrate the AI capabilities of adaptiveness through exploration and exploitation. Left panel: The new action added has a low CTR. Right panel: The new action added has a high CTR.

Exploration-exploitation and adaptiveness. To show that NBA can support a changing set of actions, we ran offline experiments simulating the addition of a new action to the contextual bandit and compared the performance of NBA with and without the new action. If the new action has a low click-through rate (CTR), the CXB model captures the same number of clicks as the model without it (Figure 6, left panel). If the new action has a high CTR, the model adapts and captures more clicks than the model without it (Figure 6, right panel). This demonstrates that the model can automatically explore the viability of newly added actions and adapt to provide a good personalized ranking among all actions; in these simulations, adding a new action did not hurt total reward, whether or not the new action performed well.
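A toy version of this offline experiment fits in a few lines. The sketch assumes Beta-Bernoulli Thompson sampling and made-up true CTRs (the action names and rates are hypothetical, and this is not the NBA simulator): it runs the bandit once without and once with an extra action, then reports total clicks and how often each action was shown.

```python
import random


def simulate(true_ctr, rounds, seed=0):
    """Beta-Bernoulli Thompson sampling against simulated clicks.

    true_ctr maps action -> click probability (hypothetical values).
    Returns (total clicks, number of times each action was shown).
    """
    rng = random.Random(seed)
    alpha = {a: 1.0 for a in true_ctr}  # 1 + clicks
    beta = {a: 1.0 for a in true_ctr}   # 1 + non-clicks
    shown = {a: 0 for a in true_ctr}
    clicks = 0
    for _ in range(rounds):
        sampled = {a: rng.betavariate(alpha[a], beta[a]) for a in true_ctr}
        action = max(sampled, key=sampled.get)
        reward = 1 if rng.random() < true_ctr[action] else 0
        alpha[action] += reward
        beta[action] += 1 - reward
        shown[action] += 1
        clicks += reward
    return clicks, shown


baseline = {"Rent": 0.05, "Sell": 0.10}
with_weak_new = {**baseline, "New": 0.02}
with_strong_new = {**baseline, "New": 0.25}

for name, ctrs in [("baseline", baseline), ("weak new", with_weak_new),
                   ("strong new", with_strong_new)]:
    clicks, shown = simulate(ctrs, rounds=5000)
    print(name, clicks, shown)
```

With a new action whose true CTR is well below the incumbents’, the bandit should show it only rarely and total clicks should stay close to the baseline; with a much better new action, it should quickly shift most traffic to it. Exact counts depend on the random seed.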

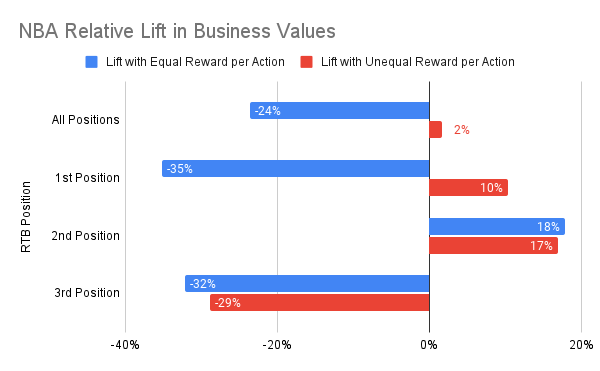

Figure 7: A comparison of NBA relative lift in business values to demonstrate the AI capability of the business “steering wheel”.

Business “steering wheel”. To validate that the NBA platform can adapt to changing business objectives on the fly, we conducted an online A/B test. Both buckets were initially set with equal rewards for each action; one bucket was then switched to unequal rewards reflecting the actual business value of clicking each action. Compared to the equal-reward bucket, the business-value bucket converged to the optimal solution within three days of the reward update, achieving the lift shown in Figure 7. This increased the overall business value of clicks, as well as the value derived from the first two positions. This validates the NBA platform’s ability to adapt to changing business goals, optimize on the fly and drive more high-value clicks.
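A simple way to see why changing the rewards changes the optimal ordering: if the reward for a click on action a equals its business value v_a, the expected reward of showing a is P(click | a) × v_a. The Python sketch below uses hypothetical click probabilities and values (not Zillow’s) to show the ranking flip once unequal values are introduced.

```python
def rank_by_expected_value(click_prob, click_value):
    """Rank actions by expected reward = P(click) * business value of a click.

    Actions missing from click_value get a value of 1.0 (equal rewards).
    """
    expected = {a: p * click_value.get(a, 1.0) for a, p in click_prob.items()}
    return sorted(expected, key=expected.get, reverse=True)


# Hypothetical per-user click probabilities and business values.
click_prob = {"Home Loan Prequalification": 0.03, "Buy with Agent": 0.04,
              "Rent": 0.08, "Sell": 0.02}
equal_values = {}
business_values = {"Home Loan Prequalification": 5.0, "Buy with Agent": 4.0,
                   "Rent": 1.0, "Sell": 3.0}

print(rank_by_expected_value(click_prob, equal_values))
# ['Rent', 'Buy with Agent', 'Home Loan Prequalification', 'Sell']
print(rank_by_expected_value(click_prob, business_values))
# ['Buy with Agent', 'Home Loan Prequalification', 'Rent', 'Sell']
```

In a bandit, the same weighting would be applied to the reward signal during learning (reward = value × click), so the policy re-converges toward the new ordering as feedback under the updated rewards accumulates.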

In this section, we will outline the exciting future work that will help unlock the next phase of the NBA platform. This includes real-time serving, test-free impact measurement and scalable action serving for multiple use cases.

We have already launched the initial version of the NBA system, which pre-computes decisions offline and serves them online upon request. However, the system cannot yet process real-time context information or refresh the model more frequently (e.g., per session instead of daily). We believe that building an NBA system that ingests real-time user features and context, then computes decisions and updates the model in real time, will significantly boost the platform’s performance in both personalization and business-value optimization.

One of the key advantages of NBA is its adaptiveness. Because the bandit model learns online and adjusts to its optimal state when conditions change (e.g., when a new action is added), it eliminates the need for traditional A/B tests. However, businesses sometimes want to understand the ROI of an iteration, which traditional A/B tests provide. In response, we are developing an analysis method that offers similar insights and impact measurement.
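The post does not say which analysis method is being developed. One standard technique for estimating a policy’s impact from logged bandit data without a dedicated A/B test is off-policy evaluation with inverse propensity scoring (IPS). The sketch below shows that swapped-in technique with hypothetical logs and policies, and should not be read as Zillow’s method. It relies on logging, for each decision, the probability with which the serving policy chose the action it showed.

```python
def ips_estimate(logs, target_policy):
    """Inverse propensity scoring (IPS) estimate of a policy's average reward.

    logs: iterable of (context, action, reward, propensity), where propensity
          is the probability the logging policy gave the logged action (> 0).
    target_policy: function context -> {action: probability}.
    """
    logs = list(logs)
    total = 0.0
    for context, action, reward, propensity in logs:
        # Reweight each logged reward by how much more (or less) often the
        # target policy would have chosen the same action.
        total += reward * target_policy(context).get(action, 0.0) / propensity
    return total / len(logs)


# Hypothetical logs from a uniformly random policy over two actions.
logs = [
    ("renter", "Rent", 1, 0.5),
    ("renter", "Sell", 0, 0.5),
    ("owner", "Rent", 0, 0.5),
    ("owner", "Sell", 1, 0.5),
]


def personalized(context):
    return {"Rent": 1.0} if context == "renter" else {"Sell": 1.0}


def uniform(context):
    return {"Rent": 0.5, "Sell": 0.5}


print(ips_estimate(logs, personalized))  # 1.0
print(ips_estimate(logs, uniform))       # 0.5
```

IPS is unbiased when propensities are logged correctly and are nonzero for every action the target policy might choose, but its variance grows when those propensities are small.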

As Zillow develops the real estate super app, an integrated digital experience that connects all of the fragmented pieces of the moving process on one transaction platform, a significant challenge arises for NBA: how to serve distinct needs for all use cases across different touchpoints, while ensuring that prompts do not conflict. To this end, we are exploring a taxonomy approach that will effectively organize use cases and lines of business, and serve as a backbone to scale the NBA model across different touchpoints.

This project is a true team effort, and there are many people to thank. A big thank-you to the MarTech, Marketing, Data Engineering and Growth AI teams for bringing the NBA vision to life. Special thanks to Matt Schuerman and Aditya Sundaram for leading the engineering effort that made this incredible platform a reality for Zillow teams and customers. A special thanks also to our cross-functional product and engineering leaders — Rebecca Brown, Dili Wu, Sam Jorgensen, Ondrej Linda, Dan Glasser, Deepthi Kondapalli and Ayse Kulahci — for navigating this NBA journey. Similarly, thanks to Connie Jimenez and Kane Merrill for their partnership in marketing experimentation.