Helping Buyers Explore the Real Estate Market via Personalized Recommendation Diversity

In this post we examine the application of a diversification algorithm to improve the diversity of our home recommendations.

Note: All work described in this blog post was done by Kai Liu from the University of Texas at Austin.

Home recommendations at Zillow are delivered via several channels, including email and mobile push notifications, to present a prospective buyer with a collection of relevant homes. Real estate poses several unique and interesting challenges for designing such a system. For example, in many high-demand real estate markets, newly listed homes are of great interest to prospective buyers. Because new listings have not yet been seen and interacted with by a sufficient number of users, implicit signal about them is lacking, yet they still need to be promptly recommended to relevant users. A content-based model can address this new-listing cold-start problem by scoring the relevance of each home for each user, evaluating the match between the user’s preference profile and the home’s attributes.

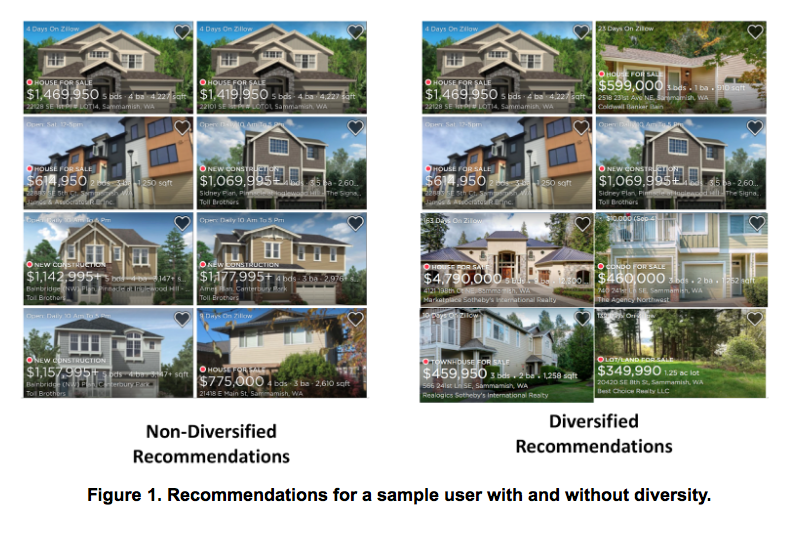

Such a content-based model is trained to predict a user’s click probability for each home (or “listing”) independently of all other homes. Consequently, similar recommended homes tend to have similar scores, and simply ranking these items by score may result in recommending nearly identical items (Figure 1). This lack of diversity among the recommendations fails to provide users with the variety of options necessary to discover what they care about, especially for buyers exploring the home market.

In this post we examine the application of a diversification algorithm [1] to improve the diversity of our home recommendations. The main reasons for choosing this method are its ease of implementation and its applicability as a post-process for our (or any) recommender system.

Our home recommendation engine produces a set of candidate homes for each user, each with its predicted relevance score. The user’s candidate set is selected with the objective of including all of the homes that are relevant to that user while minimizing the size of the set. For example, one such possible candidate selection strategy might only consider homes in cities where the user has recently searched and which are priced within the user’s expected price range.

Next, we take the scored candidate homes as input and feed them to the diversification stage, where we re-sort the scored list A = {a1, a2, …, an} by optimizing the following submodular objective function [1]:
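A form of this objective consistent with the definitions used in this post is sketched below. Where exactly λ enters is an assumption, inferred from its description as a weight on the user preference; the relevance term is assumed to be a plain sum of per-item scores.

$$
\max_{A_k \subseteq A,\; |A_k| = k} \;\; \lambda \, \Big\langle w,\; \log\Big(1 + \sum_{a \in A_k} a\Big) \Big\rangle \;+\; \sum_{a \in A_k} s(a)
$$

The logarithm is applied element-wise to the d-dimensional vector of per-category counts.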

Here Ak is a subset of size k of the set of candidate homes A. Each home ai in A is represented by a one-hot-encoded d-dimensional category vector, where d is the number of categories (the different types of homes that we are trying to diversify over). Further, w denotes a d-dimensional vector that encodes user preferences for each of the d categories. The scalar λ is a weight on the user preference term, which can be adjusted to vary the emphasis on diversity. Finally, s(a) is a function that maps an item to a score, such as the click probability (i.e., relevance score) computed by our recommendation model.

In the above equation, the weight vector w emphasizes the importance of each category to a user. By summing over per-item category vectors a in a given subset of items, we compute the total number of times that each category occurs in this subset. The logarithm has the following effect: the incremental utility of each additional item from category i decreases as more items from the same category are included in the subset. By optimizing this submodular objective function, highly relevant items are selected while preference is also given to selected items spanning a diverse set of categories.

In practice, we solve the above optimization problem approximately using a greedy forward selection algorithm. We start with an empty list of selected items. At each step, we provisionally add each remaining candidate to the selected set and compute the value of the objective function on that provisional set; the item that produces the highest value is added to the selected list. This process is repeated until the required number of diversified items has been selected.

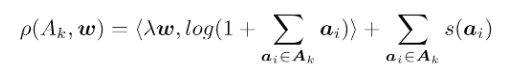
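The greedy selection described above can be sketched in a few lines of Python. This is a minimal illustration rather than our production code: the tuple layout `(home_id, category, score)`, the function names, and the exact form of the objective (λ times the log-coverage term plus the sum of relevance scores) are assumptions made for the example.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical item layout for this sketch: (home_id, category index, relevance score).
Item = Tuple[str, int, float]


def objective(selected: Sequence[Item], weights: Sequence[float], lam: float) -> float:
    """Assumed objective: lam * <w, log(1 + category counts)> + sum of scores."""
    counts = [0] * len(weights)
    relevance = 0.0
    for _, category, score in selected:
        counts[category] += 1
        relevance += score
    coverage = sum(w * math.log(1 + n) for w, n in zip(weights, counts))
    return lam * coverage + relevance


def greedy_diversify(candidates: Sequence[Item], weights: Sequence[float],
                     lam: float, k: int) -> List[Item]:
    """Greedy forward selection: repeatedly add the item that maximizes the objective."""
    remaining = list(candidates)
    selected: List[Item] = []
    while remaining and len(selected) < k:
        best = max(remaining, key=lambda item: objective(selected + [item], weights, lam))
        selected.append(best)
        remaining.remove(best)
    return selected


# Toy example: two near-duplicate homes in category 0 and one home each in categories 1 and 2.
homes = [("a", 0, 0.90), ("b", 0, 0.88), ("c", 1, 0.85), ("d", 2, 0.50)]
uniform_w = [1 / 3, 1 / 3, 1 / 3]
diversified = greedy_diversify(homes, uniform_w, lam=1.0, k=3)
by_score_only = greedy_diversify(homes, uniform_w, lam=0.0, k=3)
```

With λ = 0 the procedure reduces to sorting by score. With λ = 1 and uniform weights, the home from category 1 is promoted ahead of the second home from category 0, because the log term discounts repeated categories.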

While we could partition listed homes based on price or house type, at a more fine-grained level there are no natural categories readily available. As a result, we decided to partition all listings into a small set of distinct categories via clustering. For simplicity we consider only the most discriminative home attributes, namely price, square footage (sqft), and house type. We cluster the listings into 20 clusters using the bisecting k-means algorithm [2].
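To make the idea of bisecting k-means concrete, here is a simplified, dependency-free sketch. It is not the implementation we used: the deterministic farthest-point seeding, the rule of always splitting the cluster with the largest within-cluster squared error, and all function names are assumptions for illustration. In practice the features (price, sqft, encoded house type) would also need to be put on comparable scales first.

```python
from typing import List, Sequence

Point = Sequence[float]


def _dist2(p: Point, q: Point) -> float:
    """Squared Euclidean distance."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def _mean(points: Sequence[Point]) -> List[float]:
    n = len(points)
    return [sum(col) / n for col in zip(*points)]


def _sse(points: Sequence[Point]) -> float:
    """Within-cluster sum of squared errors."""
    center = _mean(points)
    return sum(_dist2(p, center) for p in points)


def _two_means(points: Sequence[Point], iters: int = 20):
    """Split one cluster in two with plain 2-means and deterministic seeding."""
    c0 = max(points, key=lambda p: _dist2(p, points[0]))
    c1 = max(points, key=lambda p: _dist2(p, c0))
    left, right = list(points), []
    for _ in range(iters):
        left = [p for p in points if _dist2(p, c0) <= _dist2(p, c1)]
        right = [p for p in points if _dist2(p, c0) > _dist2(p, c1)]
        if not left or not right:
            break
        new0, new1 = _mean(left), _mean(right)
        if new0 == c0 and new1 == c1:
            break  # assignments have converged
        c0, c1 = new0, new1
    return left, right


def bisecting_kmeans(points: Sequence[Point], k: int) -> List[List[Point]]:
    """Repeatedly bisect the cluster with the largest SSE until k clusters exist."""
    clusters: List[List[Point]] = [list(points)]
    while len(clusters) < k:
        splittable = [c for c in clusters if len(c) > 1]
        if not splittable:
            break
        worst = max(splittable, key=_sse)
        left, right = _two_means(worst)
        if not left or not right:
            break  # all points identical; cannot split further
        clusters.remove(worst)
        clusters.extend([left, right])
    return clusters


# Toy 2-D example with three visually obvious groups.
toy_points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10), (50, 50), (51, 50)]
toy_clusters = bisecting_kmeans(toy_points, 3)
```

Each resulting cluster would then serve as one of the d home categories used by the diversification objective.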

To verify that our clustering method learned meaningful categories, we first visualize the differences between the clusters. To conveniently visualize multiple clusters in multi-dimensional space we use the parallel coordinates method shown in Figure 2 [3]. We can see that different clusters group various types of homes based on their size and price range as one might expect.

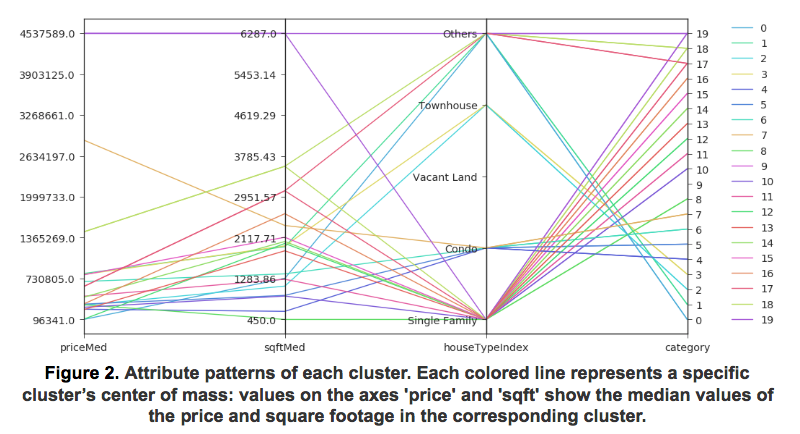

In addition, we have also compared the histograms of available homes in several distinct real estate markets, namely New York City, San Francisco, Columbus and Kansas. Figure 3 visualizes the home categories’ histograms, clearly depicting the varied nature of these distinct markets.

We compute two different types of user preferences here. The first is a global user category preference, which is not personalized and is instead computed from all users’ click history. The second is a personalized category preference, which takes the global preference as a prior and then adjusts the estimates for each user individually based on their past click history. Let’s consider each in turn.

Global user preference wg is defined as the preference for home categories of the average user. We use the per-category click-through rate (CTR) across our entire training population to compute wg. To make the estimates more robust to low sample counts for rare home categories, we smooth them with a Dirichlet prior.

When computing personalized user preferences, we leverage the precomputed user profile, which contains a history of all recent user clicks and can therefore be used to estimate a user’s interests cu for each listing category. To improve our estimates for users with limited click history, Bayesian estimation is again applied. Based on the method described in [1], we use a Dirichlet distribution as a prior with parameter α0, where α0 is assumed to be the global user preference (our wg). Then the personalized user preference can be computed as:

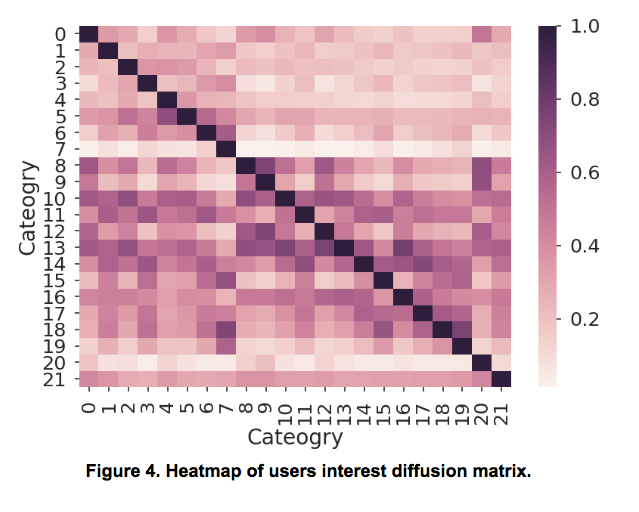
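As a rough sketch of the personalized estimate, the snippet below computes the standard posterior mean of a Dirichlet prior updated with a user's per-category click counts. The function name and the `prior_strength` parameter (how many pseudo-clicks the global preference is worth) are hypothetical and not taken from [1] or from our production system.

```python
from typing import List, Sequence


def personalized_preference(click_counts: Sequence[float],
                            global_pref: Sequence[float],
                            prior_strength: float = 1.0) -> List[float]:
    """Posterior mean of a Dirichlet prior (alpha0 = prior_strength * w_g)
    after observing the user's per-category click counts c_u."""
    alpha = [prior_strength * g for g in global_pref]
    total = sum(click_counts) + sum(alpha)
    return [(c + a) / total for c, a in zip(click_counts, alpha)]


w_g = [0.5, 0.3, 0.2]

# A brand-new user with no clicks falls back to the global preference.
new_user = personalized_preference([0, 0, 0], w_g)

# A user with eight clicks in category 1 is pulled strongly toward that category.
active_user = personalized_preference([0, 8, 0], w_g, prior_strength=2.0)
```

The larger the user's click history relative to `prior_strength`, the less the estimate depends on the global preference.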

In addition, users’ interests in different categories are commonly correlated. For example, if 80% of users who clicked listings in category i also clicked listings in category j, there is a high chance that a user who has only clicked listings in category i might also be interested in category j. To give these users the opportunity to interact with listings from different categories, we add a user interest diffusion matrix M. Each element mij represents the proportion of users who clicked listings in category j and also clicked listings in category i. This matrix M is multiplied with the user preference estimates, effectively diffusing some of a user’s preference to other, similar categories. Figure 4 shows the user interest diffusion matrix generated from users’ click history.

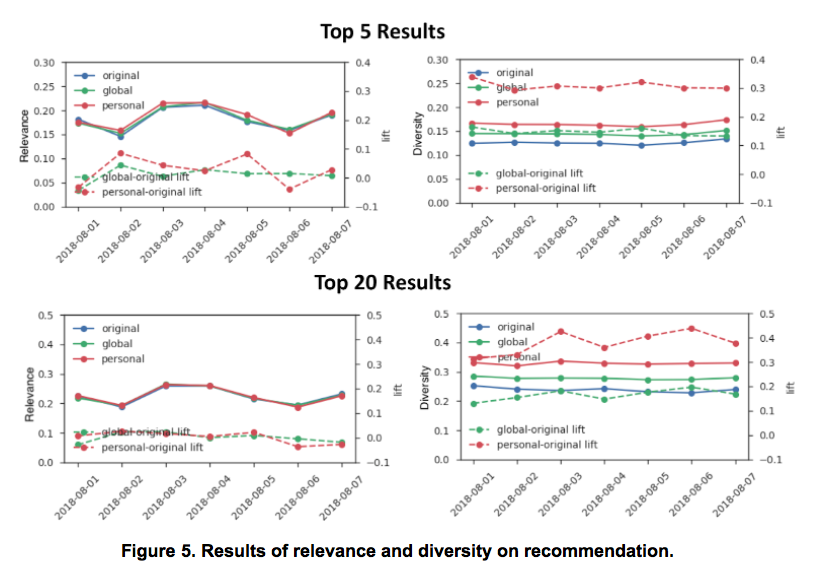
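The diffusion step can be illustrated with a small sketch. Here each user is represented by the set of categories they clicked, mij is computed as the share of users who clicked category j that also clicked category i, and the diffused preference is M multiplied by w. The final renormalization to sum to one and the function names are assumptions for this example.

```python
from typing import List, Sequence, Set


def diffusion_matrix(user_clicks: Sequence[Set[int]], d: int) -> List[List[float]]:
    """m[i][j] = fraction of users who clicked category j that also clicked category i."""
    clicked = [0] * d
    both = [[0] * d for _ in range(d)]
    for categories in user_clicks:
        for j in categories:
            clicked[j] += 1
            for i in categories:
                both[i][j] += 1
    return [[both[i][j] / clicked[j] if clicked[j] else 0.0 for j in range(d)]
            for i in range(d)]


def diffuse(M: Sequence[Sequence[float]], w: Sequence[float]) -> List[float]:
    """Multiply M by the preference vector w, then renormalize (assumed step)."""
    raw = [sum(m_ij * w_j for m_ij, w_j in zip(row, w)) for row in M]
    total = sum(raw)
    return [x / total for x in raw] if total else list(w)


# Four toy users: two clicked categories 0 and 1, one only 0, one only 2.
clicks = [{0, 1}, {0, 1}, {0}, {2}]
M = diffusion_matrix(clicks, d=3)

# A user whose estimated preference is entirely in category 0
# receives some weight on the correlated category 1 and none on category 2.
diffused = diffuse(M, [1.0, 0.0, 0.0])
```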

To evaluate the performance of the diversity model offline, we consider both the relevance and the diversity of the re-sorted recommendations. For relevance, we use NDCG as our metric. For diversity we measure the average proportion of categories with at least a single home selected up to a given rank. Ideally, the re-sorted recommendations would have a high diversity compared to the original recommendations while maintaining a comparable level of relevance (i.e. neutral NDCG lift). We compared the relevance and diversity of our recommendations for a sample of users over a period of 7 days. Both NDCG and diversity are evaluated for the top 5 and top 20 recommendations. The results are shown in Figure 5. We can see that both global and personalized diversity models maintain similar relevance to the original ranking based on the NDCG metric; i.e., the NDCG lift remains neutral within bounds. However, both the global and personalized diversity methods provide significant lift in our diversity metric, with the personalized diversity model especially lifting the diversity by ~30% and ~40% at top 5 and top 20 recommendations, respectively.
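For readers who want to reproduce this kind of offline evaluation, the two metrics can be sketched as follows. The snippet assumes binary click labels as relevance and defines diversity at rank k as the number of distinct categories in the top k divided by the total number of categories; both choices, and the function names, are assumptions for this example. Per-user values would then be averaged over the evaluation sample.

```python
import math
from typing import Sequence


def dcg_at_k(relevances: Sequence[float], k: int) -> float:
    """Discounted cumulative gain over the top-k positions."""
    return sum(r / math.log2(rank + 2) for rank, r in enumerate(relevances[:k]))


def ndcg_at_k(relevances: Sequence[float], k: int) -> float:
    """DCG normalized by the DCG of the ideal (descending) ordering."""
    ideal = dcg_at_k(sorted(relevances, reverse=True), k)
    return dcg_at_k(relevances, k) / ideal if ideal > 0 else 0.0


def category_coverage_at_k(categories: Sequence[int], k: int, num_categories: int) -> float:
    """Share of all categories that appear at least once in the top-k list."""
    return len(set(categories[:k])) / num_categories


# One user's ranked list: click labels and category ids, in ranked order.
clicked = [1, 0, 1]
ranked_categories = [0, 0, 1, 2]

ndcg3 = ndcg_at_k(clicked, 3)
coverage2 = category_coverage_at_k(ranked_categories, 2, num_categories=4)
coverage4 = category_coverage_at_k(ranked_categories, 4, num_categories=4)
```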

In this blog post, we explored the application of a personalized diversity algorithm first proposed by researchers at Amazon [1]. The method improves the ability of Zillow’s customers to explore diverse real estate market options. We have demonstrated that this diversity model improves the diversity of home recommendations while keeping relevance, as measured by NDCG, roughly neutral.

If you find this interesting and if you would like to apply your data science and machine learning skills to our large-scale, rich and continuously evolving real-estate data, please reach out – we are hiring in multiple roles.

[1] Teo et al. Adaptive, Personalized Diversity for Visual Discovery. RecSys ’16. 2016(4): 35-38.

[2] M. Steinbach, G. Karypis and V. Kumar. A comparison of document clustering techniques. Workshop on Text Mining, KDD, 2000.

[3] A. Inselberg. Multidimensional detective. Proceedings of the IEEE Symposium on Information Visualization, pp. 100–107, 1997.