
Using SageMaker for Machine Learning Model Deployment with Zillow Floor Plans

In Zillow Floor Plan: Training Models to Detect Windows, Doors and Openings in Panoramas, we described how we trained our ML model to help generate Zillow Floor Plans. In this post we are going to describe how we designed and implemented the infrastructure that would deploy and serve our models.

Our goal was to build an infrastructure that was:

- Easy to integrate into our services and pipelines

- Scalable and reliable

- Easy to extend with new models

- Reusable across the Zillow infrastructure

We investigated multiple options, from using dedicated servers within Zillow to running our inference servers on AWS EC2. In the end, we chose SageMaker for deploying and serving our ML models. Although SageMaker carries a price markup of around 40%¹, its ease of use, varied features, and reliability justified the cost. In the following sections we give a broad overview of SageMaker, describe how we built this infrastructure, and finish with the current status of this project and our future plans.
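For reference, the roughly 40% figure follows directly from the hourly rates given in footnote 1 (ml.m5.large on SageMaker versus m5.large on plain EC2):

```latex
\text{markup} = \frac{0.134 - 0.096}{0.096} = \frac{0.038}{0.096} \approx 0.396 \approx 40\%
```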

Because the whole infrastructure is built around the AWS ecosystem, it helps to briefly describe the services whose names and abbreviations appear throughout this post:

- Lambda is AWS's function-as-a-service (FaaS) offering, which runs code without requiring you to manage any servers or resources.

- Step Functions coordinates multiple AWS services into serverless workflows. Each step can be a Lambda or a SageMaker operation.

- S3 is AWS's storage solution.

- DynamoDB is AWS's non-relational database.

- CloudWatch is a monitoring and management service for AWS resources.

- ECR is AWS's container registry.

- EC2 is AWS's compute cloud, which provides virtual server instances.

What is SageMaker?

SageMaker is AWS's fully managed, end-to-end platform that covers the entire ML workflow across many different frameworks. It offers services to:

- Label data

- Choose an algorithm from the model store and use it

- Train and optimize an ML model

- Deploy and serve your own ML models, make predictions, and take action

We already had local machines that we used to train our ML models. For this particular application, we were focused more on inference than on periodic retraining cycles. What is more, the GPU instances available in SageMaker were too expensive relative to the projected gain in accuracy from exhaustively retraining our ML models. Therefore, we decided to use SageMaker only to deploy and serve our ML models.

Choosing the right features

SageMaker has a plethora of features, which makes it a good candidate for productizing ML models. It offers both online and offline inference. It is built on top of EC2, so many CPU and GPU instance types are available. In addition to standard EC2 instances, SageMaker offers Elastic Inference, which attaches GPU-powered inference acceleration to a CPU instance. This avoids paying for a full GPU instance and can significantly reduce cost.

Our main reason for choosing SageMaker was the fact that it is highly integrated with other AWS services and most of our services were already built within the AWS ecosystem.

However, this many features and options can be quite confusing. To keep things simple, we asked ourselves the following questions to choose the most cost-effective solution:

1- Do we need a live service for inference requests?

2- How much time do we have between the data generation and the actual use of predictions?

3- If we choose offline inference, how should we bundle the input data?

Online inference is enabled by creating an endpoint that responds to inference requests. Creating the host behind the endpoint takes around 10 minutes; once it is up, it serves live inference. The endpoint accepts input images stored in S3, or images sent directly with the inference request as an “application/x-image” payload.
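As an illustration of the online path, a request to a live endpoint with a raw image body could be assembled as below. This is a minimal sketch assuming boto3's `sagemaker-runtime` client and its `invoke_endpoint` call; the endpoint name `wdo-endpoint` is hypothetical, and the function only builds the arguments so it runs without AWS access.

```python
def build_invoke_request(endpoint_name: str, image_bytes: bytes) -> dict:
    """Return keyword arguments for sagemaker-runtime's invoke_endpoint.

    The image is sent directly in the request body as application/x-image.
    """
    if not image_bytes:
        raise ValueError("image_bytes must not be empty")
    return {
        "EndpointName": endpoint_name,
        "ContentType": "application/x-image",  # raw image in the request body
        "Accept": "application/json",           # ask the container for JSON predictions
        "Body": image_bytes,
    }


# Usage with AWS credentials configured (not executed here):
#   import boto3
#   runtime = boto3.client("sagemaker-runtime")
#   with open("pano.jpg", "rb") as f:
#       response = runtime.invoke_endpoint(**build_invoke_request("wdo-endpoint", f.read()))
#   predictions = response["Body"].read()
```

Keeping request construction separate from the network call makes it easy to unit test, and the same payload shape works whichever client sends it.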

On the other hand, offline inference, or batch transform, comes in handy when the application does not require live inference and all the data is already in S3. It takes a list of input images and stores the inference results as JSON objects in S3.
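Batch transform writes one output object per input object, named after the input file with `.out` appended. A small helper that predicts where each prediction will land and decodes it might look like the sketch below. It assumes the sub-path under the input prefix is mirrored under the output path and that the model server returns JSON; the prefixes and keys in the usage are hypothetical.

```python
import json
import posixpath


def expected_output_key(input_key: str, input_prefix: str, output_prefix: str) -> str:
    """Map an input object key to the key batch transform is expected to write.

    Assumes the relative path under input_prefix is mirrored under
    output_prefix and that '.out' is appended to the file name.
    """
    if not input_key.startswith(input_prefix):
        raise ValueError(f"{input_key!r} is not under {input_prefix!r}")
    relative = input_key[len(input_prefix):].lstrip("/")
    return posixpath.join(output_prefix, relative + ".out")


def parse_prediction(body: bytes) -> dict:
    """Decode one .out object, assuming the model server returned JSON."""
    return json.loads(body.decode("utf-8"))


# Usage (hypothetical keys):
#   expected_output_key("panos/tour-123/pano_01.jpg", "panos/", "predictions")
#   -> "predictions/tour-123/pano_01.jpg.out"
```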

In this project, we detect windows, doors, and openings in panoramic images. Beyond generating high-fidelity data that can be used for many other purposes, as described earlier (such as sunlight simulation and automatic room merging), our main goal is to automate floor plan generation, reducing its time and cost.

We ran numerous experiments by changing different parameters:

- SageMaker parameters
  - Online vs. offline inference
  - EC2 instance type
  - Instance count

- Internal parameters
  - Batch size
  - Current and projected 3D tour data
  - SLA in floor plan generation

After an extensive period of investigation and experimentation, we decided to use batch transform on CPU instances. It was the most cost-effective solution for our business needs because:

- It is feasible and adequate. We did not need real-time inference, as there is enough time between when the panoramas are created and when the predictions are used.

- It is an offline service, so we did not need to keep a service online and make sure it had enough instances. AWS takes care of everything.

- It is cheaper. We pay only for the time the batch transform jobs take.

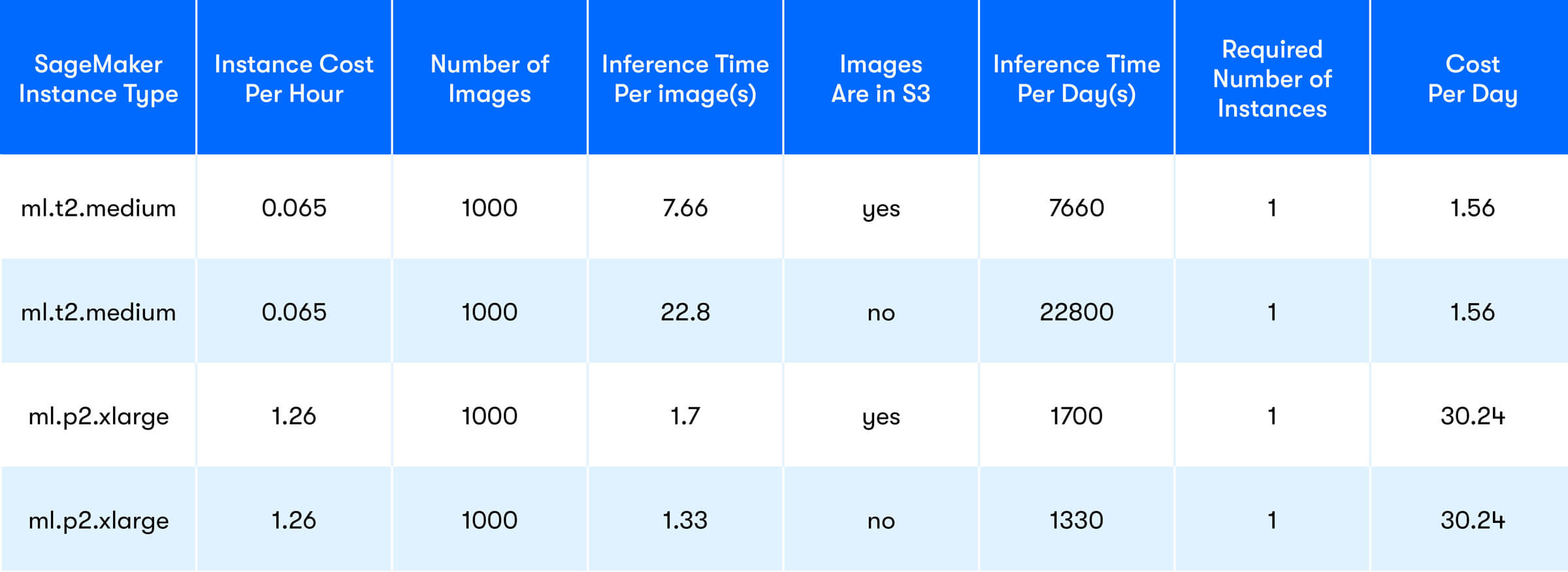

An example cost analysis

There are three types of costs that come with using SageMaker: SageMaker instance cost, ECR cost to store Docker images, and data transfer cost. Compared to the instance cost, ECR ($0.1 per GB per month)² and data transfer ($0.016 per GB in or out) costs are negligible. What is more, if we used prebuilt AWS Docker images and stored the data in S3, we would not need to pay for either of them.
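To see why these ancillary costs are small, the quoted rates can be plugged into a quick estimate. This is a minimal sketch: the rates come from the paragraph above, while the Docker image size and daily data volumes in the usage line are hypothetical placeholders, not our measured numbers.

```python
ECR_USD_PER_GB_MONTH = 0.10   # ECR storage rate quoted in the text
TRANSFER_USD_PER_GB = 0.016   # data transfer rate quoted in the text (in or out)


def ancillary_costs(image_size_gb: float, daily_in_gb: float,
                    daily_out_gb: float, days: int = 30) -> dict:
    """Estimate monthly ECR storage and data-transfer cost in USD."""
    ecr = image_size_gb * ECR_USD_PER_GB_MONTH
    transfer = (daily_in_gb + daily_out_gb) * TRANSFER_USD_PER_GB * days
    return {"ecr": ecr, "transfer": transfer, "total": ecr + transfer}


# Hypothetical inputs: a 5 GB Docker image, ~5 GB of panoramas in per day,
# and ~0.1 GB of JSON predictions out per day.
print(ancillary_costs(image_size_gb=5, daily_in_gb=5, daily_out_gb=0.1))
```

Under these assumptions the total is a few dollars per month, which is small next to an instance that is billed by the hour.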

Assuming that the SageMaker instance is the only cost, Tables 1 and 2 show the rough projected daily cost of online and offline inference, respectively, for 1,000 images given the inference time of a particular ML model.

Table 1 - Projected SageMaker cost for online inference


Table 2 - Projected SageMaker cost for offline inference


For batch inference, it is important to account for the fixed cost of booting up a Docker container and installing the necessary packages, which may take 1-2 minutes each time a job starts.
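The arithmetic behind such projections can be sketched as follows. This is a minimal model, assuming billing proportional to running time, the ml.m5.large rate from footnote 1, and the upper end of the 1-2 minute startup overhead mentioned above. The per-image inference time and the number of jobs per day in the usage line are hypothetical placeholders.

```python
HOURLY_RATE_USD = 0.134  # ml.m5.large rate from footnote 1


def online_daily_cost(instance_count: int = 1, rate: float = HOURLY_RATE_USD) -> float:
    """An endpoint is billed for every hour it is up, regardless of traffic."""
    return instance_count * 24 * rate


def batch_daily_cost(images_per_day: int, seconds_per_image: float, jobs_per_day: int,
                     startup_seconds: float = 120, instance_count: int = 1,
                     rate: float = HOURLY_RATE_USD) -> float:
    """Batch transform is billed only while jobs run, including container startup.

    Inference work is split across instances, but every instance pays the
    startup overhead once per job.
    """
    inference_s_per_instance = images_per_day * seconds_per_image / instance_count
    startup_s_per_instance = jobs_per_day * startup_seconds
    billed_hours = instance_count * (inference_s_per_instance + startup_s_per_instance) / 3600
    return billed_hours * rate


# Hypothetical workload: 1,000 images/day at 2 s each, spread over 10 jobs.
print(round(online_daily_cost(), 3), round(batch_daily_cost(1000, 2.0, 10), 3))
```

The model makes the trade-off visible: an endpoint's cost is fixed by uptime, while batch cost scales with the actual work plus a per-job startup tax, so batching more images per job lowers the overhead.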

Integrating SageMaker into Our Pipeline

Deploying ML models in SageMaker

To run batch inference, SageMaker requires a SageMaker model. A SageMaker model can be thought of as a configuration that includes information about the EC2 instance to be created and the location of the model artifacts.
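With the low-level API, a SageMaker model corresponds to boto3's `create_model` call. The sketch below only assembles that request; the model name, ECR image URI, S3 artifact path, and IAM role ARN in the usage are hypothetical placeholders. Note that in this API the model records the container image and artifact location, while the instance type and count are supplied when each transform job is created.

```python
from typing import Optional


def build_create_model_request(model_name: str, image_uri: str, model_data_url: str,
                               role_arn: str, env: Optional[dict] = None) -> dict:
    """Return keyword arguments for boto3's sagemaker create_model."""
    return {
        "ModelName": model_name,
        "PrimaryContainer": {
            "Image": image_uri,              # Docker image stored in ECR
            "ModelDataUrl": model_data_url,  # model.tar.gz with artifacts in S3
            "Environment": dict(env or {}),  # optional container environment variables
        },
        "ExecutionRoleArn": role_arn,        # IAM role SageMaker assumes
    }


# Usage (hypothetical values, not executed here):
#   import boto3
#   boto3.client("sagemaker").create_model(**build_create_model_request(
#       "wdo-v1",
#       "123456789012.dkr.ecr.us-west-2.amazonaws.com/wdo:latest",
#       "s3://example-bucket/wdo/model.tar.gz",
#       "arn:aws:iam::123456789012:role/example-sagemaker-role"))
```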

The EC2 instance configuration covers the number of instances, the Docker image in ECR to use, and CPU/GPU information. Model artifacts include a frozen/saved model and the inference code, packaged in the format the serving container expects. Further customization may be required depending on the underlying ML framework and the Docker image being used. You can also write custom code to preprocess/postprocess data and to control how the model is served. Examples can be found in AWS's SageMaker container repositories on GitHub.
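The SageMaker TensorFlow Serving container supports this kind of customization through an optional `code/inference.py` in the model artifact that defines `input_handler` and `output_handler`. The sketch below assumes a JSON request and a hypothetical output format in which each image's prediction is a list of detections carrying a `score` field; the threshold is illustrative, not our production logic.

```python
"""Hypothetical code/inference.py for the SageMaker TensorFlow Serving container."""
import json

SCORE_THRESHOLD = 0.5  # hypothetical confidence cutoff


def input_handler(data, context):
    """Pass a JSON request body through as the TensorFlow Serving REST payload."""
    if context.request_content_type == "application/json":
        body = data.read().decode("utf-8")
        return body if body else ""
    raise ValueError(f"Unsupported content type: {context.request_content_type}")


def output_handler(response, context):
    """Drop low-confidence detections before results are returned."""
    if response.status_code != 200:
        raise ValueError(response.content.decode("utf-8"))
    predictions = json.loads(response.content)["predictions"]
    kept = [[det for det in image_dets if det["score"] >= SCORE_THRESHOLD]
            for image_dets in predictions]
    return json.dumps({"predictions": kept}), context.accept_header
```

Keeping postprocessing inside the container means every consumer of the batch output sees already-filtered predictions.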

Every batch transform job can be summarized as follows:

- Create an EC2 instance using the image specified in the SageMaker model

- Load the ML model artifacts and start a model server specific to the ML framework

- Given an input dataset, run inference and save the outputs in S3

- Terminate the EC2 instance
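Starting such a job through boto3's `create_transform_job` could look like the sketch below. The request fields are part of that API; the helper names, the S3 paths in the usage, and the job-naming scheme are hypothetical. Job names must be unique and limited to alphanumerics and hyphens (at most 63 characters), so the sketch derives one from the model name, a tour ID, and a timestamp.

```python
import re
import time
from typing import Optional


def make_job_name(model_name: str, tour_id: str, now: Optional[float] = None) -> str:
    """Build a unique, API-safe job name (hypothetical naming scheme)."""
    ts = int(time.time() if now is None else now)
    cleaned = re.sub(r"[^a-zA-Z0-9]+", "-", f"{model_name}-{tour_id}-{ts}").strip("-")
    return cleaned[:63].rstrip("-")


def build_transform_job_request(job_name: str, model_name: str, input_s3_prefix: str,
                                output_s3_path: str, instance_type: str = "ml.m5.large",
                                instance_count: int = 1) -> dict:
    """Return keyword arguments for boto3's sagemaker create_transform_job."""
    return {
        "TransformJobName": job_name,
        "ModelName": model_name,
        "TransformInput": {
            "DataSource": {"S3DataSource": {"S3DataType": "S3Prefix", "S3Uri": input_s3_prefix}},
            "ContentType": "application/x-image",
            "SplitType": "None",  # each image file is one record
        },
        "TransformOutput": {"S3OutputPath": output_s3_path, "Accept": "application/json"},
        "TransformResources": {"InstanceType": instance_type, "InstanceCount": instance_count},
        "BatchStrategy": "SingleRecord",
    }


# Usage (not executed here):
#   import boto3
#   request = build_transform_job_request(make_job_name("wdo", "tour-123"), "wdo-v1",
#                                         "s3://example-bucket/panos/tour-123/",
#                                         "s3://example-bucket/predictions/tour-123/")
#   boto3.client("sagemaker").create_transform_job(**request)
```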

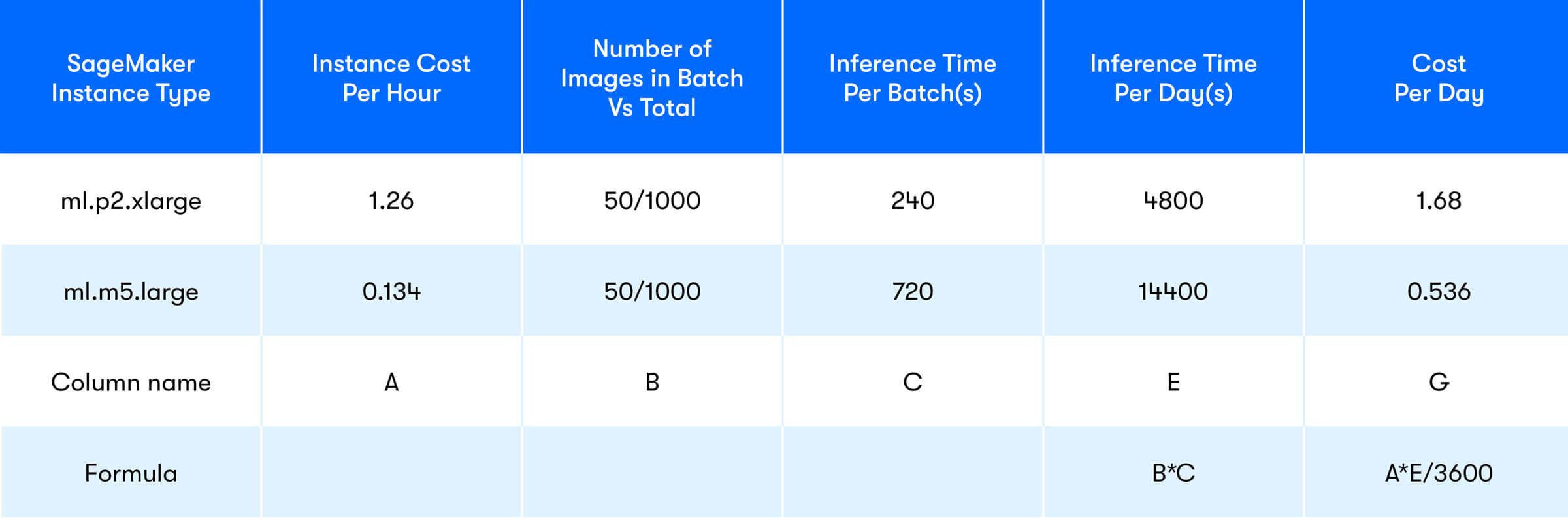

We have touched on EC2 instances and model artifacts, but what are these model servers?

Figure 1 - A block diagram depicting how SageMaker creates a host to serve inference requests via ML model servers.


Machine learning model servers

ML model servers are tools for serving deep learning models trained with ML/DL frameworks. They can run directly on hosts or be used via Docker images, and they set up endpoints to handle RESTful requests. These requests can be for inference or for anything else defined via specialized handler functions.

As shown in Figure 1, they can be considered as a layer between actual model inference and the outside world, which allows RESTful communication.

There are different model servers for different ML frameworks. TensorFlow Serving can be used to serve TensorFlow models, and MXNet Model Server can work with MXNet, Gluon, or PyTorch models. As we trained our windows, doors, and openings (WDO) model with TensorFlow, we used TensorFlow Serving for this project.
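To make the "RESTful layer" concrete: TensorFlow Serving's REST API accepts a POST of `{"instances": [...]}` to `/v1/models/<name>:predict`, by default on port 8501. The sketch below only builds such a request with the standard library; the model name `wdo` and the toy input are hypothetical, and inside SageMaker the serving container fronts this API for you.

```python
import json
import urllib.request


def build_predict_request(model_name: str, instances: list, host: str = "localhost",
                          port: int = 8501) -> urllib.request.Request:
    """Build (but do not send) a TensorFlow Serving REST predict request."""
    url = f"http://{host}:{port}/v1/models/{model_name}:predict"
    body = json.dumps({"instances": instances}).encode("utf-8")
    return urllib.request.Request(url, data=body, method="POST",
                                  headers={"Content-Type": "application/json"})


# Usage against a running server (not executed here):
#   with urllib.request.urlopen(build_predict_request("wdo", [[0.0, 1.0]])) as resp:
#       predictions = json.load(resp)["predictions"]
```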

Running multi-model batch transform jobs automatically

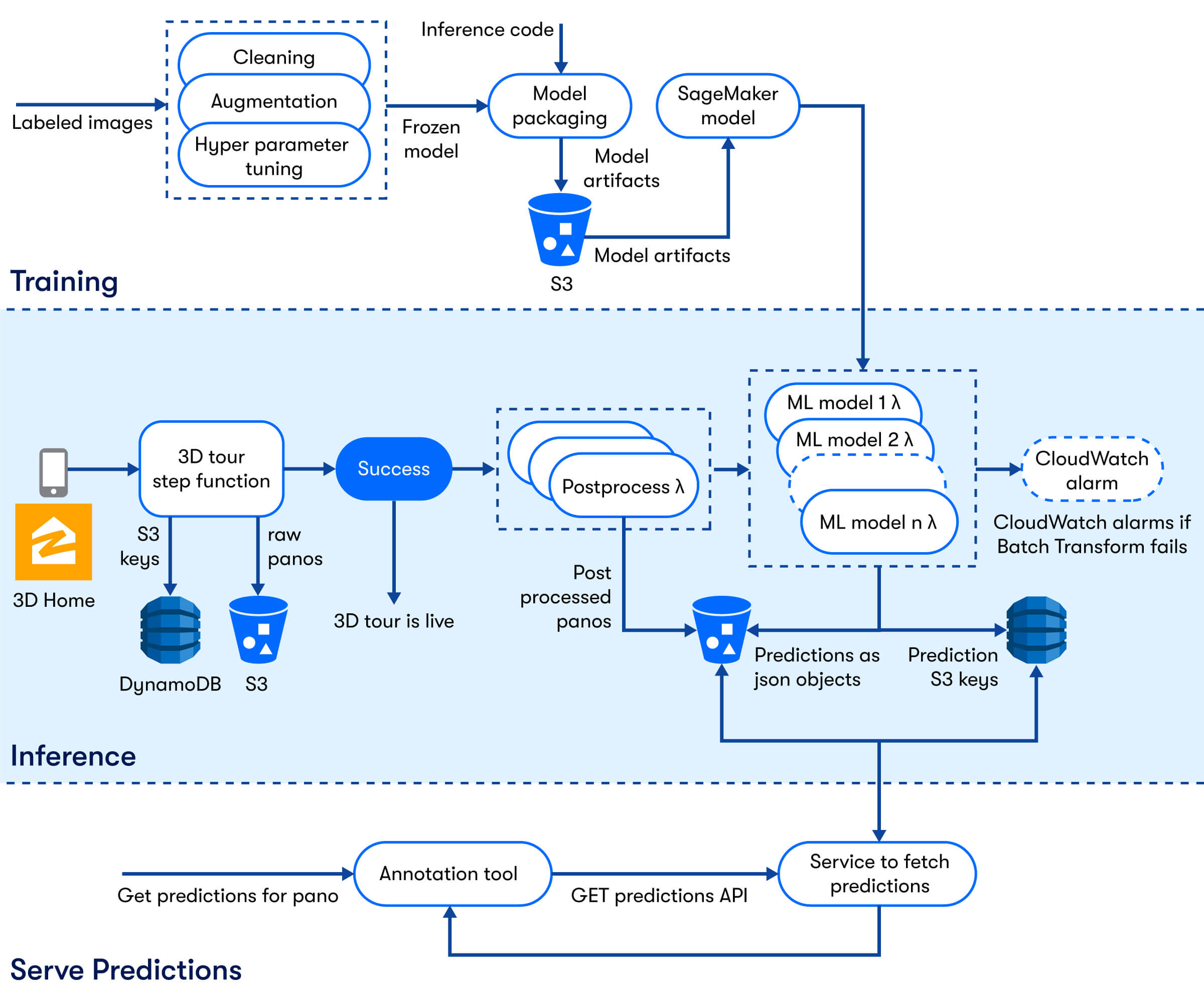

Our pipeline starts with a Zillow 3D Home™ tour. As shown in Figure 2, by the time a 3D Home tour is successfully completed, all of its panoramas have already been uploaded to S3. When a tour also requires a Zillow Floor Plan™, a CloudWatch alarm triggers another step function.

This step function consists of several steps, each made up of atomic operations. First, we preprocessed the input images to clean them and to calculate useful information. Then we ran multiple Lambdas in parallel, one per ML model, to handle inference. These Lambdas are responsible for:

1- preparing data

2- triggering batch transform jobs

3- checking the status of these jobs

4- cleaning/filtering/mapping the batch transform results once the job completes.
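The status-checking step (3) could be a small Lambda like the sketch below. It uses the real `describe_transform_job` call and its `TransformJobStatus` values; the handler name, the `job_name` event field, and the choice to raise on failure are hypothetical. The client is injectable so the logic can be tested without AWS.

```python
TERMINAL_FAILURES = {"Failed", "Stopped"}


def check_status_handler(event, context, sagemaker_client=None):
    """Hypothetical 'check status' Lambda: report a transform job's state.

    Returns the incoming event with a 'status' field added, so the state
    machine can decide whether to wait again or move on.
    """
    if sagemaker_client is None:
        import boto3  # bundled with the AWS Lambda Python runtime
        sagemaker_client = boto3.client("sagemaker")
    job = sagemaker_client.describe_transform_job(TransformJobName=event["job_name"])
    status = job["TransformJobStatus"]  # InProgress | Completed | Failed | Stopping | Stopped
    if status in TERMINAL_FAILURES:
        # Failing the task lets the step function surface the error.
        raise RuntimeError(f"{event['job_name']} ended as {status}: {job.get('FailureReason', '')}")
    return {**event, "status": status}
```

Because the model identity only travels in the event, the same handler works for any model's job.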

With this start-monitor-wait-monitor-end design pattern, we did not pay for a Lambda to run during the whole inference process, which can last up to an hour for large image sets. Each Lambda runs in parallel on the same input images, so additional ML models do not add to the overall processing time. The Lambda is written to be agnostic of the underlying ML model, so adding a Lambda for a future ML model is trivial.
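In Amazon States Language, one branch of this pattern could be expressed roughly as below: a `Wait` state pauses between status checks, so no Lambda is running while the job is in progress, and a `Choice` state loops back until the job completes. All ARNs and state names are hypothetical placeholders. Step Functions also offers a native SageMaker integration (`createTransformJob.sync`) that can replace the manual polling loop.

```python
import json

# Hypothetical Lambda ARN template; replace with real function ARNs.
LAMBDA_ARN = "arn:aws:lambda:us-west-2:123456789012:function:{}"

state_machine = {
    "Comment": "Start-monitor-wait-monitor-end loop for one ML model",
    "StartAt": "PrepareData",
    "States": {
        "PrepareData": {"Type": "Task", "Resource": LAMBDA_ARN.format("prepare-data"),
                        "Next": "StartTransform"},
        "StartTransform": {"Type": "Task", "Resource": LAMBDA_ARN.format("start-transform"),
                           "Next": "WaitForJob"},
        # No Lambda runs while the state machine sits in this Wait state.
        "WaitForJob": {"Type": "Wait", "Seconds": 60, "Next": "CheckStatus"},
        "CheckStatus": {"Type": "Task", "Resource": LAMBDA_ARN.format("check-status"),
                        "Next": "IsJobDone"},
        "IsJobDone": {
            "Type": "Choice",
            "Choices": [{"Variable": "$.status", "StringEquals": "Completed",
                         "Next": "PostProcess"}],
            "Default": "WaitForJob",  # still running: wait and check again
        },
        "PostProcess": {"Type": "Task", "Resource": LAMBDA_ARN.format("post-process"),
                        "End": True},
    },
}

definition = json.dumps(state_machine, indent=2)  # string passed to Step Functions
```

One such branch per model could sit under a `Parallel` state, which matches the one-Lambda-chain-per-model layout described above.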

Finally, we stored the predictions as JSON objects in S3. We also stored the keys of these JSON objects in DynamoDB, so any service that needs the predictions can find them quickly and easily.
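An index entry of this kind could be written with DynamoDB's low-level `put_item`, which expects typed attribute values such as `{"S": "..."}`. The table schema below (a `tour_id` partition key and a `model_name` sort key) and all names are hypothetical; the sketch only builds the item.

```python
def build_prediction_item(tour_id: str, model_name: str, bucket: str, key: str) -> dict:
    """Build a low-level DynamoDB item pointing at one prediction object in S3."""
    return {
        "tour_id": {"S": tour_id},                        # partition key (hypothetical schema)
        "model_name": {"S": model_name},                  # sort key (hypothetical schema)
        "prediction_uri": {"S": f"s3://{bucket}/{key}"},  # where the JSON lives
    }


# Usage (not executed here):
#   import boto3
#   boto3.client("dynamodb").put_item(
#       TableName="example-predictions",
#       Item=build_prediction_item("tour-123", "wdo", "example-bucket", "predictions/tour-123.json"))
```

Keying by tour and model lets a consumer fetch every model's predictions for a tour with a single query on the partition key.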

Figure 2 - A flow chart depicting our pipeline from receiving panoramas from users, running inference to serving prediction results to annotators.


Conclusion and What is next?

As the demand for Zillow 3D Home and other virtual offerings increases, we are realizing the benefits of having invested significant time in the design and development of our infrastructure to ensure scalability. Now that we have a complete, scalable, and reliable infrastructure to serve our ML models, the next step is to design an infrastructure that enables us to automatically retrain models as we collect new data.

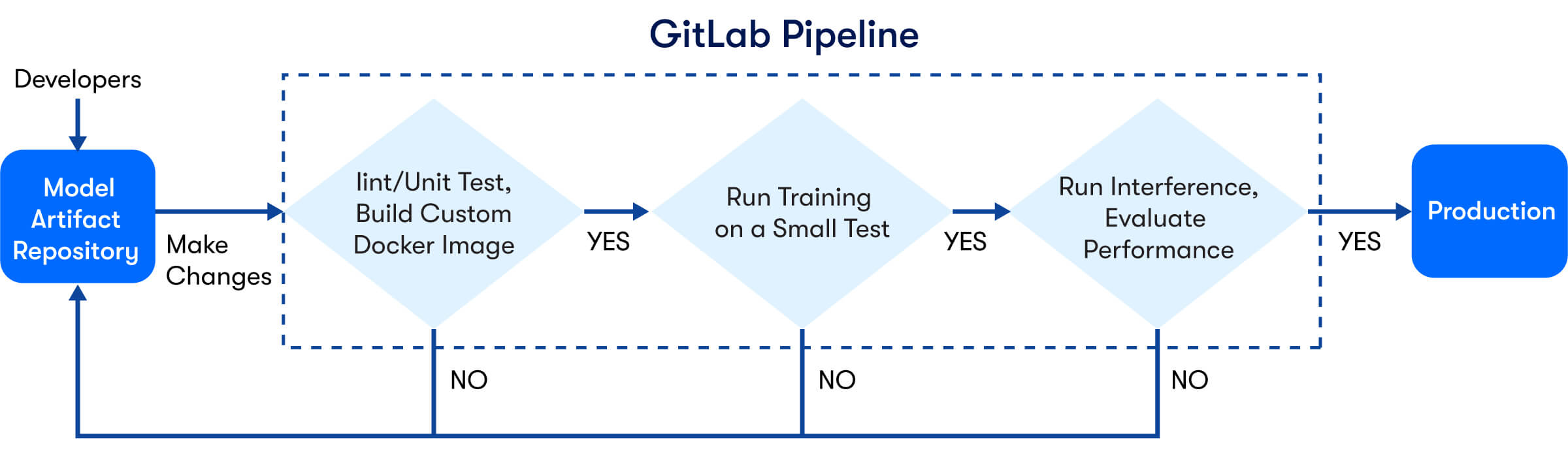

On this front, we recently moved our model artifacts into a repository with a GitLab CI/CD pipeline, as shown in Figure 3. This pipeline performs the following operations to ensure both code quality and model performance:

- Build a custom Docker image per underlying ML framework

- Format/lint the inference code

- Run training/inference

- Check performance of the models based on predefined metrics on an image set
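The final check could be a small gate script that the pipeline runs after inference, failing the job when a metric drops below its threshold. This is a minimal sketch: precision and recall as the metrics, the threshold values, and the counts in `main` are hypothetical stand-ins for whatever an evaluation image set actually produces.

```python
THRESHOLDS = {"precision": 0.80, "recall": 0.75}  # hypothetical minimums


def precision_recall(tp: int, fp: int, fn: int) -> dict:
    """Compute precision and recall from detection counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return {"precision": precision, "recall": recall}


def gate(metrics: dict, thresholds: dict = THRESHOLDS) -> list:
    """Return a description of every metric below its threshold."""
    return [f"{name}={metrics[name]:.3f} < {minimum}"
            for name, minimum in thresholds.items() if metrics[name] < minimum]


def main() -> int:
    # Hypothetical counts; a real job would compute these on the evaluation image set.
    failures = gate(precision_recall(tp=90, fp=10, fn=20))
    for failure in failures:
        print("FAIL:", failure)
    return 1 if failures else 0
```

A CI script would end with `raise SystemExit(main())`, so a non-zero exit code blocks the merge.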

Figure 3 - A flow chart showing our model artifact repository pipeline for CI/CD.


Scheduled retraining is still in the planning stage, and we are currently investigating frameworks such as Kubeflow and MLflow.

¹ This is based on an m5.large EC2 instance ($0.096/hour) vs. an ml.m5.large standard SageMaker batch transform instance ($0.134/hour).

² Taken from https://aws.amazon.com/ecr/pricing/
