At Zillow, we have hundreds of batch ETL jobs that we run throughout the day. The resources required to process our data are in constant flux as our data volumes vary from hour to hour. We process a wide variety of batch data that can arrive hourly or daily, including user activity, push notifications and A/B testing, to name a few. To handle the ebb and flow of our data, we turned to Auto Scaling with EMR.

Most of our ETL jobs use either Spark, Hive or Sqoop depending upon the task. Zillow runs a typical S3 data lake strategy. We use S3 as our HDFS and then spin up clusters for ETL, analytics and machine learning that all point to the same shared data on S3. Separating our compute/memory from storage has allowed us to move fast and provide enough computing power for all of our analytical needs.

Here is what our default EMR cluster for ETL looks like:

- 1 Master node which cannot be auto scaled

- 5 Core nodes, which are mainly used for jobs that need local HDFS. Sqoop is our primary consumer of core nodes. Our cluster uses local disk when Sqooping data out of relational databases and converting it to Parquet (more on why we chose Parquet in a future blog post). We store the data locally because the version of Sqoop we run has a bug where it cannot convert data directly to Parquet. The local HDFS is used as a staging area, then we copy the Parquet data to S3.

- 1 Task node – Perfect for compute power that needs no access to local HDFS.

Scale out rules:

- Core – we auto scale on HDFS utilization. If HDFSUtilization >= 80 for 5 minutes, add nodes.

- Task – we have three rules, based on YARN memory, apps pending and containers pending:

  - If YARNMemoryAvailablePercentage <= 15, add nodes.

  - If AppsPending-Out >= 2, add nodes.

  - If ContainerPending-Out >= 75, add nodes.
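As a rough illustration, the scale-out rules above could be written as EMR auto scaling policy documents along the following lines. This is a sketch, not our production configuration: the thresholds come from the rules above, while the capacity limits, cooldown, evaluation periods for the task rules, step sizes and the helper name `scale_out_rule` are assumptions made for the example.

```python
# Sketch of EMR auto scaling policies built as plain dictionaries.
# Thresholds follow the rules listed above; values marked "assumed" are illustrative.

EMR_NAMESPACE = "AWS/ElasticMapReduce"


def scale_out_rule(name, metric, operator, threshold, unit, nodes_to_add=15):
    """Build one scale-out rule (hypothetical helper, not part of any AWS SDK)."""
    return {
        "Name": name,
        "Action": {
            "SimpleScalingPolicyConfiguration": {
                "AdjustmentType": "CHANGE_IN_CAPACITY",
                "ScalingAdjustment": nodes_to_add,  # assumed step size
                "CoolDown": 300,                    # assumed scale-out cooldown, seconds
            }
        },
        "Trigger": {
            "CloudWatchAlarmDefinition": {
                "Namespace": EMR_NAMESPACE,
                "MetricName": metric,
                "ComparisonOperator": operator,
                "Threshold": threshold,
                "Unit": unit,
                "Statistic": "AVERAGE",
                "Period": 300,            # one 5-minute period
                "EvaluationPeriods": 1,   # assumed for the task rules
                "Dimensions": [{"Key": "JobFlowId", "Value": "${emr.clusterId}"}],
            }
        },
    }


# Core group: scale on HDFS utilization.
core_policy = {
    "Constraints": {"MinCapacity": 5, "MaxCapacity": 20},  # max is assumed
    "Rules": [
        scale_out_rule("HDFSUtilization-Out", "HDFSUtilization",
                       "GREATER_THAN_OR_EQUAL", 80, "PERCENT", nodes_to_add=1),
    ],
}

# Task group: scale on YARN memory, pending apps and pending containers.
task_policy = {
    "Constraints": {"MinCapacity": 1, "MaxCapacity": 100},  # max is assumed
    "Rules": [
        scale_out_rule("YARNMemory-Out", "YARNMemoryAvailablePercentage",
                       "LESS_THAN_OR_EQUAL", 15, "PERCENT"),
        scale_out_rule("AppsPending-Out", "AppsPending",
                       "GREATER_THAN_OR_EQUAL", 2, "COUNT"),
        scale_out_rule("ContainerPending-Out", "ContainerPending",
                       "GREATER_THAN_OR_EQUAL", 75, "COUNT"),
    ],
}

# With boto3 installed and credentials configured, a policy like this could be attached via:
#   boto3.client("emr").put_auto_scaling_policy(
#       ClusterId="j-...", InstanceGroupId="ig-...", AutoScalingPolicy=task_policy)
```

Keeping the rules as data makes it easy to experiment with thresholds and step sizes without touching the code that applies them.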

Our scale-in policy is slightly less aggressive than our scale-out policy. We found that a longer cooldown of 30 to 60 minutes fits our workloads. Initially we tried a 5 minute scale-in cooldown, but found our clusters removing nodes and then adding them back a few minutes later.

The one thing to watch out for is the time it takes to add nodes. We have seen it take anywhere from 10 to 25 minutes to add Spot instances. If a job requires 50 nodes to process data, it may wait up to 25 minutes to get the resources it needs.

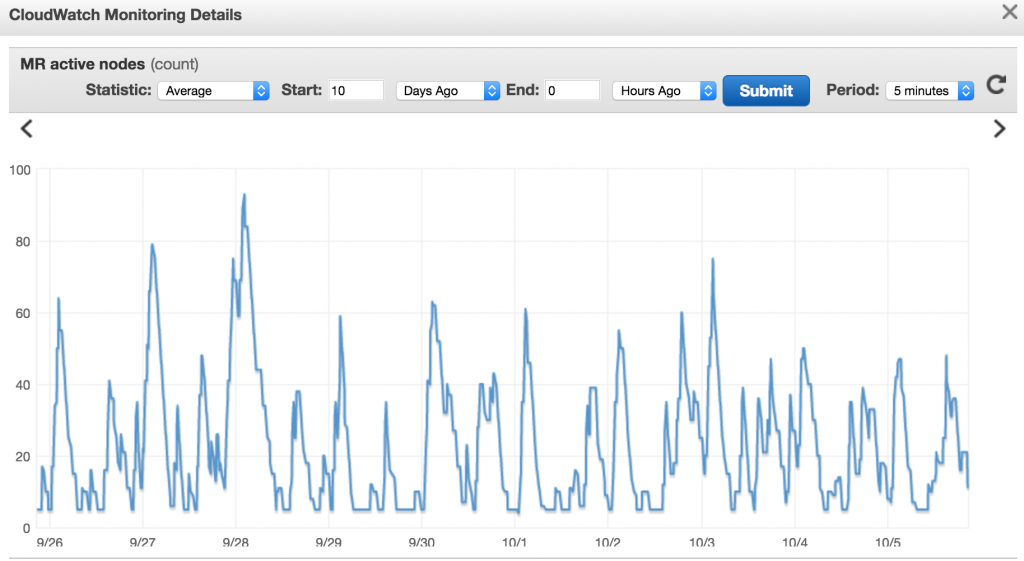

Here is our node usage over the past several days

As you can see, we have several large spikes in the number of nodes on our cluster. We sometimes receive a large batch that can contain up to 10 TB of data. Our analysts want to query this data as soon as possible, so our goal is to make it available fast, but also at a reasonable price.

The big question is how does using Spot with EMR Auto Scaling compare to reserved instance pricing for our EMR cluster?

Hourly rates:

- On-demand hourly rate: $0.40

- 1 year all up front hourly rate: $0.231

- 3 year all up front hourly rate: $0.18

Zillow’s Spot blended rate over the past few months: $0.125

This means we are beating the 3 year reserved hourly rate, plus we are only utilizing nodes when needed. These spot instances are not on 24/7 so we are saving significant amounts of money by only using machines when we need them. In short, we like Auto Scaling with EMR and Spot Instances!
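To make the comparison concrete, here is a small sketch of the arithmetic using the hourly rates listed above. The function names and the 8 hours per day figure are illustrative assumptions, not measurements from our clusters.

```python
# Hourly rates quoted above, in USD.
HOURLY_RATES = {
    "on_demand": 0.40,
    "reserved_1yr_all_upfront": 0.231,
    "reserved_3yr_all_upfront": 0.18,
}
SPOT_BLENDED_RATE = 0.125


def savings_pct(baseline_rate, actual_rate):
    """Percentage saved by paying actual_rate instead of baseline_rate."""
    return (1 - actual_rate / baseline_rate) * 100


def monthly_cost(hourly_rate, hours_per_day, days=30):
    """Cost of one node billed for hours_per_day over a month of `days` days."""
    return hourly_rate * hours_per_day * days


if __name__ == "__main__":
    for name, rate in HOURLY_RATES.items():
        print(f"Spot vs {name}: {savings_pct(rate, SPOT_BLENDED_RATE):.2f}% cheaper per hour")

    # A reserved node is paid for around the clock; a Spot node only while it runs.
    # The 8 hours per day of Spot usage is a hypothetical figure.
    reserved = monthly_cost(HOURLY_RATES["reserved_3yr_all_upfront"], 24)
    spot = monthly_cost(SPOT_BLENDED_RATE, 8)
    print(f"3 year reserved, 24/7: ${reserved:.2f} per node per month")
    print(f"Spot, 8 hours a day:   ${spot:.2f} per node per month")
```

The per-hour comparison understates the difference, because the reserved rate applies to every hour of the month whether or not the node is busy.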

Lessons learned

We originally set our cluster's scale-down behavior to terminate at the instance hour. After a few weeks of running, we started to receive Spark errors. As noted by our colleagues at Trulia in their emr-ad-hoc-spark-development-environment blog, Spark tasks store shuffle output on the local disks. When instances were killed, that shuffle output was lost and our jobs started to crash. To solve this, we switched to terminate at task completion. Amazon is switching to per-second pricing for EC2, so we are no longer concerned about killing resources before an hour is up.
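For reference, the scale-down behavior is a cluster-level setting chosen when the cluster is created. The fragment below sketches how it might look as keyword arguments for boto3's `run_job_flow` call. The cluster name is hypothetical, and the instance, application and role settings that a real call needs are omitted.

```python
# Partial keyword arguments for creating an EMR cluster (sketch only).
cluster_settings = {
    "Name": "etl-cluster",  # hypothetical cluster name
    # Wait for running tasks to finish before removing a node,
    # instead of "TERMINATE_AT_INSTANCE_HOUR".
    "ScaleDownBehavior": "TERMINATE_AT_TASK_COMPLETION",
}

# With boto3 installed, these would be merged with the remaining required arguments:
#   boto3.client("emr").run_job_flow(**cluster_settings, **other_required_settings)
```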

What’s next

Spot has been working out well for us, but how long will this last? We do have some predictability with our jobs. If spot becomes more expensive than on demand we will consider scheduled reserved instances.

Right now it can take 15 – 25 minutes to spin up additional spot EC2 instances for EMR. Auto Scaling with EMR is powerful, but we would prefer that our jobs finish as fast as possible. We will investigate programmatically adding spot instances before heavy workloads hit our cluster.
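One way this could look is sketched below. We have not built it yet, so treat it as an assumption-laden outline. The helper names, the lead time constant and the IDs in the usage comment are all hypothetical. The only AWS call used is the EMR `modify_instance_groups` API, and how a manual resize interacts with an attached auto scaling policy would need to be verified.

```python
from datetime import datetime, timedelta

# Upper end of the Spot provisioning time we have observed (see above).
SPOT_PROVISIONING_LEAD = timedelta(minutes=25)


def prescale_start_time(job_start, lead=SPOT_PROVISIONING_LEAD):
    """Return when to request extra nodes so they are ready by job_start."""
    return job_start - lead


def prescale_task_group(emr_client, cluster_id, instance_group_id, target_count):
    """Ask EMR to resize a task instance group to target_count nodes.

    emr_client is expected to behave like boto3.client("emr").
    """
    emr_client.modify_instance_groups(
        ClusterId=cluster_id,
        InstanceGroups=[
            {"InstanceGroupId": instance_group_id, "InstanceCount": target_count}
        ],
    )


# Example usage (requires boto3 and AWS credentials; IDs are placeholders):
#   import boto3
#   when = prescale_start_time(datetime(2017, 10, 1, 2, 0))
#   # ... schedule the call below to run at `when` ...
#   prescale_task_group(boto3.client("emr"), "j-...", "ig-...", 50)
```

Passing the client in as an argument keeps the function easy to test with a stub and leaves scheduling to whatever orchestration tool already triggers the job.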

Our team is constantly evaluating and playing with how many nodes to add and remove from our cluster with our auto scaling rules. We have played with 10, 15 and 20 and have settled on 15 nodes for now, but this needs further investigation to optimize job performance.