Serving Machine Learning Models Efficiently at Scale at Zillow

Zillow 2022 AI Forum

Zillow was founded with a goal of “turning on the lights” for consumers, and it continues as a core value of the company today. Our mission is to give people the power to unlock life’s next chapter and help them find, make a winning offer on, and purchase their next home through low-friction digital solutions.

This includes putting an estimate of a home’s market value — called the Zestimate® — on every rooftop, giving people the power to make informed decisions about one of the most important transactions of their lifetime. Many Zillow features[1] are powered by data and machine learning (ML), ranging from providing the best home recommendations, to enabling textual home insights on listings, to generating floor plans, to enabling users to perform semantic search, and to optimizing connections with our Premier Agents® partnerships — to list just a few.

As machine learning is central to so many product scenarios, it has been critical for us to invest in platform solutions. Standardized platform solutions not only provide economies of scale for infrastructure but also an easy onboarding user experience that seamlessly integrates with various internal platforms like our data and experimentation platforms as well as monitoring and alerting subsystems. The goal is to help our ML product teams iterate quickly through various stages of the machine learning development lifecycle, shipping continuously and successfully and bringing value to Zillow’s end users.

Before we talk about our solutions in this area, let’s understand the various challenges in the machine learning development lifecycle and why it is important to address them.

The machine learning (model) development lifecycle, as we ML practitioners (applied scientists, data scientists, machine learning engineers) know, is a cyclic iterative process.

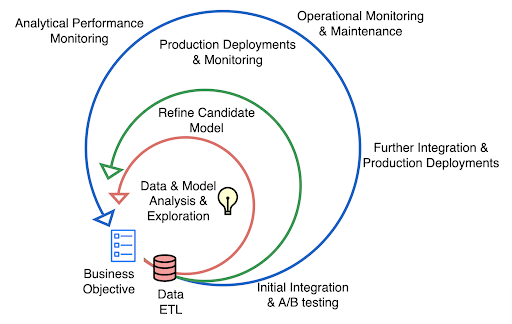

Figure 1: ML development lifecycle as inner, middle and outer loops

Many of us have seen some variation of the ML Lifecycle as expressed in Figure 1, and can identify with the various stages and loops presented here:

Note that the outer loop is also represented as the biggest loop: in practice it takes the most engineering effort to get a model running in a production environment. Even with the best of tooling and a multitude of skills, a lot of friction exists to move within and between these stages and loops easily. This makes the whole process of ML development a very prolonged and strenuous one. A similar idea is conveyed in this paper[2].

In this blog post, we talk about the serving layer of our AI platform (AIP) and focus on the stages of the lifecycle in which trained models transition into production (the outer loop). The emphasis is on how we enable our ML practitioners to easily and rapidly deploy models as online web services that serve predictions at low latency under Zillow-scale traffic to power our business scenarios.

For an ML model to be deployed in production, we’ll need to add substantial custom business logic to serve it. This includes preprocessing the incoming requests, getting them in the format compatible with the model, performing feature extraction on the fly, potentially enriching the request with some preprocessed cached features, and — when necessary — post-processing the output. Sometimes we even need to dynamically adjust the core model scoring orchestration logic (e.g., score the request with an optional additional model based on the output of a previous model).
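To make the steps above concrete, here is a minimal sketch of such scoring orchestration in plain Python. Everything in it is hypothetical and chosen for illustration only: the feature names, the in-memory cache, the two toy models, and the threshold rule for invoking the second model are assumptions, not the logic of any real Zillow service.

```python
from typing import Callable, Dict, Optional

Features = Dict[str, float]
Model = Callable[[Features], float]

# Hypothetical cache of precomputed features, keyed by home id.
FEATURE_CACHE: Dict[str, Features] = {"home-1": {"area_price_index": 0.4}}


def preprocess(request: dict) -> Features:
    """Convert the raw request into the numeric format the model expects."""
    return {"sqft": request["sqft"] / 1000.0, "beds": float(request["beds"])}


def enrich(features: Features, home_id: str) -> Features:
    """Merge cached features (if any) into the features computed on the fly."""
    return {**features, **FEATURE_CACHE.get(home_id, {})}


def primary_model(f: Features) -> float:
    """Toy stand-in for a trained model."""
    return 0.1 * f["sqft"] + 0.1 * f["beds"] + f.get("area_price_index", 0.0)


def secondary_model(f: Features) -> float:
    """Toy stand-in for an optional second-stage model."""
    return 0.25


def score(request: dict, primary: Model, secondary: Optional[Model] = None,
          threshold: float = 0.5) -> dict:
    """Preprocess, enrich, score, optionally re-score, then post-process."""
    features = enrich(preprocess(request), request["home_id"])
    result = primary(features)
    used_secondary = False
    # Dynamic orchestration (assumed rule): consult the second model only
    # when the first model's output reaches the threshold.
    if secondary is not None and result >= threshold:
        result = secondary(features)
        used_secondary = True
    # Post-process into a client-facing payload.
    return {"score": round(result, 3), "used_secondary": used_secondary}
```

Even in this toy form, most of the code is business logic around the model rather than the model itself, which is why a prebuilt model server with a fixed request path is a poor fit.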

All of this requires a customizable serving container solution that allows the runtime environment and scoring orchestration logic to be customized, rather than a prepackaged, prebuilt model server. While prebuilt model servers are popular in many industry solutions, they offer a restricted runtime environment and limited flexibility for custom business and scoring orchestration logic.

As if this weren’t complicated enough, all of it needs to happen consistently across dev and production settings, with high-quality, performant code (low query response latency) that is continuously integrated, continuously deployed, and monitored for anomalies in system and model metrics.

All of this often needs to integrate with other data and experimentation platforms. The problem is multidisciplinary, requiring deep expertise in both modeling and software engineering. This gives rise to what we call a “triple friction” problem:

Without a flexible platform, this process repeats with every project across various teams. As a result, a lot of time is consumed before models are successfully deployed in production, which delays their impact on the customer experience and business metrics.

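After years of dealing with these friction points, our take is that we need to solve the triple friction problem with a two-part centralized platform solution: a user layer that makes model server creation and deployment easy for ML practitioners, and a backend layer that serves models efficiently at scale.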

In addition to solving these two problems, a centralized platform solution also provides economies of scale for infrastructure, standardized and easy onboarding through cookie-cutter projects and CI/CD pipelines, as well as seamless integrations with data, experimentation, logging, monitoring and alerting platforms and solutions. For product teams, if the platform achieves our goals, then timelines for deployment become more predictable, and AI-powered product roadmaps become more reliable.

A lot of prominent OSS (Open-Source Software) and vendor solutions have evolved over the last couple of years to support the end-to-end machine learning lifecycle, offering a variety of strengths and capabilities. It’s important to know that these solutions have been approaching the problem from different directions — some of them are more focused on production pipelines, some more on the experimentation lifecycle.

At Zillow we have actively integrated some of these platforms and solutions, which unlock huge capabilities for us without having to reinvent the wheel. Nonetheless, we invest heavily in making such third-party solutions operational at scale, compatible with our Zillow ecosystem and accessible to our internal users.

Focusing on serving ML models, some existing solutions do implement the requirements of our two-part platform to a certain extent, but not sufficiently, especially when it comes to ease of use by ML practitioners. For example, for model server creation and deployment, the existing solutions provide ways to abstract the web server creation and deployment process through configuration files, libraries and/or CLI tools. However, the end-to-end experience generally still requires developing a good level of software engineering expertise with low-level system concepts exposed (such as the setting and serialization of environment variables).

Deployments and the overall experience are primarily built for engineers. Sometimes there are also unnecessary restrictions, such as requiring the model and serving logic to be packed in a special format. Many solutions provide prebuilt servers without really solving the problem that real-life models still require a lot of custom code and dependencies. Overall, the model server creation and deployment experience still involves a steep engineering learning curve, which creates a lot of conceptual overhead for ML practitioners.

For scale and performance, most industry solutions do promise good availability and request success rates. However, our load testing results show that latency, especially at the long tail (P90/P95), can be compromised due to the special characteristics of ML serving.
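As a reminder of why long-tail percentiles matter, the sketch below computes P50, P90 and P95 with the nearest-rank method. The latency samples are made up for illustration and are not measurements from any load test.

```python
import math
from typing import Sequence


def percentile(samples: Sequence[float], p: float) -> float:
    """Nearest-rank percentile: the smallest sample such that at least
    p percent of all samples are less than or equal to it."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[rank - 1]


# Hypothetical request latencies in milliseconds: most are fast, two are slow.
latencies_ms = [12, 14, 15, 13, 16, 14, 15, 13, 250, 400]

p50 = percentile(latencies_ms, 50)  # typical request
p90 = percentile(latencies_ms, 90)  # long tail
p95 = percentile(latencies_ms, 95)  # longer tail
```

In this made-up sample the median stays near the fast requests while P90 and P95 land on the slow ones, which is the kind of gap that an availability or success-rate figure does not reveal.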

Our AI platform team’s vision is to create a cohesive end-to-end ML platform for Zillow that provides deep integration with our business and infrastructure while leveraging open source ML platform solutions and tools when appropriate.

Having done a thorough evaluation and analysis of our requirements and of what works best with our engineering ecosystem, operations, goals and vision, we have integrated with parts of the prominent open source project Kubeflow, which offers a very powerful toolkit for running ML workloads on Kubernetes. We have also actively integrated Knative and KServe as our model serving backend. More on this follows in the later section on our technical stack.

Over our development and customer feedback cycles, we also realized the potential of Metaflow and appreciated how closely its design philosophy matches ours: successful projects are delivered when ML practitioners can build, improve, and operate end-to-end workflows independently, focusing on data science and less on engineering. We really liked the Pythonic syntax, the concepts of steps and flows, and the ease of using decorators, and have adopted it for our batch workflows with our own orchestration layer, zillow-metaflow.

Note that these OSS solutions offer a good starting point, though an extensive amount of engineering is required to make them operations-ready, adapt to Zillow infrastructure, meet our customers’ SLAs, and more importantly to create a “paved path” that reduces the above-mentioned frictions to levels that help with easy transitions through the ML lifecycle stages to production deployment. We will be writing separate blog posts on our integrations with these OSS solutions soon and highly recommend exploring these technologies.

Now, let’s take a look at the applications of these solutions as they apply to the problem of deploying ML models as services.

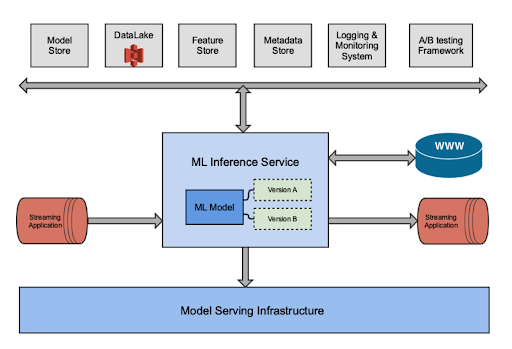

What does it mean when we say model as a service? Typically that’s a machine learning model (or various versions of it) deployed as a web service. This web service could be either interacting with various client-side applications for real time predictions (as shown in Figure 2 by the “WWW” API entrypoint), or it could be a step in a streaming pipeline, receiving streaming data from (for example) Kafka streams and sending predictions to an output stream in a near real-time manner (as shown in Figure 2 by the “Streaming Application” flow).

In both configurations, they run on top of a model-serving infrastructure, which connects with a number of data, feature, model and metadata stores to fetch relevant artifacts, A/B testing platforms for controlling model versions and treatments (and evaluating performance), as well as monitoring and alerting solutions for system and model health as shown at the top of Figure 2.

Figure 2: Models as a Service

The core concept behind “ML model as a service” is to abstract away all the “peripheral” components (i.e., all components in Figure 2 other than “ML Model”) from ML practitioners so that they can simply focus on the ML model itself and deploy it directly as a service with ease. To enable this, we need the aforementioned two-part centralized platform solution: an easy-to-use user layer for model server creation and deployment, and a scalable, performant backend layer.

Now let’s dig deeper into these two layers with our findings and solutions.

For a solution to be successful here, the layer has to be easy to use for an ML practitioner and should have a minimal learning curve.

To provide such an interface, we investigated what the user layer or experience should look like. Talking to our ML practitioners and understanding their development style and patterns, we were intrigued by the flow paradigm and how often this is used in batch workflows.

In simple terms, a flow is a DAG (directed acyclic graph) representation that naturally mirrors how steps perform tasks in a machine learning or big data project. In fact, the concept of a flow is used by many ML and data engineering platforms and frameworks such as Kubeflow, zillow-metaflow, MLflow and Airflow, primarily in a batch (offline jobs) context.

But if we look carefully, the same flow paradigm can also be applied to an online service that serves machine learning models.

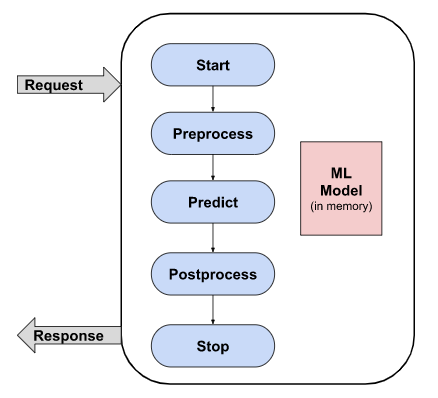

Figure 3: Service as Online flow

As seen in Figure 3, a model deployed as an online machine learning prediction service is essentially a flow containing steps that include but are not limited to starting to process a request, preprocessing the data received in the request and transforming it into acceptable format/features for the model, predicting using the deployed ML model, and postprocessing and converting the output prediction to a client agreed format.

In practice, users should be able to define and perform any step as they see fit (e.g., the flow can potentially branch out to enable concurrent data preprocessing). Of course, the load model step (which loads the ML model into memory) is universal, and the loaded ML model should stay active for the lifetime of the service.
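The idea of a service as a flow of steps can be sketched in a few lines of plain Python. This is only an illustration of the concept: the class name, method names and the `serve` helper are hypothetical and are not the interface of the Serving SDK.

```python
class ToyOnlineFlow:
    """Hypothetical online flow: load once, then run the steps per request."""

    def __init__(self):
        self.load_calls = 0
        self.model = None

    def load(self):
        # Runs once at service start; the model then stays in memory
        # for the lifetime of the service.
        self.load_calls += 1
        self.model = lambda features: sum(features) / len(features)

    def preprocess(self, request):
        # Turn the raw request into features the model accepts.
        return [float(v) for v in request["values"]]

    def predict(self, features):
        return self.model(features)

    def postprocess(self, prediction):
        # Convert the prediction into the format agreed with the client.
        return {"prediction": prediction}

    def handle(self, request):
        # The per-request steps form a simple linear DAG.
        data = request
        for step in (self.preprocess, self.predict, self.postprocess):
            data = step(data)
        return data


def serve(flow, requests):
    """Load the model once, then push every request through the flow."""
    flow.load()
    return [flow.handle(request) for request in requests]


flow = ToyOnlineFlow()
responses = serve(flow, [{"values": [1, 2, 3]}, {"values": ["4", "6"]}])
```

The point of the shape is that the user writes only the steps; where and how `handle` is invoked (web server, concurrency, probes) can be owned by the platform.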

This view really helped us come up with the “service as online flow” concept and create a user-friendly abstraction layer. Since Python is the preferred language for applied science at Zillow (and in the broader AI/ML community), we are doubling down with a Pythonic flow syntax to define our online serving flows.

Since we already use Metaflow (through our own version, zillow-metaflow) to define our batch workflows, using a similar syntax keeps the learning curve minimal. As a consequence, our users can express their online service code as flows in plain Python, without needing any knowledge of web service concepts, and still deploy their models as highly performant web services (explained further in the backend section).

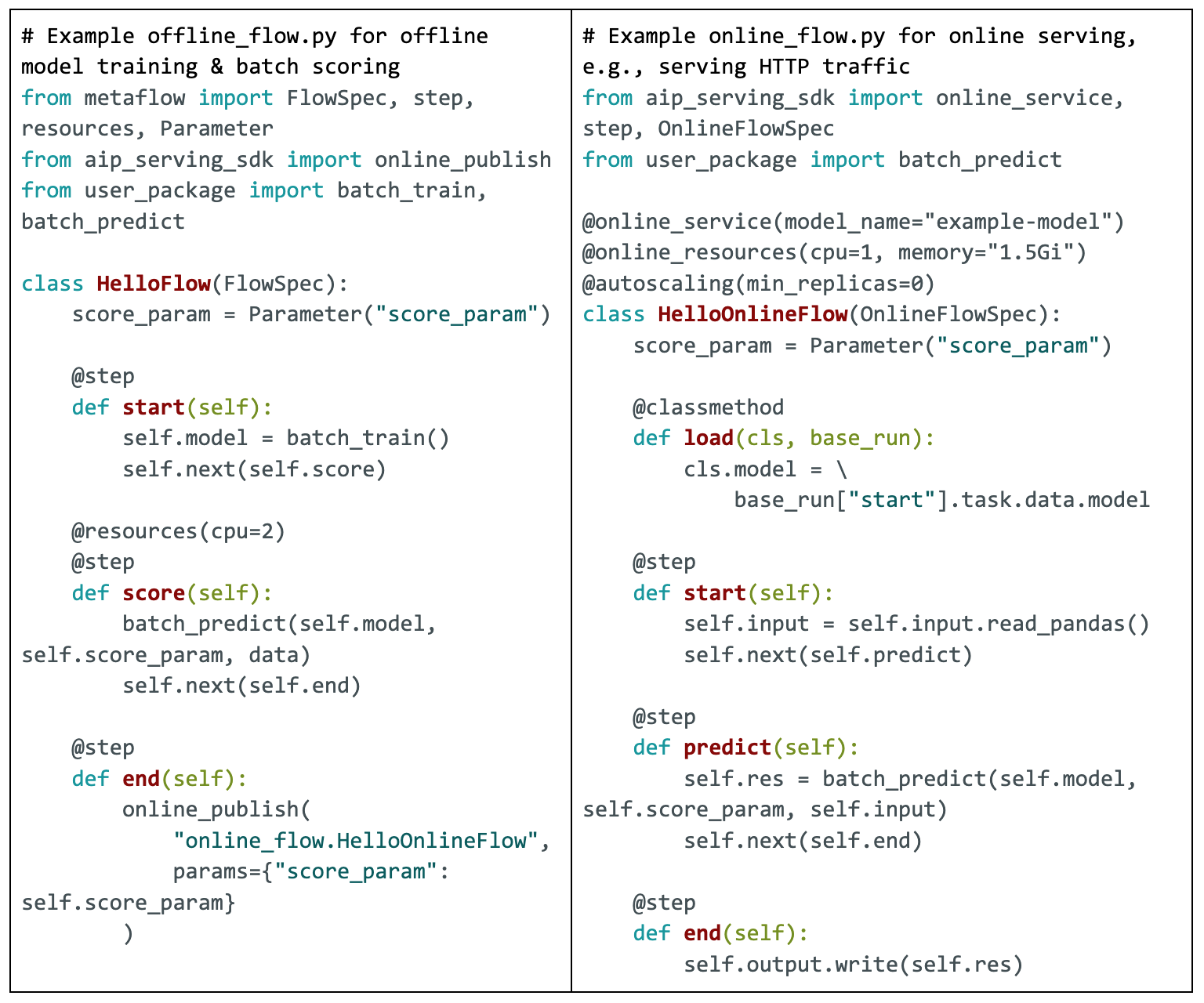

Here’s an example in which we put the offline batch flow and online service flow side by side to show what the user experience looks like:

Figure 4: On the left is an example of a “HelloFlow” offline flow or a batch training and scoring workflow. On the right, an online service flow for prediction.

The similarities in defining a flow between offline and online versions are by design. Significantly, where and how the steps mentioned in the code are orchestrated is completely abstracted from our end users. The online flow file on the right is all a user needs to define a complete service. Now let’s take a closer look at the online flow:

All of these online flow abstractions are provided by the Serving SDK (the Python module aip_serving_sdk). The Serving SDK also wraps a performant web server implementation (explained in the backend section), but our users don’t need to worry about that at all. In the future, we also plan to provide seamless integration with data, experimentation, monitoring and alerting systems using the same syntax.

After the service is defined through the online flow, the offline flow will take the responsibility for service deployment, as shown in the example code using the “online_publish” function. When it executes, the “online_publish” function deploys the targeted online flow file as a real-time service. The artifact and metadata of the service deployment offline flow will also be available in the online flow’s load function through the “base_run” pointer, so that loading models as well as any other artifacts in the online flow for serving can be as easy and elegant as a one-line pointer-style call.

Such a pattern also helps naturally with the critical model retraining and refresh story, something we call Automatic Model Refreshes: models that benefit from scheduled continuous retraining can be redeployed as services as soon as they are trained and evaluated in the offline flows. Note that our offline and online projects rely on GitLab™ and GitLab CI/CD to make this experience possible.
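The publish-and-load pattern described above can be imitated with a small in-memory toy. The real signatures of “online_publish” and “base_run” are not shown in this post, so everything here is an assumption: `toy_online_publish`, `offline_train`, the `SERVICES` registry and the shape of the run object are hypothetical stand-ins that only mimic the described behavior.

```python
from types import SimpleNamespace

# Toy in-memory "cluster": service name -> live flow instance.
SERVICES = {}


class PriceOnlineFlow:
    """Toy online flow; the class shape is hypothetical."""

    def load(self, base_run):
        # One-line, pointer-style access to an artifact of the offline run.
        self.weight = base_run.artifacts.weight

    def predict(self, x):
        return self.weight * x


def offline_train(weight, run_id):
    """Stand-in for an offline training flow that records its artifacts."""
    artifacts = SimpleNamespace(weight=weight)
    return SimpleNamespace(run_id=run_id, artifacts=artifacts)


def toy_online_publish(name, flow_cls, run):
    """Deploy (or redeploy) a flow, handing it the offline run as base_run."""
    service = flow_cls()
    service.load(base_run=run)
    SERVICES[name] = service  # a redeploy replaces the previous version
    return service


# First deployment from an initial training run.
toy_online_publish("price", PriceOnlineFlow, offline_train(2.0, "run-1"))
first = SERVICES["price"].predict(3)

# Scheduled retraining produces a new run; publishing again refreshes the service.
toy_online_publish("price", PriceOnlineFlow, offline_train(3.0, "run-2"))
refreshed = SERVICES["price"].predict(3)
```

Because the online flow reads its model from whichever run published it, a refresh in this toy is nothing more than running the offline flow again and publishing its result.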

All of these elements help make model server creation and deployment a straightforward, seamless and efficient experience. All the concepts involved are designed to be intuitive and Pythonic, without introducing much cognitive burden or conceptual overhead. The overhead is further reduced by the very similar syntax shared between offline and online flows. Furthermore, because offline and online flows live in the same repository and share the same container environment, it is less of a hassle to reuse code and align the runtime environment between model development and model deployment.

Internally, we’ve seen that the patterns of “ML model as a service” and “service as online flow” have helped us create and deploy model servers efficiently. Feedback from ML practitioners indicates that this pattern saves at least 60% of the time previously spent on infrastructure-type work, and we are continuing to improve the solution over time.

For our ML practitioners, this is all they have to do to deploy a model as a service to power core Zillow user experiences.

Behind the smooth user experience of model server creation and deployment is the underlying backend to make the model serving solution perform efficiently at scale in real production settings. We abstract this area from our ML practitioner end users completely so that they can focus on data and modeling, but this area is extremely important and is where our AI platform engineers spend a lot of time, aiming to provide an operationally excellent system.

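It’s worthwhile to explore what makes ML serving different from regular web services. The discussion that follows refers to four characteristics by number: No. 1, CPU-bound workloads; No. 2, varying request workloads; No. 3, the heavy request effect; and No. 4, serverless.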

These unique characteristics make ML serving an interdisciplinary area in which many web serving architectures that are performant for regular web services do not perform well, essentially because of the fundamental difference between CPU-bound and IO-bound workloads.

To tackle the unique characteristics of ML serving, a good first step is to introduce a request or event based auto-scaling mechanism to autoscale serving replicas based on the concurrency of in-flight traffic and the number of concurrent requests each replica could handle. This mechanism helps solve No. 2 (varying request workloads) and No. 4 (serverless) as now we can autoscale based on traffic itself rather than an indirect measure of traffic (e.g., CPU utilization).
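The core arithmetic of such request-based autoscaling is small enough to sketch directly. The function and parameter names below are illustrative, and the sketch deliberately omits what a production autoscaler adds on top (for example, averaging the concurrency over a time window before acting on it).

```python
import math


def desired_replicas(in_flight: int, target_per_replica: int,
                     min_replicas: int = 0, max_replicas: int = 10) -> int:
    """Replicas needed so that each one handles at most
    `target_per_replica` concurrent requests, clamped to the allowed range."""
    if target_per_replica <= 0:
        raise ValueError("target_per_replica must be positive")
    wanted = math.ceil(in_flight / target_per_replica)
    return max(min_replicas, min(max_replicas, wanted))


idle = desired_replicas(in_flight=0, target_per_replica=10)     # scale to zero
busy = desired_replicas(in_flight=95, target_per_replica=10)    # ceil(9.5)
capped = desired_replicas(in_flight=101, target_per_replica=10,
                          max_replicas=8)                       # hits the cap
```

Because the input is the traffic itself, the replica count follows the load directly; with `min_replicas=0`, an idle service can scale down to nothing, which is what makes the serverless pattern possible.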

However, this won’t help with No. 1 (CPU-bound) and No. 3 (heavy request effect). Because perfect load balancing is impossible, surplus requests will inevitably hit a serving replica, wait in the replica’s backlog queue to be fulfilled, and add context switch overhead. A long backlog queue can itself bring in all the heavy request effects.

Solving these remaining pain points requires an opinionated network architecture and data flow between the load balancer and the serving replicas. Ideally, we need a middle layer in between that buffers surplus requests and dispatches them only to serving replicas with available capacity. If smart enough, that middle layer could also proxy the work of probes to offload some burden from the serving replicas.
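A buffering middle layer of this kind can be sketched with asyncio. This is a conceptual toy under stated assumptions, not how any particular product implements it: the class name, the replica names and their capacities are hypothetical, and inference is faked with a short sleep.

```python
import asyncio
from collections import Counter
from typing import Dict


class BufferingDispatcher:
    """Holds surplus requests and hands each one to a replica only when
    that replica has a free slot."""

    def __init__(self, capacities: Dict[str, int]):
        # One queue entry per free slot; a replica with capacity 2 appears twice.
        self._free_slots = asyncio.Queue()
        for replica, capacity in capacities.items():
            for _ in range(capacity):
                self._free_slots.put_nowait(replica)
        self._in_flight = Counter()
        self.peak_in_flight = Counter()

    async def handle(self, request, work):
        # Surplus requests wait here, in the middle layer, not on a replica.
        replica = await self._free_slots.get()
        self._in_flight[replica] += 1
        self.peak_in_flight[replica] = max(
            self.peak_in_flight[replica], self._in_flight[replica])
        try:
            return await work(replica, request)
        finally:
            self._in_flight[replica] -= 1
            self._free_slots.put_nowait(replica)


async def fake_inference(replica, request):
    """Stand-in for model inference on a replica."""
    await asyncio.sleep(0.001)
    return replica, request * 2


async def _demo(n_requests, capacities):
    dispatcher = BufferingDispatcher(capacities)
    results = await asyncio.gather(
        *(dispatcher.handle(i, fake_inference) for i in range(n_requests)))
    return results, dict(dispatcher.peak_in_flight)


def run_demo(n_requests=10, capacities=None):
    capacities = capacities or {"replica-a": 1, "replica-b": 2}
    return asyncio.run(_demo(n_requests, capacities))
```

Calling `run_demo()` pushes ten requests through two replicas with three slots in total; every request completes, and no replica ever holds more concurrent requests than its capacity, so there is no backlog queue on the replica itself.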

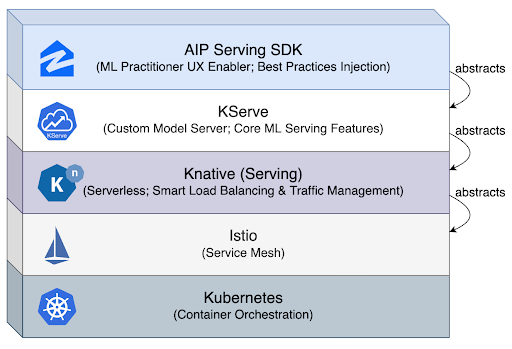

All these requirements may seem overwhelming, but actually it turns out that there’s already a mature open-source solution handling all the requirements gracefully – Knative Serving. Built on top of Kubernetes and Istio, Knative Serving offers:

With Knative helping solve most but not all of the pain points in ML serving efficiency (due to the complexities of a distributed computing environment), we are further utilizing KServe to complete the ML serving picture. KServe offers a performant base custom model server that we can further optimize upon, a great abstraction on top of Knative, as well as lots of critical features for ML serving such as request batching enabling batch optimization and separated transformer for pre/post-processing.
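To illustrate what request batching buys, the sketch below groups concurrent requests into one model call, flushing when a batch is full or when a short deadline passes. It is a conceptual toy and not KServe's implementation; the class name and the parameters `max_batch_size` and `max_latency_s` are illustrative.

```python
import asyncio


class MicroBatcher:
    """Collects individual requests and scores them together, flushing when
    the batch is full or when the oldest request has waited long enough."""

    def __init__(self, predict_batch, max_batch_size=4, max_latency_s=0.05):
        self.predict_batch = predict_batch
        self.max_batch_size = max_batch_size
        self.max_latency_s = max_latency_s
        self.batch_sizes = []   # recorded for inspection
        self._pending = []      # (input, future) pairs awaiting a flush
        self._timer = None

    async def predict(self, x):
        loop = asyncio.get_running_loop()
        future = loop.create_future()
        self._pending.append((x, future))
        if len(self._pending) >= self.max_batch_size:
            self._flush()       # batch is full: score immediately
        elif self._timer is None:
            # First request of a new batch starts the latency deadline.
            self._timer = loop.call_later(self.max_latency_s, self._flush)
        return await future

    def _flush(self):
        if self._timer is not None:
            self._timer.cancel()
            self._timer = None
        batch, self._pending = self._pending, []
        if not batch:
            return
        outputs = self.predict_batch([x for x, _ in batch])  # one model call
        self.batch_sizes.append(len(batch))
        for (_, future), output in zip(batch, outputs):
            future.set_result(output)


async def _batching_demo():
    batcher = MicroBatcher(lambda xs: [x * 2 for x in xs],
                           max_batch_size=4, max_latency_s=0.02)
    results = await asyncio.gather(*(batcher.predict(i) for i in range(5)))
    return results, batcher.batch_sizes


def run_batching_demo():
    return asyncio.run(_batching_demo())
```

In `run_batching_demo()`, five concurrent requests result in two model calls: a full batch of four, then the remaining request once its deadline expires. The trade-off is explicit: a larger batch amortizes per-call overhead, while the deadline bounds how much latency batching can add.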

Figure 5: High-level architecture of the Zillow Group AIP serving stack

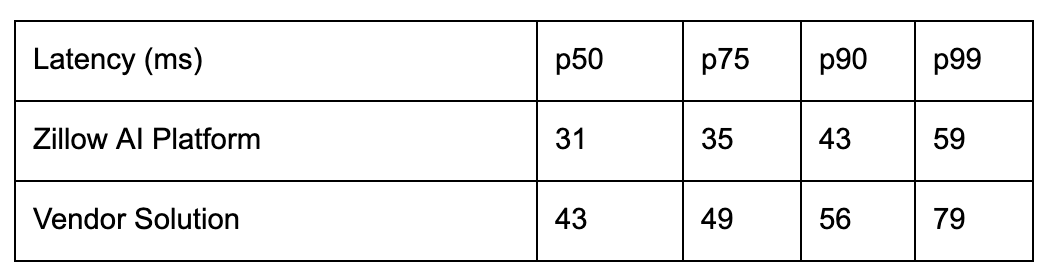

By leveraging these open source solutions and optimizing for performance internally based on our insights into ML serving characteristics, we have managed to boost ML serving performance in real production scenarios compared to alternative vendor solutions.

The performance difference varies by model and setting, but generally we see a 20%-40% improvement in P50 and long-tail latencies. Combined with other internal compute and resource-level optimizations, this in turn contributes to a 20%-80% lower cost to serve the same traffic.

Table 1. Performance comparison of a production grade model

Lastly, with Zillow’s AIP Serving SDK on top of the stack, we hide these advanced Knative and Kubernetes concepts from our end users and expose only the online flow user layer that we have implemented. Deployments are handled by our established GitLab CI/CD pipelines, and models that are retrained on a recurring schedule are redeployed automatically through the “online_publish” functionality that we have introduced.

The Serving SDK also integrates nicely with Zillow’s monitoring and observability tools and ecosystem, including platforms like Datadog and Splunk. We plan to introduce more and more abstractions for our users related to data access as well as running seamless A/B experiments, ultimately helping them focus only on the core modeling details and metrics and abstract away all the complexities that come from cross-platform integrations.

We are privileged to be in this open source era, with plenty of OSS, including Knative and KServe, empowering ML serving, whether as a primary goal (KServe) or as a byproduct (Knative’s primary goal is to enable serverless and event-driven applications, which at first glance seems unrelated to ML serving but turns out to be of great help).

However, we can only recognize and fully utilize these benefits when we understand the whys and hows of ML serving characteristics. We hope this blog post helps turn on the lights on the less-visited performance facet of ML serving when comparing serving solutions, especially for large-scale, efficient, production-grade serving.

With a combination of our easy-to-use user layer enabling server creation and deployments and a very scalable and performant backend layer, we are able to provide our users a self-service serving platform, significantly reducing or even eliminating the friction between various stages in the ML lifecycle. Our vision is to make it so simple that every machine learning model can be deployed in a self-service manner, easily and rapidly empowering our ML practitioners to enable our key business scenarios.

And this is only the beginning of the story as we continue to improve the ML efficiency for both humans and machines. We have started on some interesting paths to provide more abstractions with respect to data layer access, feature extractions and enhanced observability in model performance metrics, in addition to service system metrics. We are excited about our OSS engagements and working with an active community of world-class developers to build our solutions — and we look forward to contributing in this space as well.

We are committed to continually improving both our user experience and our computational efficiency, and we are happy to connect and collaborate as we continue to learn.

GITLAB is a trademark of GitLab Inc. in the United States and other countries and regions. All product names, logos, and brands are property of their respective owners. All company, product and service names used in this website are for identification purposes only. Use of these names, logos, and brands does not imply endorsement.