Topic Modeling for Real Estate Listing Descriptions

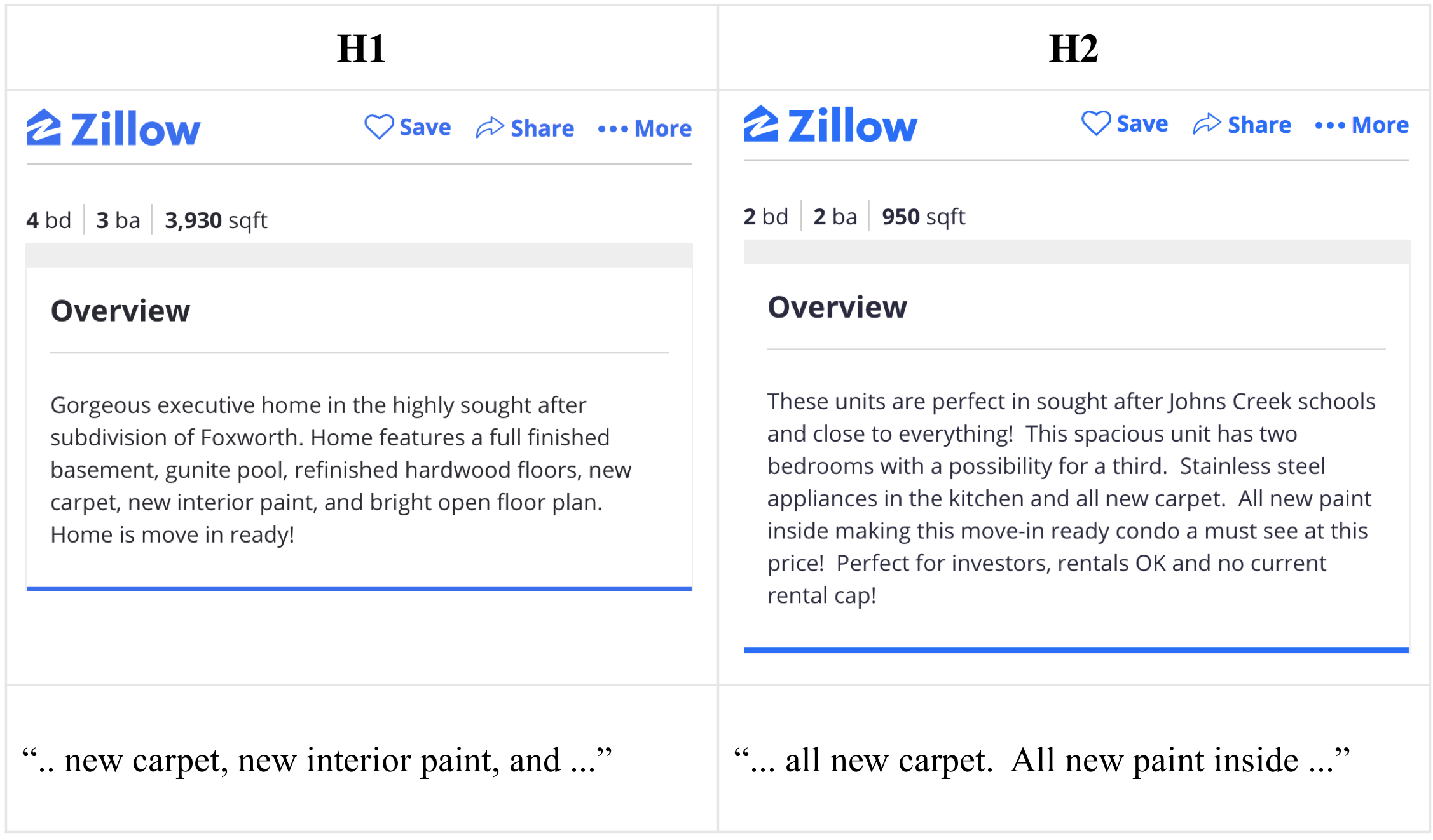

At Zillow, details about a home can be provided through multiple modalities, including video, images, text, and structured data. A machine learning system can easily use structured data, such as the lot size and square footage of a home, as signals about the home. Other modalities need further preprocessing to capture their content in a usable form (e.g., as a feature vector). The textual modality, in the form of listing descriptions, often contains unique information about a home. One way to represent listing descriptions for a machine learning system is a document-term matrix. This is a large, sparse matrix with one row per listing description and one column per vocabulary term. Most of its entries are zero, because each description uses only a small fraction of the vocabulary. For example, consider two homes with the following listing descriptions:

We can represent the listing description of each home by its own sparse vector, which forms one row of the matrix:
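The following is a minimal sketch that builds a document-term matrix with the Python standard library alone. It assumes two hypothetical, already-tokenized descriptions. The helpers `document_term_matrix` and `to_dense` are illustrative names, not a library API. Each row is stored sparsely as a mapping from column index to count, so the zeros are never materialized.

```python
from collections import Counter


def document_term_matrix(docs):
    """Build a sparse document-term matrix from tokenized documents.

    Returns the sorted vocabulary (the column labels) and one row per
    document, stored as {column_index: count} so zero entries are not stored.
    """
    vocab = sorted({word for doc in docs for word in doc})
    column = {word: i for i, word in enumerate(vocab)}
    rows = [{column[w]: c for w, c in Counter(doc).items()} for doc in docs]
    return vocab, rows


def to_dense(row, num_columns):
    """Expand one sparse row into a full list of counts."""
    return [row.get(j, 0) for j in range(num_columns)]


# Two hypothetical, already-tokenized listing descriptions.
docs = [
    ["spacious", "home", "with", "pool", "and", "pool", "house"],
    ["vacant", "lot", "near", "the", "beach"],
]
vocab, rows = document_term_matrix(docs)
print(vocab)
print([to_dense(r, len(vocab)) for r in rows])
```

Even in this toy case, about half of each dense row is zero. With a realistic vocabulary, almost every entry of each row would be zero, which is the sparsity problem discussed next.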

To tackle the problem of sparsity, we can project the high-dimensional sparse space into a lower-dimensional dense space using topic modeling techniques. These models represent each home as a probability distribution over topics, and each topic as a probability distribution over individual words. Training topic models involves tuning many hyper-parameters, such as the number of topics or the list of stopwords. Finding a good configuration of these hyper-parameters is one of the main challenges in using topic models.
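As a worked sketch in standard LDA notation, write θ_d for the topic distribution of document d and φ_k for the word distribution of topic k, with K topics and a vocabulary of size V. The probability of a word in a document then mixes the topic-specific word distributions. The dense K-dimensional vector θ_d takes the place of the sparse V-dimensional row of the document-term matrix:

$$
p(w \mid d) = \sum_{k=1}^{K} p(w \mid z = k)\, p(z = k \mid d) = \sum_{k=1}^{K} \phi_{k,w}\, \theta_{d,k},
\qquad \theta_{d,k} \ge 0,\quad \sum_{k=1}^{K} \theta_{d,k} = 1,\quad K \ll V
$$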

In this blog post, we describe steps that can be taken to understand listing descriptions using a topic modeling algorithm. We focus on:

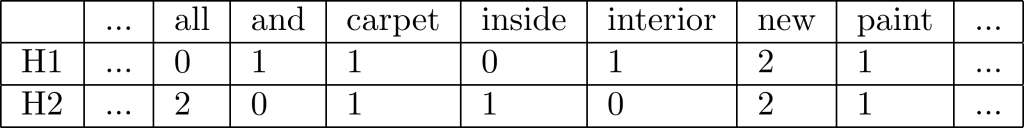

We use the now-standard Latent Dirichlet Allocation (LDA) model [1], depicted in Figure 1 as a directed graphical model in plate notation. In this generative model, each word (W) in a home description is generated from the distribution over words of its assigned topic (Z). The topic of each word is in turn drawn from the current document's distribution over topics (θ). Each of the K topics is characterized by its own distribution over words (φ). Together, the model describes K topics that generate the N words in the description of each of the M homes listed on Zillow.
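The generative story can be simulated directly. The following minimal Python sketch samples a toy corpus from the LDA generative process using only the standard library. The symmetric Dirichlet priors `alpha` and `beta`, their values, and the toy vocabulary are illustrative assumptions. Dirichlet draws are obtained by normalizing independent Gamma draws. In the sketch, `num_topics`, `num_docs`, and `doc_length` play the roles of K, M, and N.

```python
import random


def sample_dirichlet(rng, concentration):
    """Draw from a Dirichlet distribution by normalizing Gamma(a, 1) samples."""
    draws = [rng.gammavariate(a, 1.0) for a in concentration]
    total = sum(draws)
    return [x / total for x in draws]


def generate_corpus(vocab, num_topics, num_docs, doc_length, alpha=0.5, beta=0.5, seed=0):
    """Sample documents following LDA's generative process."""
    rng = random.Random(seed)
    # phi[k]: distribution over words for topic k, drawn from Dirichlet(beta)
    phi = [sample_dirichlet(rng, [beta] * len(vocab)) for _ in range(num_topics)]
    docs = []
    for _ in range(num_docs):
        # theta: this document's distribution over topics, drawn from Dirichlet(alpha)
        theta = sample_dirichlet(rng, [alpha] * num_topics)
        doc = []
        for _ in range(doc_length):
            z = rng.choices(range(num_topics), weights=theta)[0]  # topic Z for this word
            w = rng.choices(vocab, weights=phi[z])[0]  # word W drawn from topic z
            doc.append(w)
        docs.append(doc)
    return docs, phi


vocab = ["bedroom", "kitchen", "lot", "acre", "beach", "shops"]
docs, phi = generate_corpus(vocab, num_topics=2, num_docs=3, doc_length=8)
for doc in docs:
    print(" ".join(doc))
```

Training LDA inverts this process. Given only the observed words, it infers plausible values of φ, θ, and the per-word topics Z.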

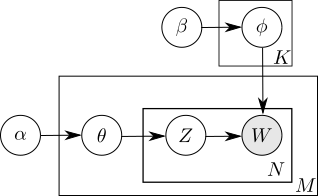

The distribution of words plays a critical role in the topics that LDA learns. Therefore, before diving into the topic models, let's examine the distribution of words in the collection of Zillow listing descriptions. First, we look at the popularity of words in this collection, tokenized with the NLTK tokenizer [2]. We remove punctuation marks and use the Porter stemmer to reduce the size of the vocabulary.
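The following is a minimal sketch of this step under stated assumptions. A simple regular-expression tokenizer stands in for the NLTK tokenizer. Stemming is passed in as a function; to mirror the post, one could pass `PorterStemmer().stem` from `nltk.stem`. The helper also computes, for each word, the fraction of descriptions that contain it. This is the kind of statistic behind statements such as “home” appearing in over half of the descriptions. The helper names and example descriptions are hypothetical.

```python
import re
from collections import Counter


def preprocess(text, stem=lambda token: token):
    """Lowercase, split into alphanumeric tokens (dropping punctuation), and stem."""
    tokens = re.findall(r"[a-z0-9]+", text.lower())
    return [stem(token) for token in tokens]


def document_frequency(docs):
    """Fraction of documents in which each word appears at least once."""
    counts = Counter()
    for doc in docs:
        counts.update(set(doc))  # count each word once per document
    return {word: c / len(docs) for word, c in counts.items()}


descriptions = [
    "Charming home with an updated kitchen!",
    "Vacant lot, 2 acres, near the beach.",
    "Spacious home: 3 bedrooms, new kitchen.",
    "Investment property close to shops.",
]
docs = [preprocess(text) for text in descriptions]
df = document_frequency(docs)
popular = sorted(word for word, share in df.items() if share >= 0.5)
print(popular)  # ['home', 'kitchen']
```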

The term frequencies of the most popular words in this collection of listing descriptions, shown in Figure 2, provide valuable information about the distribution of words. The word “home” appears in over half of the listing descriptions. The following words appear in more than a quarter of the listing descriptions at Zillow:


The popularity of stopwords in listing descriptions at Zillow also differs from their popularity in general English documents, such as those provided by NLTK. Some noteworthy differences are:

Furthermore, we made some other interesting observations:

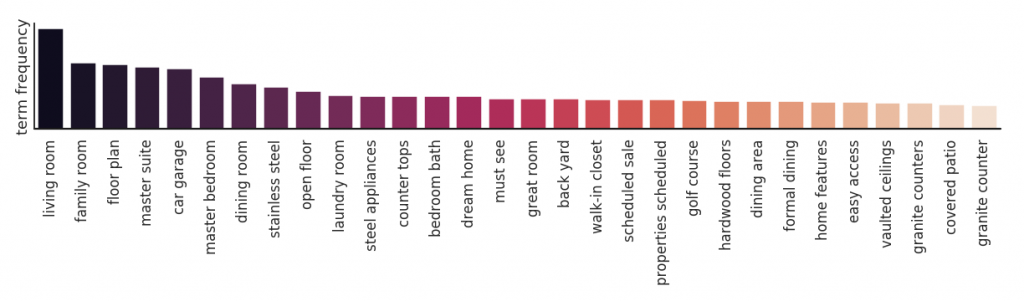

We remove the general list of stopwords (provided by NLTK) and, in addition to unigrams, look at the popularity of bigrams in this collection (shown in Figure 3). We observe that:

Words that appear in a majority of documents, such as “and” and “the”, are often assumed to have little effect on the topics a document describes. Therefore, as a preprocessing step for training LDA, it is common practice to remove the most popular words from the collection. In the following, we examine the effect of domain-specific popular words, such as “home” and “room”, on the distribution of derived topics.
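A minimal sketch of this preprocessing step follows. The helper `remove_popular_words` is a hypothetical name. It drops every word whose document frequency exceeds a threshold, plus an optional list of general stopwords. A threshold of 0.25 corresponds to the “more than a quarter of listing descriptions” cutoff used later in this post. scikit-learn's `CountVectorizer` exposes the same idea through its `max_df` parameter.

```python
from collections import Counter


def remove_popular_words(docs, max_df=0.25, stopwords=()):
    """Drop words that appear in more than `max_df` of the documents, plus given stopwords.

    Returns the filtered documents and the set of words removed for being popular.
    """
    doc_counts = Counter(word for doc in docs for word in set(doc))
    popular = {word for word, c in doc_counts.items() if c / len(docs) > max_df}
    dropped = popular | set(stopwords)
    filtered = [[word for word in doc if word not in dropped] for doc in docs]
    return filtered, popular


docs = [
    ["home", "room", "kitchen", "and", "bedroom"],
    ["home", "lot", "acre", "and", "view"],
    ["home", "room", "pool"],
    ["lot", "property", "located", "near", "beach"],
]
filtered, popular = remove_popular_words(docs, max_df=0.5, stopwords={"and"})
print(sorted(popular))  # ['home']
print(filtered)
```

With `max_df=0.5`, only “home” (in 3 of 4 documents) is dropped for popularity. “room” and “lot” appear in exactly half of the documents, which does not exceed the threshold.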

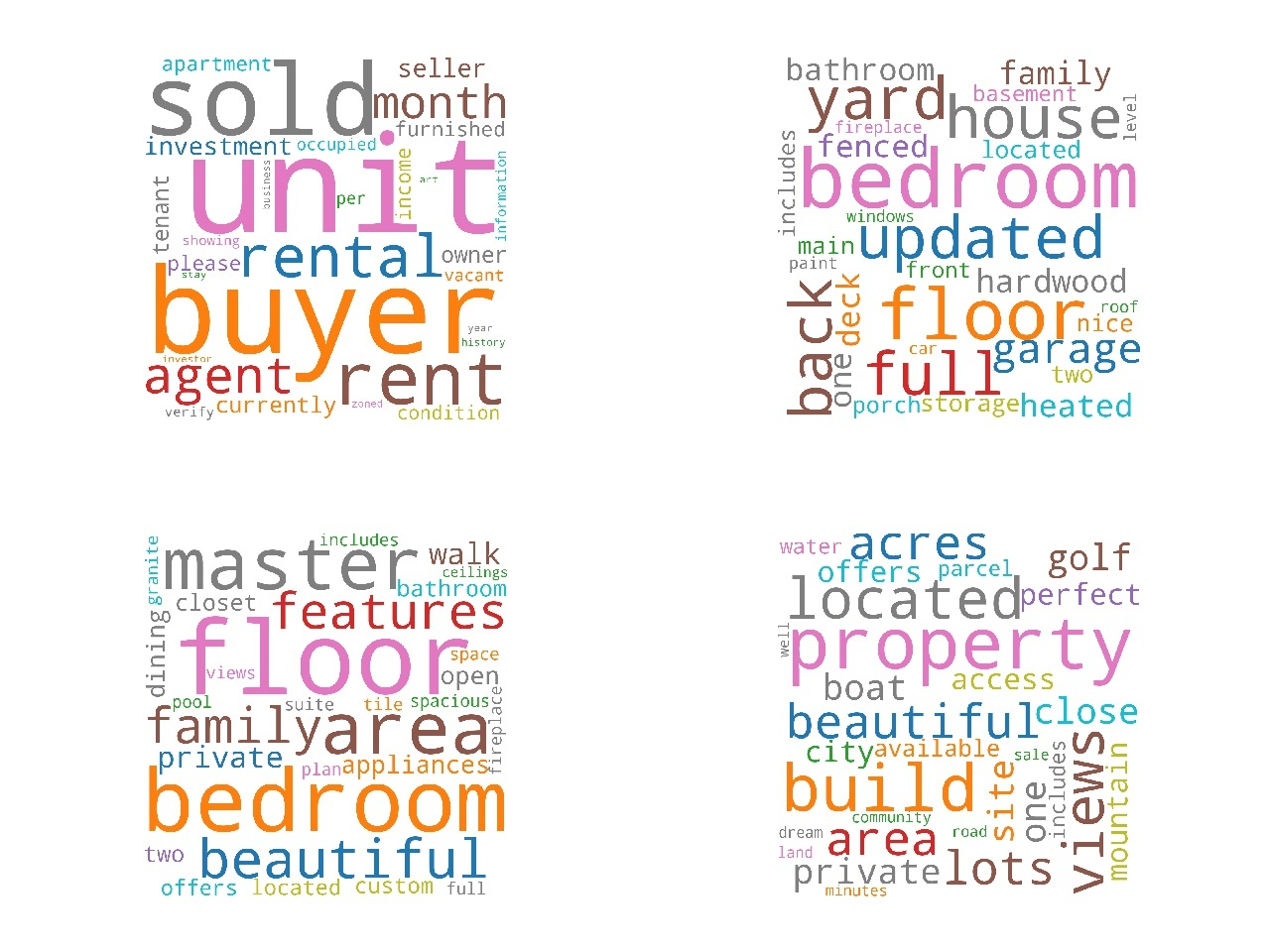

We use word clouds to depict the topic distributions. Considering only two topics for the collection, we get the following distribution of words over topics:

In the word clouds shown in Figure 4, the size of each word reflects the probability of that word given the topic, p(word|topic). In one topic, the most related words are “room” and “home”. In the other topic, the most related words are “lot” and “home”. Even with only two topics, we can see that the listing descriptions are mainly about “existing homes” and “vacant lands”. This is consistent with the fact that listings on Zillow correspond to either existing homes or vacant lands. Since the word “home” appears in more than half of the listing descriptions, it is expected to be among the most related words in both topics.
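As a minimal sketch of where p(word|topic) comes from, here is a collapsed Gibbs sampler for LDA in plain Python. This is a swapped-in textbook technique, not the authors' code. It is trained with two topics on a hypothetical toy corpus. The hyper-parameters `alpha`, `beta`, and `iterations` are illustrative assumptions. Each row of `phi` is one topic's p(word|topic), and its top words are the ones a word cloud would render largest.

```python
import random


def train_lda(docs, num_topics, alpha=0.1, beta=0.01, iterations=200, seed=0):
    """Fit LDA by collapsed Gibbs sampling; returns vocab, phi (topic-word), theta (doc-topic)."""
    rng = random.Random(seed)
    vocab = sorted({w for doc in docs for w in doc})
    word_id = {w: i for i, w in enumerate(vocab)}
    V, K = len(vocab), num_topics
    doc_topic = [[0] * K for _ in docs]       # words in doc d assigned to topic k
    topic_word = [[0] * V for _ in range(K)]  # times word v is assigned to topic k
    topic_total = [0] * K                     # total words assigned to topic k
    z = []                                    # current topic of every token
    for d, doc in enumerate(docs):
        z.append([])
        for w in doc:
            k = rng.randrange(K)  # random initial topic
            z[d].append(k)
            doc_topic[d][k] += 1
            topic_word[k][word_id[w]] += 1
            topic_total[k] += 1
    for _ in range(iterations):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                v, k = word_id[w], z[d][i]
                # Remove this token's current assignment from the counts.
                doc_topic[d][k] -= 1
                topic_word[k][v] -= 1
                topic_total[k] -= 1
                # Unnormalized conditional p(z = j | all other assignments).
                weights = [
                    (doc_topic[d][j] + alpha) * (topic_word[j][v] + beta) / (topic_total[j] + V * beta)
                    for j in range(K)
                ]
                k = rng.choices(range(K), weights=weights)[0]
                z[d][i] = k
                doc_topic[d][k] += 1
                topic_word[k][v] += 1
                topic_total[k] += 1
    # Smoothed point estimates of p(word | topic) and p(topic | document).
    phi = [[(topic_word[k][v] + beta) / (topic_total[k] + V * beta) for v in range(V)] for k in range(K)]
    theta = [[(doc_topic[d][k] + alpha) / (len(doc) + K * alpha) for k in range(K)]
             for d, doc in enumerate(docs)]
    return vocab, phi, theta


def top_words(vocab, phi, n=3):
    """Words with the highest p(word | topic) for each topic."""
    return [[vocab[v] for v in sorted(range(len(vocab)), key=lambda v: -row[v])[:n]] for row in phi]


docs = [
    ["home", "room", "bedroom", "kitchen", "room"],
    ["home", "kitchen", "bedroom", "master", "room"],
    ["lot", "acre", "property", "located", "lot"],
    ["lot", "home", "property", "acre", "located"],
]
vocab, phi, theta = train_lda(docs, num_topics=2)
for k, words in enumerate(top_words(vocab, phi)):
    print(f"topic {k}:", words)
```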

From the distribution of words per topic in Figure 5, we can see that removing the word “home” does not significantly change the semantic meaning of the topics. After removing “home”, other words have higher relevance probabilities: “bedroom” and “kitchen” in one topic, and “property” and “located” in the other.

By repeating this step and removing the next most related words (“room”, “kitchen”, and “lot”) from the collection, we observe only slight changes in the semantic meaning of these topics. For example, after removing the word “kitchen”, the first topic becomes more closely related to the words “bedroom” and “master”.

The next question is whether the meaning of each topic changes more significantly when a larger number of LDA topics is extracted. In the following figures, we use four topics and keep all the popular words:

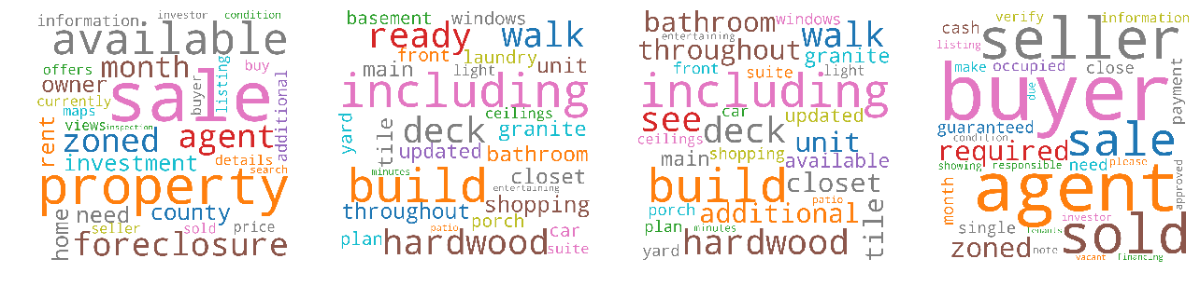

Now, we remove the popular words that appear in more than a quarter of listing descriptions and obtain the following topics:

We can see that with more topics (4 instead of 2), removing popular words from the collection causes a more significant change in the semantic meaning of the derived topics. With four topics, removing the popular words leads the model to derive a topic about “sale” instead of a topic about “view”. On the other hand, with a larger number of topics, the model has more parameters and is less likely to converge to the same solution. Therefore, the model has less confidence in its derived topics when there are more topics. As a result, removing the most popular words from the collection changes those topics more significantly.

The number of topics is a hyper-parameter of the LDA model. As shown earlier, LDA models trained with different numbers of topics provide different insights into the collection of listing descriptions. As the number of topics increases, the trained LDA model tends to produce duplicate or similar topics. For example, with 100 topics, we identify the following topics:

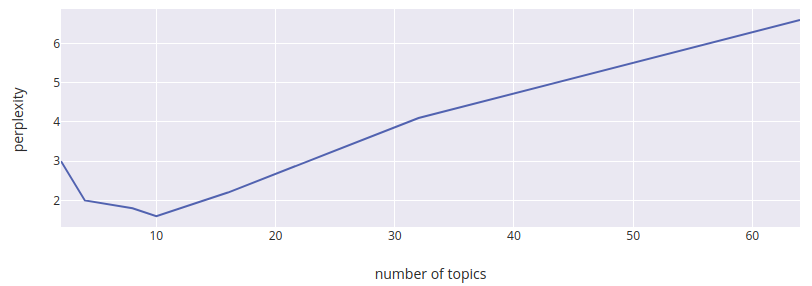

The second and third topics in Figure 9 share the same list of most relevant words, so they can be considered duplicates. LDA generates duplicate topics when the given number of topics is greater than the actual number of topics in the collection. Therefore, the next question we need to address is: what is the actual number of topics in the collection of home descriptions? As we increase the number of topics used to train LDA, the model still converges to the same number of unique topics, since the actual number of topics can be smaller than the given number. We use perplexity [3] to measure the quality of the topic model. Figure 10 shows that the model has the lowest perplexity when we train LDA with 10 topics. In other words, we conclude that the actual number of topics is around 10.
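Perplexity is the exponentiated negative average log-likelihood per word, so lower values are better. The following is a minimal sketch that assumes the topic-word distributions `phi` and per-document topic proportions `theta` are already estimated for the evaluation documents. In practice, perplexity is computed on held-out documents, and estimating their likelihood is the subtle part that [3] examines. The function name and toy inputs are hypothetical.

```python
import math


def perplexity(docs, theta, phi, word_id):
    """exp(-(total log-likelihood) / (number of tokens)) under an LDA mixture.

    docs: tokenized documents; theta[d][k] = p(topic k | doc d);
    phi[k][v] = p(word v | topic k); word_id maps a word to its column v.
    """
    log_likelihood, num_tokens = 0.0, 0
    for d, doc in enumerate(docs):
        for word in doc:
            v = word_id[word]
            p_word = sum(theta[d][k] * phi[k][v] for k in range(len(phi)))
            log_likelihood += math.log(p_word)
            num_tokens += 1
    return math.exp(-log_likelihood / num_tokens)


# Sanity check: if every topic is uniform over 4 words, each word has
# probability 1/4, so the perplexity is (up to rounding) the vocabulary size.
word_id = {"home": 0, "lot": 1, "room": 2, "view": 3}
phi = [[0.25] * 4, [0.25] * 4]
theta = [[0.7, 0.3], [0.1, 0.9]]
docs = [["home", "room"], ["lot", "view", "view"]]
print(perplexity(docs, theta, phi, word_id))
```

To choose the number of topics, one would train a model for each candidate value and compare their held-out perplexities.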

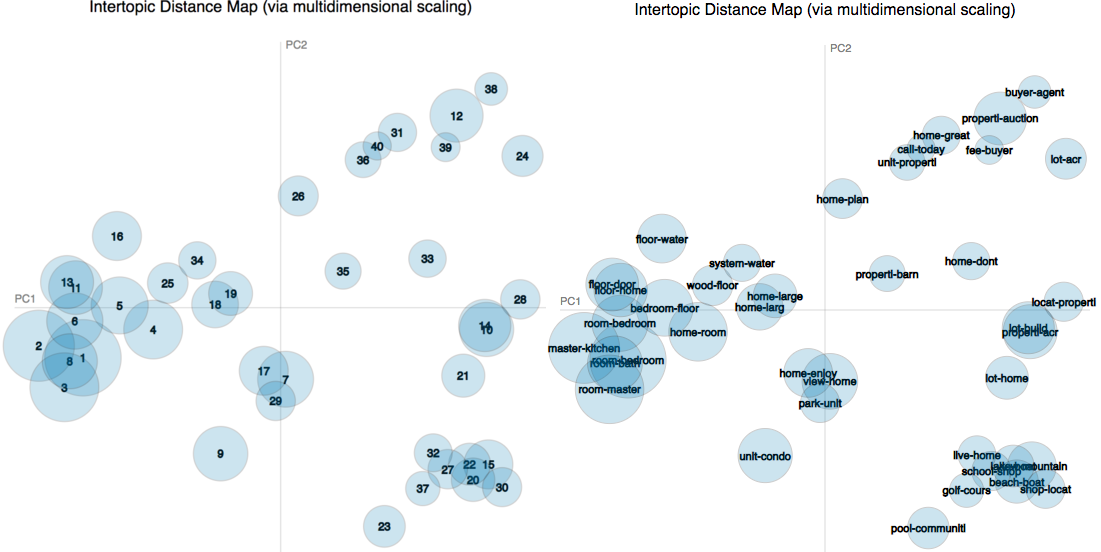

Using the inter-topic distance map described below, we show that the derived topics can be grouped into a smaller number of topic clusters.

We can compute inter-topic distances using the Jensen-Shannon divergence [4] to understand how similar topics are. Besides the distribution of words within topics, the inter-topic distances provide valuable information about the content of listing descriptions. By considering the distances between topics, we can infer clusters of topics. The following two figures illustrate this. A set of topics (topics 30, 20, 15, 22, …, shown in the bottom-right corner of the figure) lies at a significant distance from the rest of the topics. These topics can be considered a cluster about the location of a house and its distance from attractions such as shops, beaches, and mountains.
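The following is a minimal sketch of the inter-topic distance computation. It assumes each topic is a probability vector over a shared vocabulary, such as a row of a topic-word matrix. Base-2 logarithms keep the Jensen-Shannon divergence between 0 and 1. A value of 0 means identical topics, like the duplicates in Figure 9, and 1 means topics with no words in common. The helper names and example topics are hypothetical.

```python
import math
from itertools import combinations


def kl_divergence(p, q):
    """Kullback-Leibler divergence KL(p || q) in bits; terms with p_i = 0 contribute 0."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def js_divergence(p, q):
    """Jensen-Shannon divergence: average KL divergence to the midpoint distribution."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)


def closest_topic_pairs(phi, n=3):
    """Return the n most similar topic pairs as (divergence, topic_i, topic_j)."""
    pairs = [(js_divergence(phi[i], phi[j]), i, j) for i, j in combinations(range(len(phi)), 2)]
    return sorted(pairs)[:n]


# Hypothetical topics over a 4-word vocabulary; topics 1 and 2 are near-duplicates.
phi = [
    [0.70, 0.20, 0.05, 0.05],
    [0.05, 0.05, 0.45, 0.45],
    [0.05, 0.05, 0.50, 0.40],
]
print(closest_topic_pairs(phi, n=1))
```

The midpoint `m` is positive wherever `p` or `q` is, so the KL terms never divide by zero. The square root of this divergence is a proper metric, which is convenient when feeding the pairwise distances into a clustering method.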

The distribution of detected topics reveals the main topics mentioned in the listing descriptions at Zillow: “Homes for sale/rent”, “Vacant Lands”, “Real Estate Investment”, and “attractions”. More documents depend strongly on “Homes for sale/rent” than on any of the other main topics.
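Counting how many descriptions each topic dominates is one simple way to compare topic prevalence. The sketch below assigns each document to the topic with the largest weight in its topic distribution. This argmax rule, the helper name, and the example numbers are illustrative assumptions, not the exact procedure behind the results above.

```python
from collections import Counter


def dominant_topic_counts(theta, topic_names):
    """Count documents by their highest-probability topic."""
    counts = Counter()
    for topic_weights in theta:
        best = max(range(len(topic_weights)), key=topic_weights.__getitem__)
        counts[topic_names[best]] += 1
    return counts


topic_names = ["Homes for sale/rent", "Vacant Lands", "Real Estate Investment", "attractions"]
theta = [  # hypothetical per-document topic distributions
    [0.70, 0.10, 0.10, 0.10],
    [0.60, 0.20, 0.10, 0.10],
    [0.10, 0.80, 0.05, 0.05],
    [0.20, 0.10, 0.60, 0.10],
    [0.50, 0.10, 0.10, 0.30],
]
print(dominant_topic_counts(theta, topic_names).most_common())
```

A hard argmax ignores mixed documents, such as the last one, which also has substantial weight on “attractions”. Summing `theta` columns instead gives a soft count that keeps this information.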

[1] Blei, David M., Andrew Y. Ng, and Michael I. Jordan. “Latent Dirichlet Allocation.” Journal of Machine Learning Research 3 (Jan 2003): 993-1022.

[2] NLTK tokenize package documentation. https://www.nltk.org/api/nltk.tokenize.html

[3] Wallach, Hanna M., Iain Murray, Ruslan Salakhutdinov, and David Mimno. “Evaluation Methods for Topic Models.” In Proceedings of the 26th Annual International Conference on Machine Learning, pp. 1105-1112. ACM, 2009.

[4] Lin, Jianhua. “Divergence Measures Based on the Shannon Entropy.” IEEE Transactions on Information Theory 37, no. 1 (1991): 145-151.