Zillow maintains a database of 110 million homes in the United States. To keep the database updated, we import data from various feeds from the counties, brokers, and MLS. Another important source of data is the actual homeowner. We have a feature called “Claim Your Home” that allows the homeowner, after verification, to update their home data, such as home facts, description, photos, and videos. This data is also used to improve the accuracy of the Zestimate. Moreover, after claiming, homeowners have access to unique content and tools related to their home.

In order to get more users to claim their home, we implemented a machine learning application and multiple models to predict the homeowner for all homes nationwide. The models are used in a variety of use cases to increase home claims. For example, we display a pop-up for the user to claim the home if the model predicts a high score.

Models

There are multiple regression classification models involved: the NFS (not-for-sale homes) property model, the RS (recently sold homes) property model, the user model, and an ensemble model. The NFS property model predicts the owner of a home based on user behavior for a particular home. The RS property model is similar to the NFS property model, but it is optimized for recently sold homes. We split the models because we found the feature importance to be quite different between the two cases. To avoid the vast search pitfall (with many candidate predictors, some will appear predictive purely by chance) and to improve accuracy, we wanted to reduce the number of predictive variables, and splitting the models let us do that. Finally, the user model predicts the owner of the home by leveraging a particular user’s behavior across the various Zillow brand sites and mobile apps.

Training Set

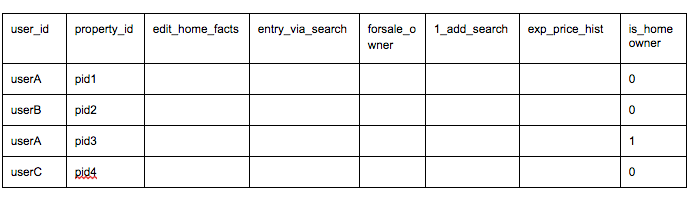

Our homeowner predictions are based on supervised learning methodologies. The training sets for the various models are based on variations of website and mobile behavioral data. The label is binary: did this user activity belong to the user who claimed the property? Each training set row represents a user activity with regard to an actual property. Thus, in aggregate, the training set is all user activity events for homes with actual claims. The difference between the various models is in the feature engineering.
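As a rough illustration of the labeling rule described above, here is a minimal Python sketch. The `Activity` record, the `label_activities` helper, and the sample IDs are all hypothetical names made up for this example; the real pipeline and schema are not shown in this post.

```python
from typing import NamedTuple


class Activity(NamedTuple):
    """One user activity event on one property (hypothetical schema)."""
    user_id: str
    property_id: int
    event: str


def label_activities(activities, claims):
    """Attach a binary label to each activity.

    `claims` maps property_id -> user_id of the user who claimed the home.
    Activities on homes without a claim are dropped, since they have no
    ground truth to learn from.
    """
    rows = []
    for a in activities:
        claimer = claims.get(a.property_id)
        if claimer is None:
            continue  # no claim on this home, so no label is available
        label = int(a.user_id == claimer)  # 1 if this user claimed the home
        rows.append((a.user_id, a.property_id, a.event, label))
    return rows


# Made-up sample data for illustration only.
activities = [
    Activity("u1", 101, "page_view"),
    Activity("u2", 101, "edit_home_facts"),
    Activity("u3", 202, "page_view"),
]
claims = {101: "u2"}
labeled = label_activities(activities, claims)
```

Only the two events on property 101 survive, because property 202 has no claim, and only the row for user `u2` gets a positive label.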

Feature Engineering

Feature engineering is the process of mapping important raw signals into concrete variables for the model. The decision to split our predictions into multiple models was driven by the feature engineering process and model performance metrics.

For the property models, we are interested in predicting a homeowner based on user behavior signals relative to the particular property we are predicting. In other words, for a specific property, we want to look at all user activity (e.g. page view, clicks on tabs, interaction with photos) from all users, which includes the actual homeowner, and distinguish homeowner activity from non-homeowner activity. Out of 45 different features, here are some of the important ones for the NFS property model:

edit_home_facts = (# of times user triggered edit home fact event on the property) / (# of times all users triggered edit home fact event on the property)

entry_via_search = (# of times user arrived at the property via search on homepage from the sell tab) / (# of times all users arrived at the property via search on homepage from the sell tab)

forsale_owner = (# of times user triggered post as for-sale-by-owner event) / (# of times all users triggered post as for-sale-by-owner event)

1_add_search = (# of times user searched for single address for the target home) / (# of times all users searched for single address for the target home)

exp_price_hist = (# of times user expanded price history module) / (# of times all users expanded price history module)
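The features above share one shape: a user's event count on a property divided by the count from all users on that property. The sketch below shows one way such ratios could be computed in plain Python. The `ratio_features` function, the event tuple layout, and the sample data are assumptions for illustration, not our production feature code.

```python
from collections import Counter


def ratio_features(events):
    """Compute per-user share of each event type on each property.

    `events` is an iterable of (user_id, property_id, event_type) tuples.
    Returns {(user_id, property_id): {event_type: share}} where share is
    the user's count divided by the count across all users.
    """
    user_counts = Counter()
    total_counts = Counter()
    for user_id, property_id, event_type in events:
        user_counts[(user_id, property_id, event_type)] += 1
        total_counts[(property_id, event_type)] += 1

    features = {}
    for (user_id, property_id, event_type), n in user_counts.items():
        share = n / total_counts[(property_id, event_type)]
        features.setdefault((user_id, property_id), {})[event_type] = share
    return features


# Made-up sample events for illustration only.
events = [
    ("u1", 101, "edit_home_facts"),
    ("u1", 101, "edit_home_facts"),
    ("u1", 101, "edit_home_facts"),
    ("u2", 101, "edit_home_facts"),
    ("u2", 101, "exp_price_hist"),
]
features = ratio_features(events)
```

Here user `u1` accounts for three of the four edit events on property 101, so their `edit_home_facts` value is 0.75, while `u2` gets 0.25.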

The training data set, with a subset of the predictor variables, looks something like this:

The user model follows a similar paradigm. The difference is within the feature engineering – specifically the features only incorporate that specific user’s activity across all properties (vs. all other user activity for the particular property as in the property models).

Model Performance

The key model performance metrics we looked at are:

Precision – of the users we predicted to be homeowners, the % that actually are homeowners

Recall – of all actual homeowners, the % that we correctly predicted to be homeowners

F1 – the harmonic mean of precision and recall, a single score that balances the two
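For reference, the three metrics can be computed from binary labels and predictions as in the sketch below. The function name and the sample label vectors are made up for illustration and are not our actual evaluation results.

```python
def precision_recall_f1(y_true, y_pred):
    """Return (precision, recall, f1) for binary labels (1 = homeowner)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives

    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = 0.0
    return precision, recall, f1


# Made-up example: a model that finds most homeowners but over-predicts.
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 1, 1, 0]
precision, recall, f1 = precision_recall_f1(y_true, y_pred)
```

In this toy example the model catches three of four homeowners (recall 0.75) but half of its positive predictions are wrong (precision 0.5), which gives an F1 of 0.6.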

We found the property models were very strong in recall but not as strong with precision. On the other hand, our user model was weak in recall but strong in precision. By combining these various models, we are able to get a good balance of high recall and high precision.

System Engineering

Now that the model and features have been figured out, the next step is to actually make predictions of the homeowner for 150+ million homes. This requires us to move the model prototype code, which includes data ingestion, ETL, feature extraction, and training and scoring the model, into real production code. Our definition of “real” production code is:

Unit tests with code coverage at least 80%

Regression test suite with golden data set

Automation – continuous integration and deployment

High quality code – the right data structures, efficient but clean / maintainable Python code.

Performance & Scalability – the code runs quickly and can scale linearly as we add nodes.

We decided on Spark as our data processing platform. For data, we decided on real-time streaming data ingestion with Kinesis. The two reasons we chose Kinesis over open-source Kafka are (1) Kinesis is cheap and (2) Kinesis is a managed service, so there is less work on the operations side of things.

With Spark, the data partitioning strategy is critical for performance. The goal is to avoid major shuffle operations by partitioning the data appropriately at the beginning. I’ll post on more details of Spark and performance in future blogs.
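To make the partitioning idea concrete, here is a plain-Python sketch rather than actual Spark code. It assumes events are keyed by an integer `property_id` and that the workload aggregates per property. Both function names and the sample data are hypothetical. In Spark, the analogous step would be repartitioning the data by the key column before aggregating.

```python
from collections import Counter


def partition_by_property(events, num_partitions):
    """Route each event to a partition chosen from its property_id.

    All events for a given property land in the same partition, so a
    per-property aggregation never needs data from another partition.
    """
    partitions = [[] for _ in range(num_partitions)]
    for event in events:
        _user_id, property_id, _event_type = event
        partitions[property_id % num_partitions].append(event)
    return partitions


def count_events_per_property(partition):
    """Aggregate inside a single partition, with no cross-partition data."""
    return Counter(property_id for _, property_id, _ in partition)


# Made-up sample events for illustration only.
events = [
    ("u1", 101, "page_view"),
    ("u2", 101, "page_view"),
    ("u3", 202, "page_view"),
    ("u1", 303, "page_view"),
]
partitions = partition_by_property(events, num_partitions=2)
per_partition = [count_events_per_property(p) for p in partitions]
```

Because each property lives in exactly one partition, the per-partition counts are already final and can simply be collected, which is the property that lets a job skip a shuffle.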

Conclusion

The home claims model has been a huge success in driving up home claims by homeowners. We have a lot of new ideas we are exploring, including using more data sources and signals, trying different modeling techniques to improve precision and recall, and optimizing our code to squeeze out better performance.