Zillow 2020 AI Forum Recap

After hosting the 2018 Zillow AI Forum in San Francisco and the 2019 event in Seattle, Zillow held this year’s installment of the forum as its first virtual event. With five presenters and over 400 attendees, the event covered the latest in Data Engineering, Machine Learning and Natural Language Processing in just a matter of hours!

I joined ZG in January 2020, and over the last 3+ months I have been thoroughly impressed (and borderline overwhelmed) by the variety and volume of ongoing R&D in AI. I see a lot of value in forums and conferences like this one, which are not only instrumental in bridging some of this knowledge gap but also provide an excellent platform for discussion. In this blog, I’ll summarize each topic presented at this exciting event.

In my experience, the majority of the time and effort behind any data-driven project goes into obtaining the required data in an efficient, reliable and scalable manner. As a machine learning engineer, I’m well aware of many of these challenges, so I found the first talk, on Zillow’s DSP, particularly interesting.

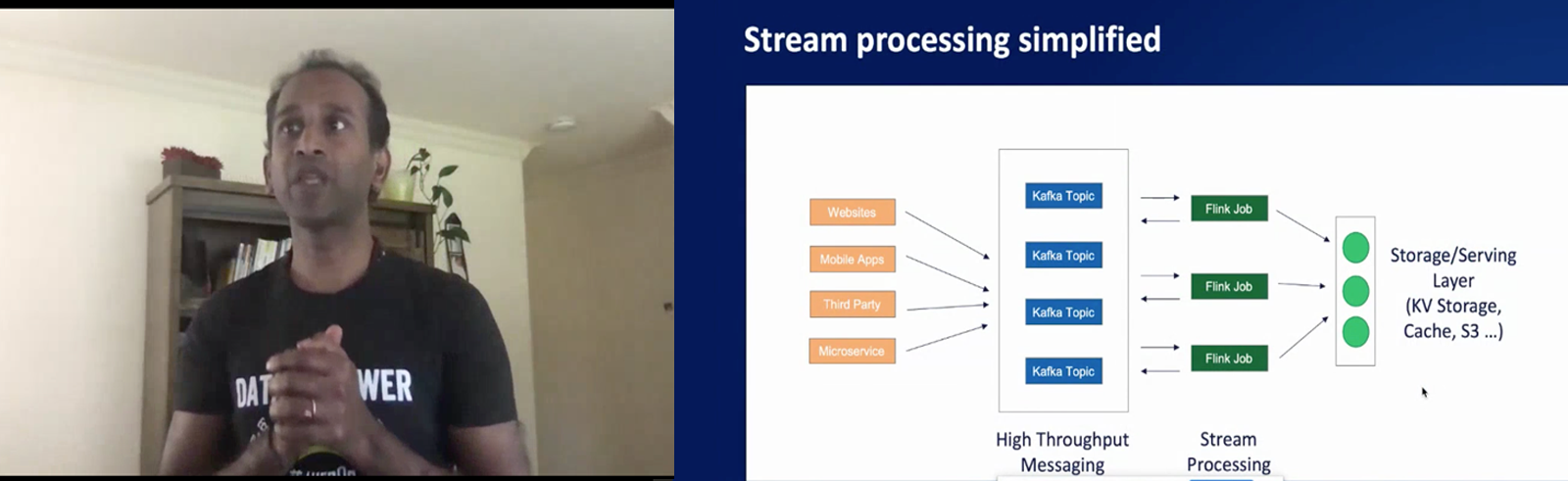

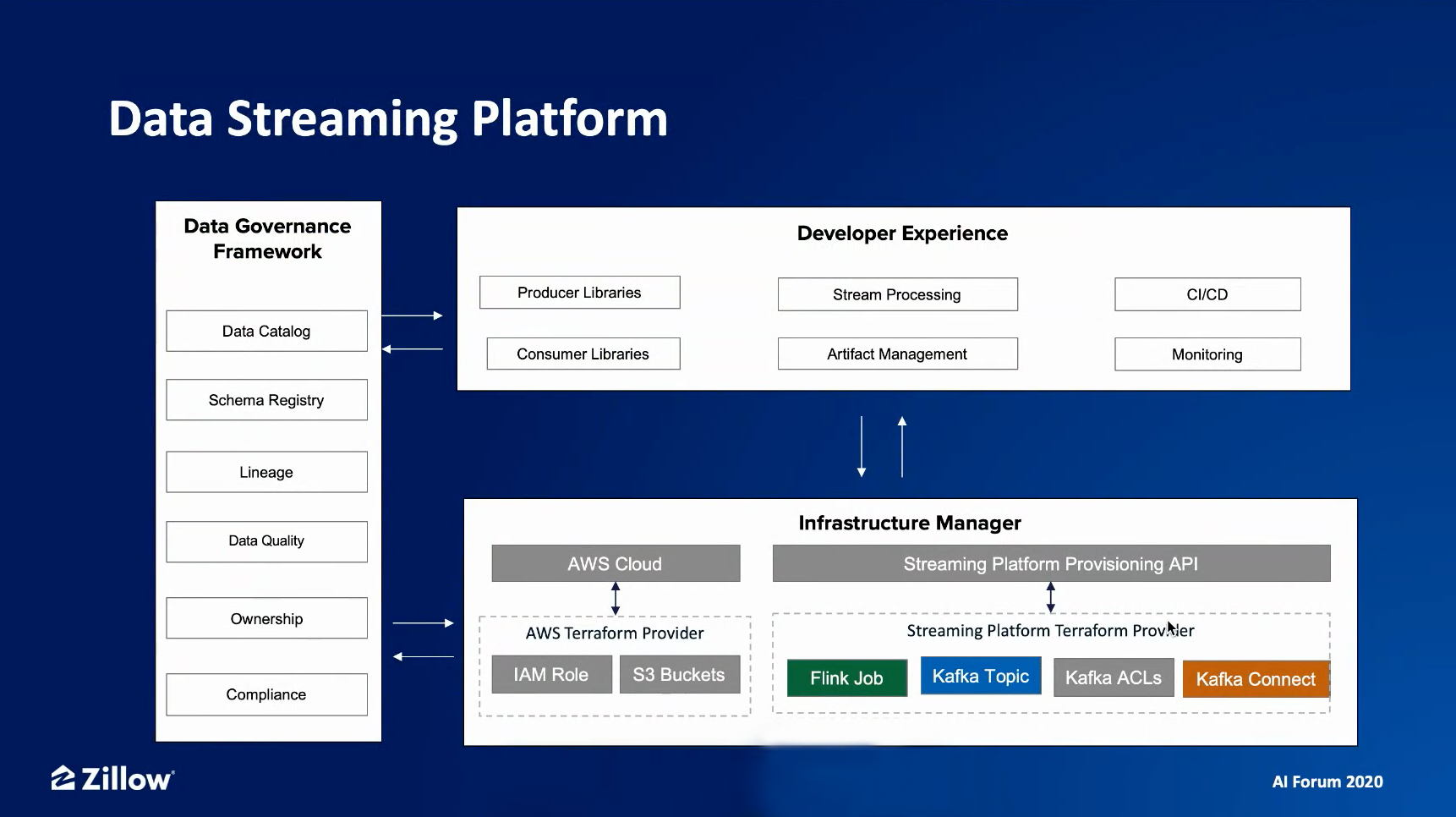

DSP is Zillow’s centralized effort to simplify all aspects of developing and running event streaming and stream processing workloads, so that application developers can rely on dependable data infrastructure and focus on providing a delightful experience to customers. Srinivas Paluri, Director of Data Engineering at ZG, walked us through its scope, architecture and future investments.

Srinivas explaining the crux of stream processing.

Today, Zillow maintains data on 110M+ homes and ~200M users. DSP supports use-cases like property data, customer data, Zestimates, personalized recommendations, Zillow Offers and Premier Agent – accounting for 200+ streams, 5B+ events/day and 12 TB+/day!

The key components of DSP, as identified from the architectural diagram above, are:

The most promising future investments planned for DSP include:

Together, these investments will be great additions for simplifying the data analysis side of any data-driven workflow (be it ML, dashboarding, experimentation or exploration) on the vast volume of data hosted by DSP: a well-planned next step towards increasing velocity.
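To put the DSP volumes quoted earlier into perspective, here’s a quick back-of-the-envelope calculation. It simply averages the quoted lower bounds (5B+ events/day and 12 TB+/day) over a day and assumes decimal terabytes; real traffic is bursty, so peak rates will sit well above these averages.

```python
# Back-of-the-envelope averages for DSP, using the lower bounds quoted in the talk.
# Assumption: decimal units (1 TB = 10**12 bytes) and a flat rate across the day.
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400

events_per_day = 5e9     # "5B+ events/day"
bytes_per_day = 12e12    # "12 TB+/day"

events_per_second = events_per_day / SECONDS_PER_DAY
megabytes_per_second = bytes_per_day / SECONDS_PER_DAY / 1e6
avg_event_size_kb = bytes_per_day / events_per_day / 1e3

print(f"~{events_per_second:,.0f} events/s")     # ~57,870 events/s
print(f"~{megabytes_per_second:,.0f} MB/s")      # ~139 MB/s
print(f"~{avg_event_size_kb:.1f} KB per event")  # ~2.4 KB per event
```

In other words, even on average DSP sustains tens of thousands of events per second, each event being a couple of kilobytes.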

Dr. Marcus Fontoura, a technical fellow at Microsoft, led the second talk of the forum with a discussion around adding intelligence to cloud platforms. For someone like me who hasn’t spent much time getting familiar with the back-end implementation of cloud platforms (like AWS, Azure and GCP), this was a great opportunity to dive deep into one of their key aspects. The potential of ML in business-facing applications was another big takeaway here, given that a good portion of ML education and research tends to center on user-facing applications.

Azure Resource Central (RC), a tool that uses ML and prediction-serving systems to improve resource allocation and management, now sits at the heart of Azure Compute. The system matters because, at Azure scale, each 1% improvement in resource management translates into millions of dollars in savings.

Marcus explaining the technical details behind capacity planning and allocations

The “Primer” shown in the picture above evaluates various scenarios in its decision-making process, such as the following:

However, running ML at such a large scale, especially in workflows that require multiple data-intensive iterations (as is typical when performance requirements are high), can quickly get expensive and defeat the very purpose of lowering costs. Thus, RC operates on a delayed observation model (models are generated periodically offline and then served online for real-time predictions), a sweet spot between performance and cost.
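To make the offline/online split concrete, here’s a minimal sketch, assuming a toy predictor that buckets a customer subscription’s average CPU utilization. The class `DelayedObservationPredictor`, the bucket edges and the fallback bucket are all hypothetical and are not a description of RC’s internals or API; the point is only that the expensive step (`retrain`) runs periodically over accumulated history, while the cheap step (`predict`) is a lookup against the latest snapshot.

```python
from bisect import bisect_right
from collections import defaultdict
from statistics import mean

# Hypothetical bucket edges for average CPU utilization (fraction of capacity).
THRESHOLDS = [0.25, 0.5, 0.75]  # buckets 0: <25%, 1: 25-50%, 2: 50-75%, 3: >=75%


def to_bucket(utilization: float) -> int:
    """Map an average utilization in [0, 1] to a bucket index (0-3)."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError(f"utilization out of range: {utilization}")
    return bisect_right(THRESHOLDS, utilization)


class DelayedObservationPredictor:
    """Toy offline-trained, online-served predictor (hypothetical, not RC's API)."""

    def __init__(self, default_bucket: int = 1):
        self.default_bucket = default_bucket
        self.history = defaultdict(list)  # subscription -> observed utilizations
        self.snapshot = {}                # subscription -> predicted bucket

    def observe(self, subscription: str, utilization: float) -> None:
        # New data is only recorded here; it reaches predictions at the next
        # offline retrain, hence "delayed observation".
        self.history[subscription].append(utilization)

    def retrain(self) -> None:
        # Expensive step, run periodically offline: rebuild the whole snapshot.
        self.snapshot = {
            sub: to_bucket(mean(values)) for sub, values in self.history.items()
        }

    def predict(self, subscription: str) -> int:
        # Cheap online step: a lookup, with a fallback for unseen subscriptions.
        return self.snapshot.get(subscription, self.default_bucket)


predictor = DelayedObservationPredictor()
predictor.observe("sub-a", 0.82)
predictor.observe("sub-a", 0.90)
print(predictor.predict("sub-a"))  # 1: not retrained yet, so the default is served
predictor.retrain()
print(predictor.predict("sub-a"))  # 3: the mean (0.86) falls in the >=75% bucket
```

The trade-off shows up directly: predictions can be slightly stale between retrains, but serving them costs only a dictionary lookup.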

In one of the final slides, Marcus revealed the consistently high (80+%) performance of Azure’s resource management models on several commonly used evaluation metrics for ML models. Since each 1% improvement is worth millions of dollars at Azure scale, performance this high on key prediction problems like the ones above translates into substantial cost savings. Innovations like this drive cloud platforms (and all their customers) towards intelligence!
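The talk didn’t spell out exactly which metrics were behind the 80+% figure, so as an assumption, here’s a sketch of three commonly used ones (accuracy, precision and recall) for a binary prediction, such as “will this VM be short-lived?”. The labels below are made up purely for illustration.

```python
def binary_metrics(actual: list[bool], predicted: list[bool]) -> dict[str, float]:
    """Accuracy, precision and recall for binary labels (True = positive class)."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("need two non-empty label lists of equal length")
    pairs = list(zip(actual, predicted))
    tp = sum(a and p for a, p in pairs)        # predicted positive, truly positive
    fp = sum((not a) and p for a, p in pairs)  # predicted positive, truly negative
    fn = sum(a and (not p) for a, p in pairs)  # positives the model missed
    correct = sum(a == p for a, p in pairs)
    return {
        "accuracy": correct / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


# Made-up labels for 10 VMs (True = "short-lived"); not real Azure data.
actual = [True, True, True, True, False, False, False, False, False, True]
predicted = [True, True, True, False, False, False, False, True, True, True]
print(binary_metrics(actual, predicted))
```

Here the toy model gets 7 of 10 right (accuracy 0.7), 4 of its 6 positive calls are correct (precision ≈ 0.67) and it catches 4 of the 5 true positives (recall 0.8), which shows why a single headline number rarely tells the whole story.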

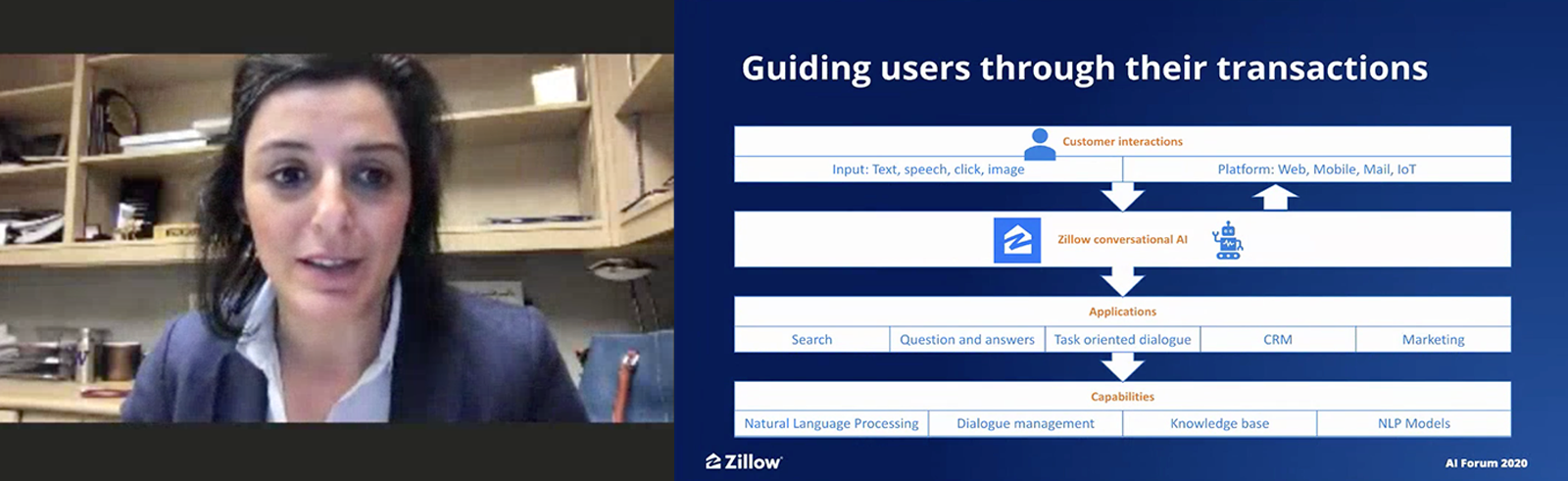

The final presentation of the forum was about conversational AI as it applies to real estate technology. As people grow more comfortable relying on smart assistants to streamline their day-to-day activities, users will inevitably begin expecting the same level of convenience from their favorite real estate platforms as well. ZG’s Conversational AI team leads the company’s effort on this front, with a portfolio of use cases scoped for the near future. I work on the Customer Experience Personalization team here, so I was super excited to see how other teams within ZG are using AI toward a similar goal.

Farah outlining the scope of Conversational AI at ZG.

Farah Abdallah Akoum, Principal Product Manager at ZG, began the presentation by pointing out that the goal is not to replace humans, but to assist us in unlocking life’s next chapter. Conversational AI capabilities like natural language processing, dialogue management and user behavior assessment are key to delivering on much of this promise.

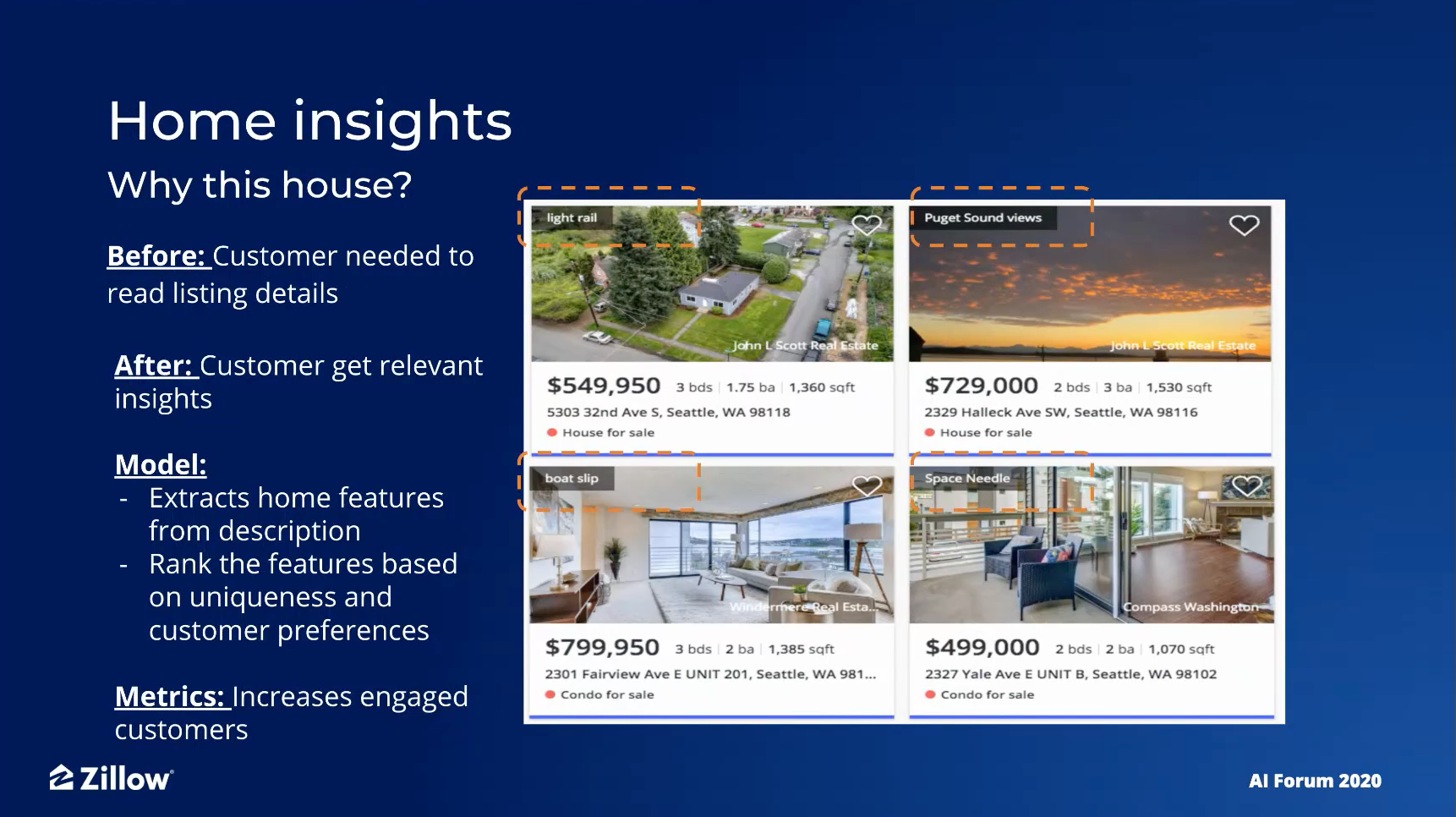

One exciting current use case in this charter is presenting home insights alongside the listing pictures, so that the top insights into a home are displayed right on the listing itself.

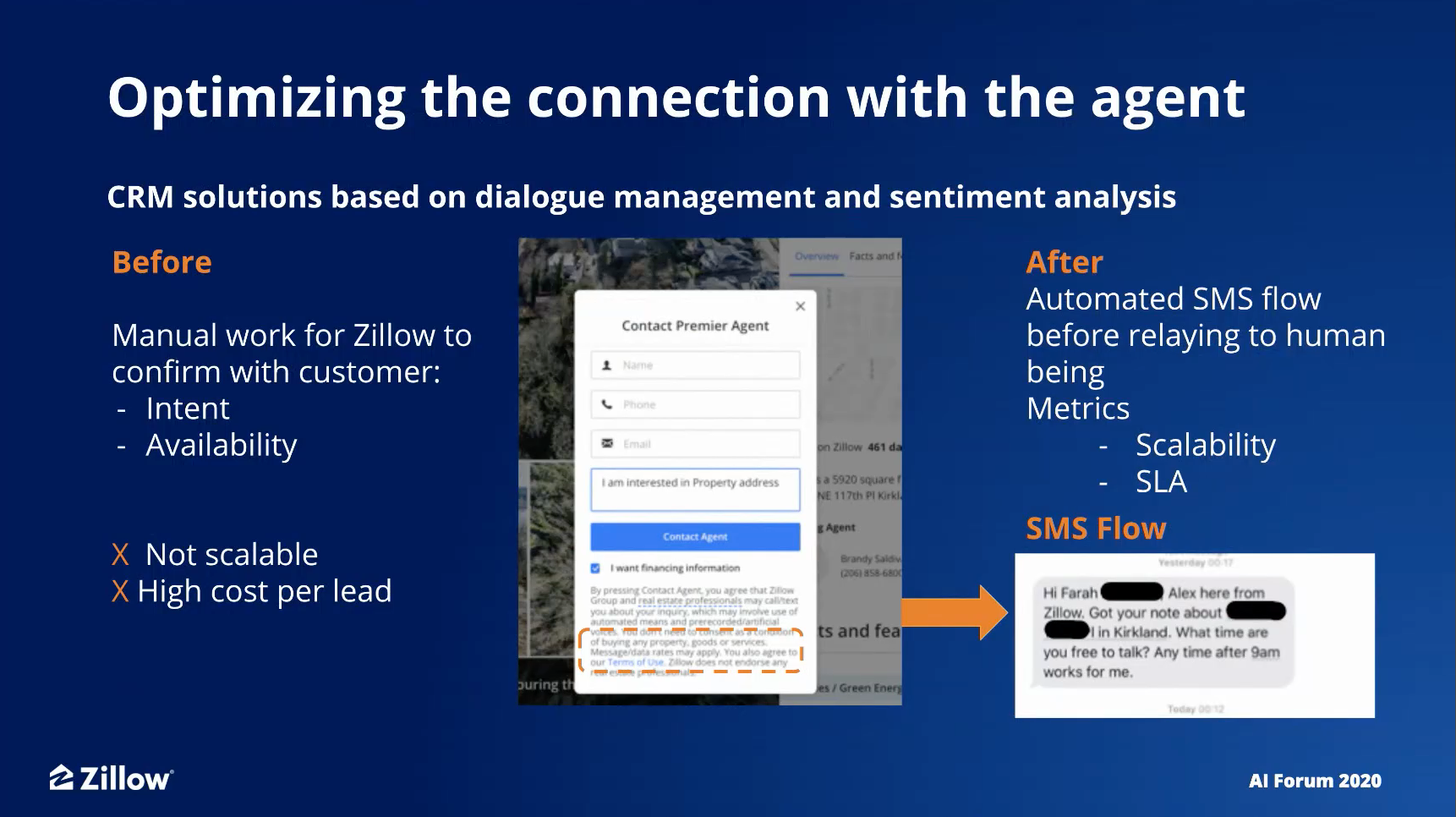

Another brilliant use case is optimizing the connection with the agent. As the picture above shows, the earlier mechanism, which relied on manual work to confirm intent and availability with customers, is neither cheap nor scalable. That is where the automated SMS flow comes into the picture.

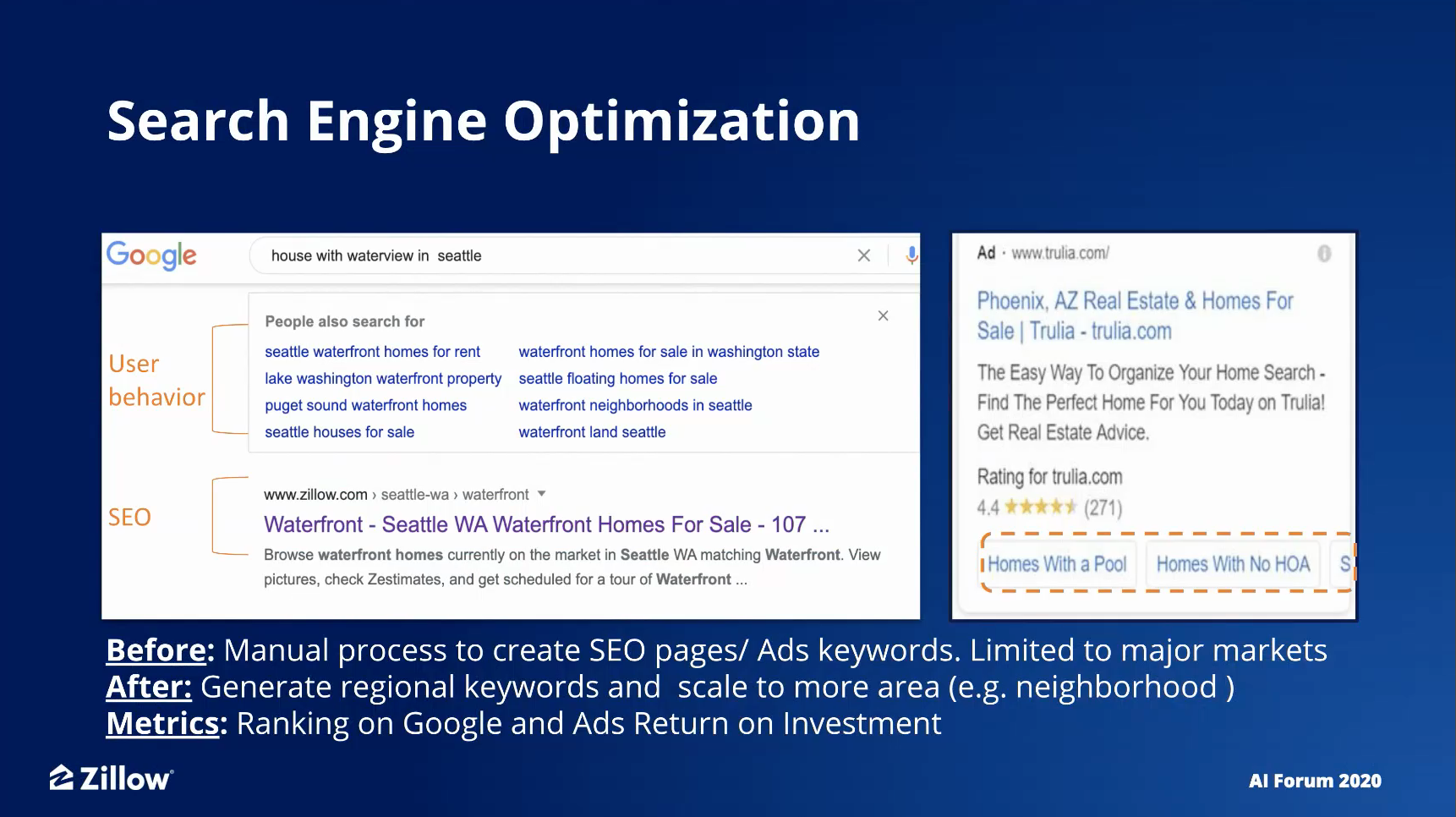

Providing additional filters in Google search results

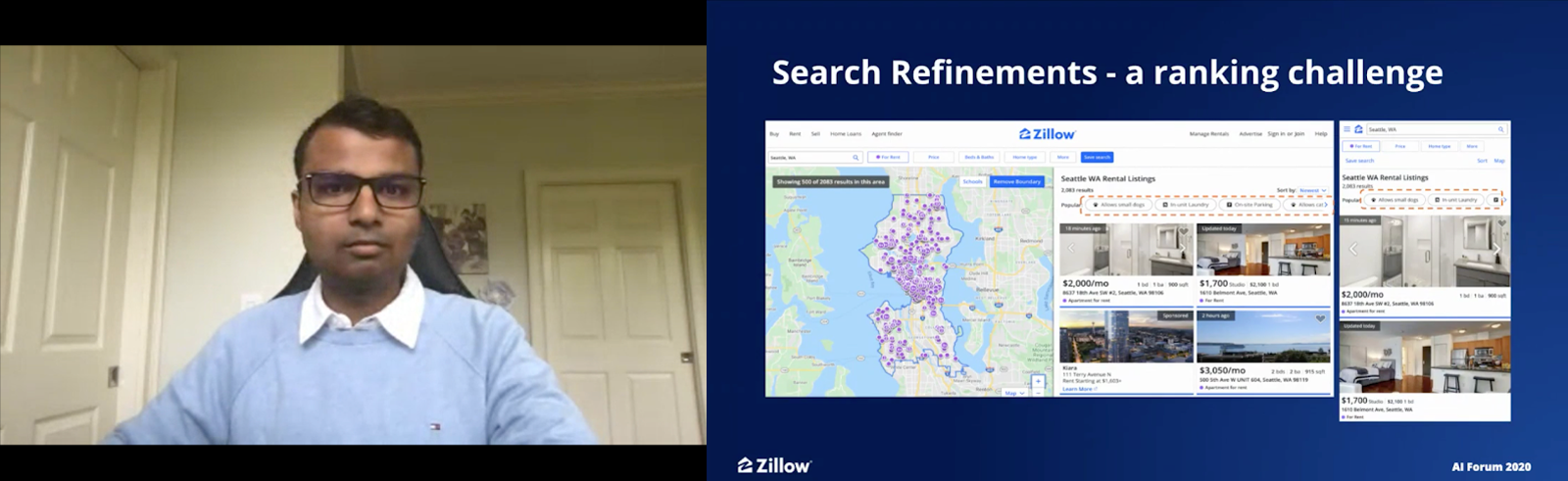

In the second half of this presentation, Tarun Agarwal, Senior Applied Scientist at ZG, spoke about how their team is tackling another critical use case in refining the customer experience – Search Refinement. With the massive number of homes available on the market, along with the multiple filters available on ZG platforms, it is easy for customers to either overlook the filters or feel overwhelmed, so the idea here is to surface contextual filters and help them in their search.

Tarun presenting the usage of contextual filters as buttons (highlighted in the top right, above the listings) on both web and mobile platforms.

Tarun outlined a variety of technical approaches to this problem, ranging from easy and cheap to hard and expensive. Each approach required filtering out traffic from bots and from users bouncing quickly between searches, as well as upweighting serious users, to produce effective results.
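The talk didn’t detail how bot and bounce traffic was detected or how serious users were upweighted, so the following is only a sketch under assumed heuristics. The `Session` fields, the thresholds (`MAX_SEARCHES_PER_MINUTE`, `MIN_DWELL_SECONDS`) and the weighting formula are all hypothetical; filtered sessions get weight 0, and signals of intent (saving homes, contacting an agent) push the weight above 1.

```python
from dataclasses import dataclass

# Hypothetical thresholds; real values would be tuned on actual traffic.
MAX_SEARCHES_PER_MINUTE = 30  # faster than this looks automated
MIN_DWELL_SECONDS = 5.0       # shorter average time per search looks like bouncing


@dataclass
class Session:
    user_agent: str
    searches: int
    duration_seconds: float
    saved_homes: int = 0
    contacted_agent: bool = False


def is_bot(session: Session) -> bool:
    """Crude check: self-declared crawlers or superhuman search rates."""
    if "bot" in session.user_agent.lower():
        return True
    minutes = max(session.duration_seconds / 60, 1e-9)
    return session.searches / minutes > MAX_SEARCHES_PER_MINUTE


def is_bounce(session: Session) -> bool:
    """User hopped between searches without dwelling on the results."""
    return session.duration_seconds / max(session.searches, 1) < MIN_DWELL_SECONDS


def session_weight(session: Session) -> float:
    """0 for filtered traffic; above 1 for sessions showing serious intent."""
    if is_bot(session) or is_bounce(session):
        return 0.0
    return 1.0 + 0.5 * session.saved_homes + (2.0 if session.contacted_agent else 0.0)


sessions = [
    Session("Googlebot/2.1", searches=400, duration_seconds=60),
    Session("Mozilla/5.0", searches=10, duration_seconds=30),  # 3 s per search
    Session("Mozilla/5.0", searches=6, duration_seconds=600, saved_homes=2),
    Session("Mozilla/5.0", searches=4, duration_seconds=900, contacted_agent=True),
]
print([session_weight(s) for s in sessions])  # [0.0, 0.0, 2.0, 3.0]
```

These weights can then scale each session’s contribution to whatever model or statistic ranks the filters, rather than dropping borderline traffic outright.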

The team currently uses BERT, RoBERTa and DistilBERT models for NLP and has already deployed this feature in 3 different cities. I was very impressed to see how these filters can be personalized based not only on user behavior, but also on market segmentation (by location, for example). In Seattle, for instance, popular filters are “allows pets” and “waterfront”, whereas in Phoenix, the top filter happens to be “Has air-conditioning”.
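As a simple illustration of segmenting by market, here’s a minimal sketch that sums weighted filter usage per city and returns the top filters. Plain weighted counting stands in here for the BERT-family models the team actually uses, and the function name and event tuples are hypothetical, made-up values rather than real Zillow data.

```python
from collections import Counter, defaultdict


def top_filters_by_market(events, k=2):
    """events: iterable of (city, filter_name, weight) tuples from search logs."""
    scores = defaultdict(Counter)
    for city, filter_name, weight in events:
        scores[city][filter_name] += weight  # weight 0 drops bot/bounce traffic
    return {city: [name for name, _ in c.most_common(k)] for city, c in scores.items()}


# Made-up, weighted events for illustration only.
events = [
    ("Seattle", "allows pets", 1.0), ("Seattle", "waterfront", 2.0),
    ("Seattle", "allows pets", 1.5), ("Seattle", "garage", 0.5),
    ("Seattle", "waterfront", 0.0),
    ("Phoenix", "has air-conditioning", 3.0), ("Phoenix", "pool", 1.0),
    ("Phoenix", "has air-conditioning", 1.0),
]
print(top_filters_by_market(events))
```

Keying the counts by city is what lets the same pipeline surface different filters for different markets.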

These solutions can also recommend filters like bathroom count and price based on the number of bedrooms searched for. For example, here are the recommended filters for bathroom count and price range (to buy) based on a bedroom-count search in Seattle:

Another awesome example of personalization is that recommendations don’t repeat within a user session, which really helps keep them fresh and hold user interest!
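The talk didn’t say how repetition is avoided; one simple way, sketched below under that assumption, is to remember which filters each session has already been shown and skip them on the next request. `SessionFilterRecommender` and its parameters are hypothetical.

```python
class SessionFilterRecommender:
    """Serves ranked filter suggestions without repeating any within a session."""

    def __init__(self, ranked_filters: list[str], per_request: int = 2):
        self.ranked_filters = ranked_filters  # e.g., a per-market ranking
        self.per_request = per_request
        self.shown: dict[str, set[str]] = {}  # session_id -> filters already shown

    def recommend(self, session_id: str) -> list[str]:
        seen = self.shown.setdefault(session_id, set())
        fresh = [f for f in self.ranked_filters if f not in seen][: self.per_request]
        seen.update(fresh)  # never show these again in this session
        return fresh


recommender = SessionFilterRecommender(["allows pets", "waterfront", "garage", "view"])
print(recommender.recommend("s1"))  # ['allows pets', 'waterfront']
print(recommender.recommend("s1"))  # ['garage', 'view']
print(recommender.recommend("s2"))  # ['allows pets', 'waterfront'] (new session)
```

Because state is keyed by session, a returning visitor in a new session starts fresh, while a single session steadily works down the ranked list.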

At my first Zillow AI Forum, I gained a tremendous amount of information and was able to ask questions directly about ongoing cutting-edge projects. I’m looking forward to reconnecting and learning more about what other industry leaders are working on at future Zillow AI Forum events.