
How the Zillow Indoor Dataset Facilitates Better 3D tours and Advances the Science of Indoor Spaces

When it comes to buying or renting a home, digital tools are becoming an increasingly popular way for people to identify what they want before they invest the time to physically tour a property.

In Zillow’s 2021 renter’s survey, more than half (58%) of renters agreed at least somewhat that 3D tours would help them get a better feel for the space than static photos, up from 55% just six months prior. Half (50%) agreed that they wished more listings had 3D tours available.

Buyers are enthusiastic about new tools as well. In another survey this past February, people were most likely to cite viewing a digital floor plan and a 3D virtual tour (both 79%) as tools they would like to use to help shop for homes.

Following these trends, Zillow’s 3D Home® interactive floor plans are becoming a more popular way to let home shoppers experience homes without being physically present. Never has this been more important than in recent times, when the ability to explore a home virtually has provided the added benefit of increased safety and security for buyer and seller alike.

The creation of such tools has been the mandate of Zillow’s Rich Media Experiences Team (RMX), whose focus is to provide home shoppers with a more immersive and authentic sense of the home. Production of 3D Home interactive floor plans creates large quantities of accurate data, which in turn are used by RMX to develop new models and methods to automate the creation process to increase efficiency, as well as for research and development of new products and experiences. In order to support similar advances in the academic community, RMX has released this data to the public in the form of the Zillow Indoor Dataset (ZInD).

In this blog post we will describe the preparation process, important attributes, and promising applications of ZInD.

The difference with Zillow’s approach

Unlike competitors that often rely on specialized 3D scanners, Zillow’s floor plans are created purely from image data. From a set of captured 360 panoramas, an interactive tour is created, providing the viewer with the ability to choose their pace, path, and viewpoint as they traverse the home.

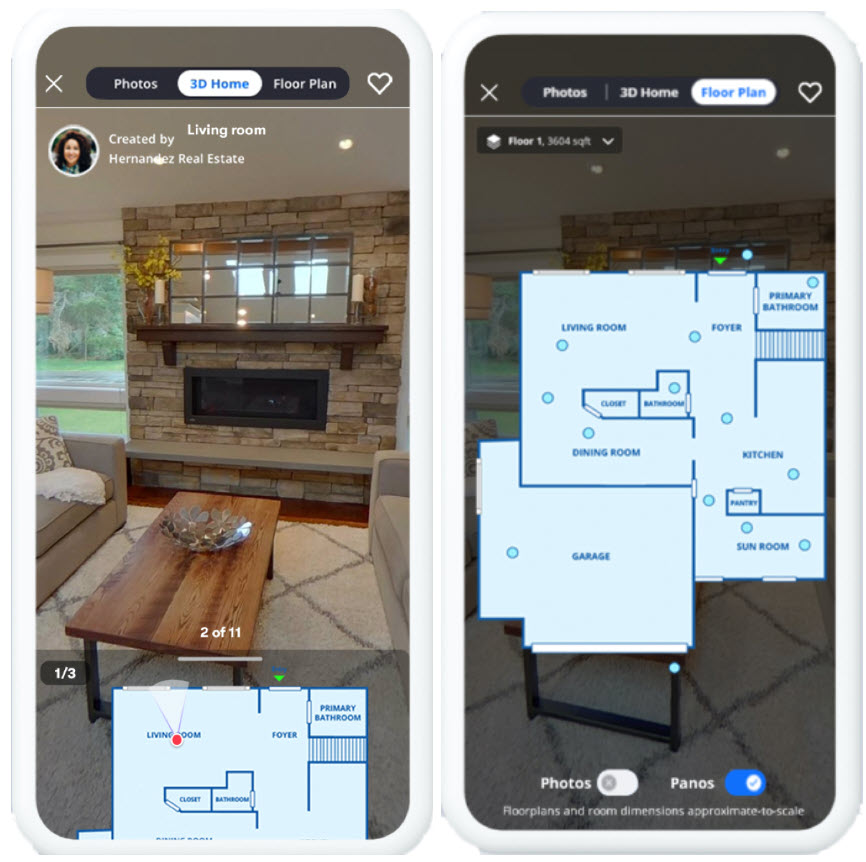

The 3D Home interactive floor plan makes use of the position of each panorama and localized pose of each listing photo, and displays the viewer’s current position and viewpoint. It simultaneously provides both local and global context, resulting in an experience that far surpasses traditional real estate imagery.

Figure 1. Zillow’s 3D Home Capture App.


The secret and potential of this approach come from ZInD. Derived from 3D Home interactive floor plan captures (the capture app is shown above in Figure 1), ZInD contains 360-degree panoramas from real homes, along with annotated room geometry and 2D and 3D floor plans.

The data contained in ZInD has been used internally for model development and method validation for some time. Now, for the first time, Zillow will be releasing this data to the research community to aid progress in room layout estimation, automated floor plan creation, and other tasks in the domain of indoor understanding. While a forthcoming paper recently accepted to the IEEE/CVF Conference on Computer Vision and Pattern Recognition will go into greater technical detail, we first wish to share the essentials of the dataset, as well as the mission motivating its release.

To better understand ZInD, it helps to first understand its source: our annotation pipeline.

Our Floor Plan Creation Pipeline

ZInD contains over 70,000 panoramas from over 1,500 unfurnished homes, from which over 2,500 floor plans have been created. To capture this data at scale, Zillow partnered with photographers in 20 U.S. cities coast to coast, who provided 360-degree panoramic images of each room on each floor.

Specific capture guidelines on lighting and the number of panoramas-per-space increase the quality of the derived floor plans. From the perspective of ZInD, increased data uniformity is an important aspect of the data intended for machine learning (ML) model development.

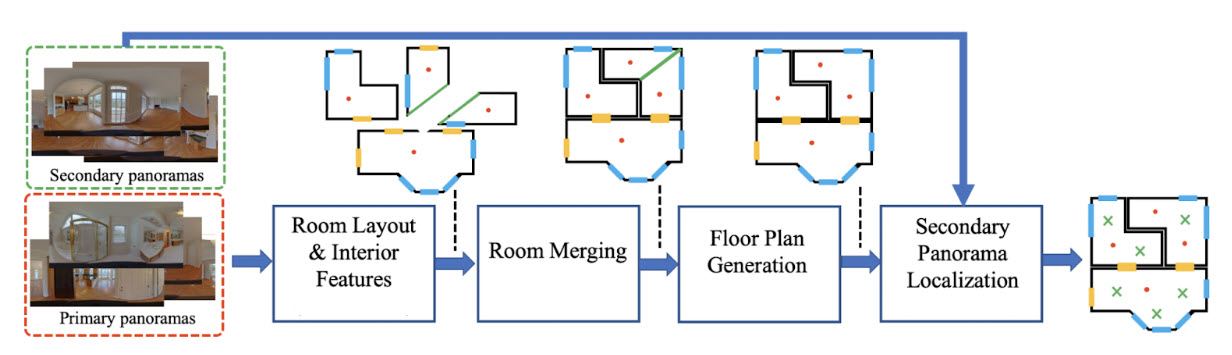

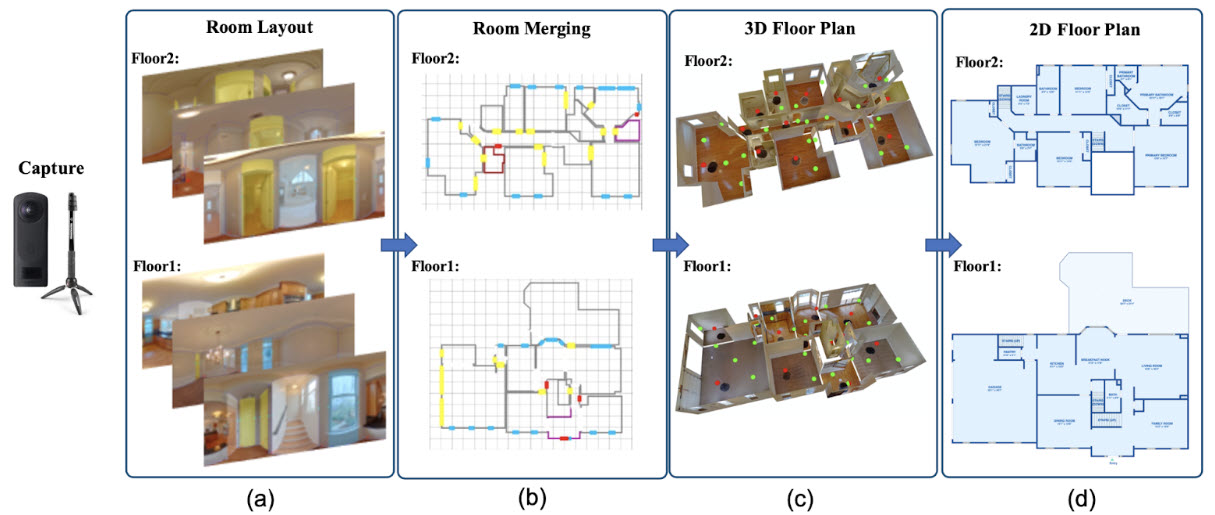

Internally, RMX has developed an extensive pipeline for floor plan creation by an in-house team of annotators assisted by automation. Figure 2 provides a high-level overview of our annotation pipeline, while Figure 3 contains visualizations of the content produced at each step of the pipeline; the individual steps are explained in the sections that follow. In total, we estimate that ZInD has taken over 1,500 hours of annotation work. To increase floor plan accuracy and creation efficiency, RMX is increasingly introducing automation to the pipeline through in-house ML model development.

Figure 3. Visualization of content produced at each step of the annotation pipeline. The Zillow Indoor Dataset provides visual data covering the real-world distribution of unfurnished homes, including primary 360° panoramas with annotated room layouts, windows and doors, merged rooms, secondary localized panoramas, and final 2D floor plans. From left to right: (a) captured RGB panoramas with layout, window, and door annotations, (b) merged layouts, (c) 3D textured mesh, where red dots indicate primary panoramas and green dots indicate annotated secondary panoramas, (d) final 2D floor plan “cleanup” annotation.


Annotation of Room Layout and Interior Features

After an initial image preprocessing step, which straightens each panorama so that the camera is upright, the room layout and interior features must be delineated. From the perspective of each panorama, an ML model estimates the floor-wall and ceiling-wall boundaries, as well as the wall-wall corners. Together, these are commonly referred to as the room shape or layout.

Further, the model jointly estimates the wall features: the windows, doors, and openings (WDO) of the room. An opening is a convenience of floor plan construction, used to split up large or complex spaces that often prove too difficult to annotate accurately from a single perspective. Our annotation team then verifies the predictions and corrects any errors. These layouts are completed in 3D by annotating the basic wall structure as well as the height of a single ceiling plane.
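To make the link between panorama boundaries and 3D layouts concrete, here is a minimal sketch of the standard geometry for an upright equirectangular panorama. It is not Zillow's implementation: the function names, the angle conventions (column 0 at longitude -π, row 0 at the zenith), and the default camera height of 1.0 are all assumptions made for illustration.

```python
import math

def pixel_to_angles(u, v, width, height):
    """Map an equirectangular pixel to (longitude, latitude) in radians.
    Assumed convention: the image center looks along +y, latitude is positive upward."""
    lon = (u / width) * 2.0 * math.pi - math.pi
    lat = math.pi / 2.0 - (v / height) * math.pi
    return lon, lat

def floor_pixel_to_xy(u, v, width, height, camera_height=1.0):
    """Project a floor-wall boundary pixel onto the floor plane (top-down x, y)."""
    lon, lat = pixel_to_angles(u, v, width, height)
    if lat >= 0:
        raise ValueError("pixel is at or above the horizon, so it cannot lie on the floor")
    # Horizontal distance from the camera to the floor point.
    dist = camera_height / math.tan(-lat)
    return dist * math.sin(lon), dist * math.cos(lon)

def ceiling_height(u, v_floor, v_ceiling, width, height, camera_height=1.0):
    """Estimate the height of a single ceiling plane from one image column."""
    _, lat_floor = pixel_to_angles(u, v_floor, width, height)
    _, lat_ceiling = pixel_to_angles(u, v_ceiling, width, height)
    dist = camera_height / math.tan(-lat_floor)
    # Height above the camera plus the camera's own height above the floor.
    return camera_height + dist * math.tan(lat_ceiling)
```

Because a single camera height fixes the scale, layouts recovered this way are only defined up to that assumed height unless a metric reference is available.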

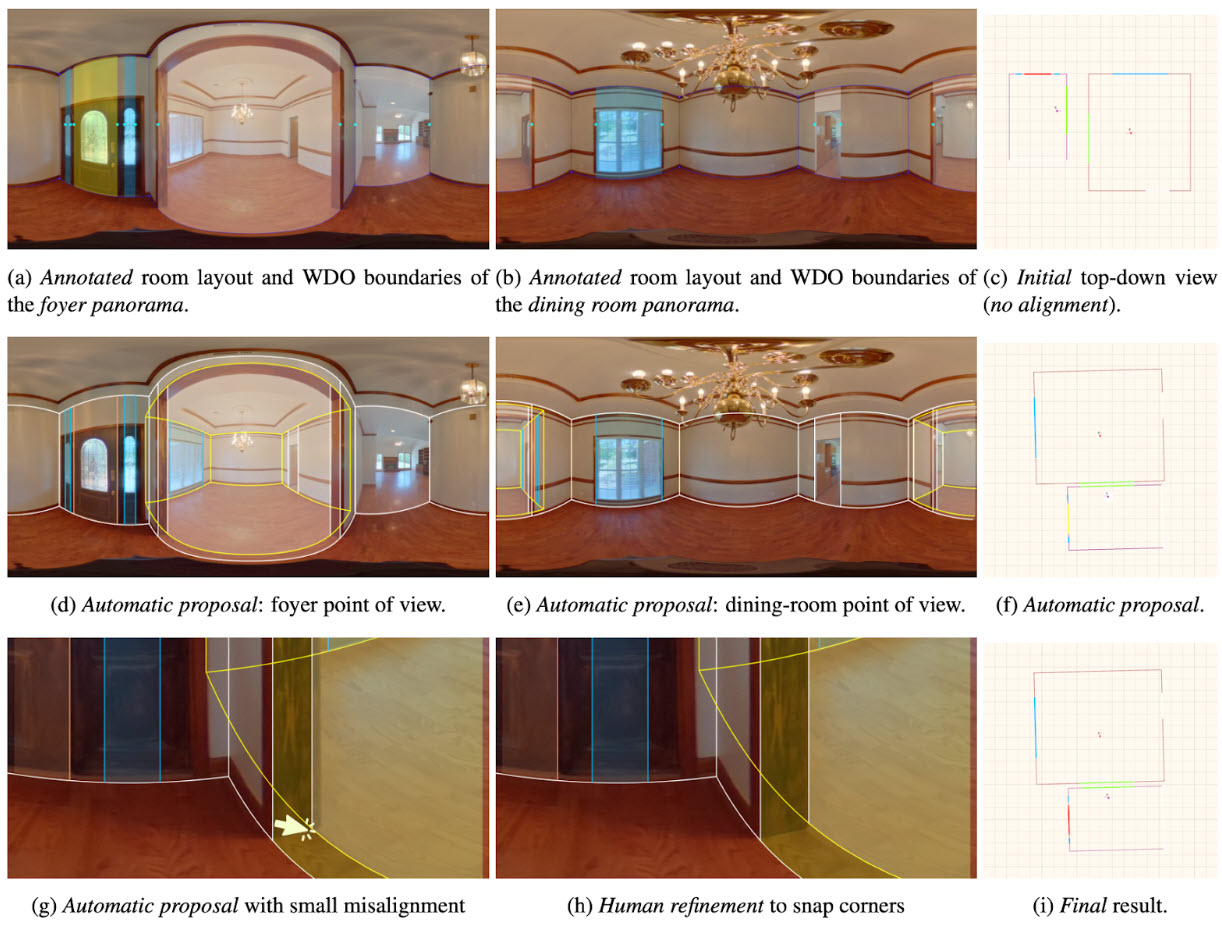

Room Merging

Following this room shape step, the separate rooms are then spatially placed together to complete the floor plan puzzle. This step is also assisted by an algorithm to suggest room-room spatial adjacencies by snapping doors and openings together while minimizing a cost function. A depiction of layout and wall feature annotation, along with room merging assisted by automatic proposals, is contained in Figure 4 below.
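The post does not specify the cost function used for merge proposals, so the following is only a hypothetical sketch of the idea: each pair of doors yields a candidate rigid transform that snaps one door onto the other, and candidates are ranked by a residual. The names `align_door` and `best_alignment`, and the squared-endpoint-distance cost, are assumptions.

```python
import math

def align_door(door_a, door_b):
    """Rigid 2D transform (angle, tx, ty) that snaps door_b onto door_a, plus a cost.
    Each door is a pair of (x, y) endpoints in its own room's top-down frame.
    The two rooms see the door from opposite sides, so endpoints match in reverse."""
    (a0, a1), (b0, b1) = door_a, door_b
    angle_a = math.atan2(a0[1] - a1[1], a0[0] - a1[0])  # direction a1 -> a0
    angle_b = math.atan2(b1[1] - b0[1], b1[0] - b0[0])  # direction b0 -> b1
    angle = angle_a - angle_b
    c, s = math.cos(angle), math.sin(angle)

    def rotate(p):
        return (c * p[0] - s * p[1], s * p[0] + c * p[1])

    rb0, rb1 = rotate(b0), rotate(b1)
    # Translate so that the door midpoints coincide.
    tx = (a0[0] + a1[0]) / 2 - (rb0[0] + rb1[0]) / 2
    ty = (a0[1] + a1[1]) / 2 - (rb0[1] + rb1[1]) / 2
    p0 = (rb0[0] + tx, rb0[1] + ty)
    p1 = (rb1[0] + tx, rb1[1] + ty)
    # Assumed cost: squared endpoint residuals (zero when door widths agree).
    cost = ((p0[0] - a1[0]) ** 2 + (p0[1] - a1[1]) ** 2
            + (p1[0] - a0[0]) ** 2 + (p1[1] - a0[1]) ** 2)
    return angle, tx, ty, cost

def best_alignment(doors_a, doors_b):
    """Return ((angle, tx, ty, cost), index_a, index_b) for the lowest-cost door pair."""
    candidates = [(align_door(da, db), i, j)
                  for i, da in enumerate(doors_a)
                  for j, db in enumerate(doors_b)]
    return min(candidates, key=lambda cand: cand[0][3])
```

A production cost would likely also penalize overlapping room interiors; this sketch ranks on door fit alone.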

Figure 4. Assisted Room Merging: optimized for high accuracy with high throughput. In the 1st row we show the annotated room layouts and WDO boundaries from the previous stage. In the last column we visualize the top-down layout projection before any proposed alignment. In the 2nd row, we demonstrate the highest-ranked automatic alignment that would be surfaced to our human annotators, when they select to merge those two panoramas. Given the current proposal, the layout of the other panorama is rendered with yellow outlines. Human annotators can further refine the room-to-room alignment, as shown in the 3rd row, by simply dragging the yellow outlines.


Cleanup and Final Floor Plan Generation

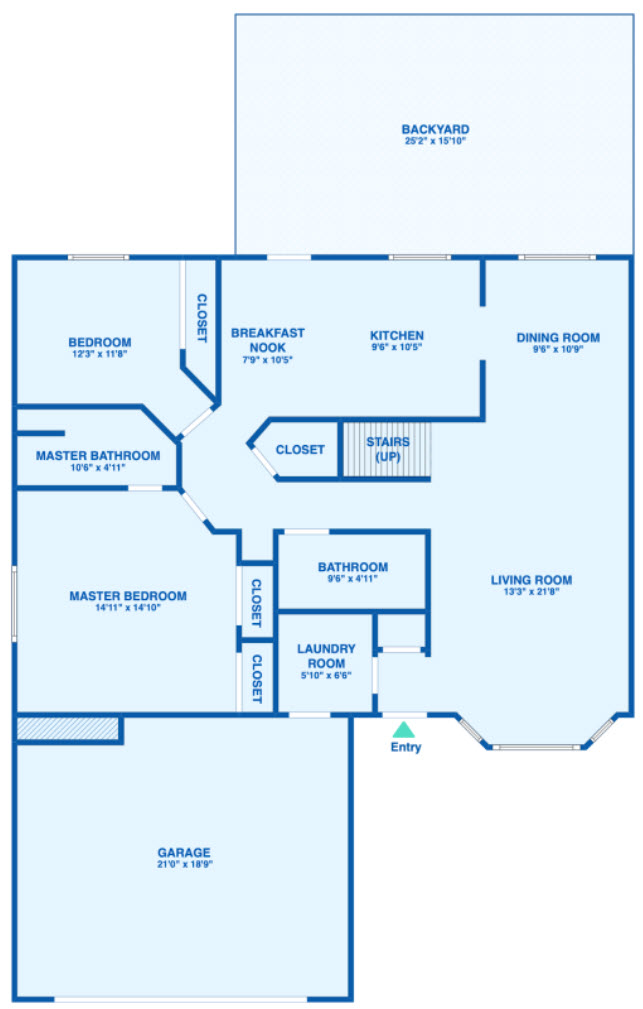

Figure 5. Example Zillow floor plan, as posted to Zillow.com.

To produce the final cleaned-up floor plan published on Zillow.com, annotators then complete the cleanup step. This step improves the quality of the final floor plan through correction of small misalignments between walls, cleanup of semantic labels for rooms, and addition of small spaces that may be missing, such as closets and stairs. An example floor plan is shown above in Figure 5.

Layout Completion

The partial room layouts that arise from the use of openings can be convenient for human annotation and floor plan creation, but may be less so for model development and general research purposes. As such, we subsequently merge these partial room layouts to produce complete layouts.

This process results in a single unified geometry for an open floor plan. To accomplish this, first a graph of partial room associations across openings is constructed. Partial room layouts are then repeatedly merged by binary addition of the floor segmentations, followed by contour extraction and polygon simplification.
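The first two stages of this process can be sketched as follows, under the assumptions that each partial room is already rasterized as a binary floor mask on a shared grid and that openings are given as pairs of room identifiers. The function names are hypothetical. Contour extraction and polygon simplification are omitted here; in practice they would typically be delegated to an image-processing library.

```python
def connected_groups(room_ids, openings):
    """Group partial rooms that are linked, directly or transitively, by openings."""
    parent = {room: room for room in room_ids}

    def find(room):
        # Union-find lookup with path halving.
        while parent[room] != room:
            parent[room] = parent[parent[room]]
            room = parent[room]
        return room

    for a, b in openings:
        parent[find(a)] = find(b)

    groups = {}
    for room in room_ids:
        groups.setdefault(find(room), []).append(room)
    return sorted(sorted(group) for group in groups.values())

def merge_masks(masks):
    """Binary addition (logical OR) of equally sized 0/1 floor masks."""
    rows, cols = len(masks[0]), len(masks[0][0])
    return [[int(any(mask[r][c] for mask in masks)) for c in range(cols)]
            for r in range(rows)]

def complete_layouts(masks_by_room, openings):
    """Return one merged floor mask per group of rooms joined by openings."""
    return {tuple(group): merge_masks([masks_by_room[room] for room in group])
            for group in connected_groups(list(masks_by_room), openings)}
```

For example, a kitchen and dining area joined by an opening collapse into a single mask, while a bedroom with no openings keeps its own.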

Secondary Panorama Localization

Of all panoramas captured, annotators choose a subset for floor plan creation. These are referred to as the primary panoramas. There are often more panoramas captured than are used to create the floor plan, so to better facilitate different types of research, these additional secondary panoramas are subsequently localized on the created floor plan. This step is also assisted by automation, which suggests an initial pose (i.e., position and orientation) of each panorama and then submits it for subsequent annotator verification.
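As an illustration of what a pose means here, the sketch below predicts the image column where a floor plan corner should appear in a localized panorama. It assumes a 2D pose made of a position and a heading (the counterclockwise angle of the panorama's center column relative to the floor plan's +y axis) and an equirectangular image; these conventions and the function name are assumptions, not the dataset's documented format.

```python
import math

def corner_to_column(corner_xy, position_xy, heading, image_width):
    """Return the panorama column at which a floor plan corner should appear."""
    dx = corner_xy[0] - position_xy[0]
    dy = corner_xy[1] - position_xy[1]
    c, s = math.cos(heading), math.sin(heading)
    # Rotate the offset by -heading to express it in the panorama's local frame.
    local_x = c * dx + s * dy
    local_y = -s * dx + c * dy
    # Longitude is measured from the local +y axis (the image center column).
    lon = math.atan2(local_x, local_y)
    return ((lon + math.pi) / (2.0 * math.pi) * image_width) % image_width
```

An annotator's verification step can be thought of as checking that columns predicted this way line up with the wall corners visible in the image.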

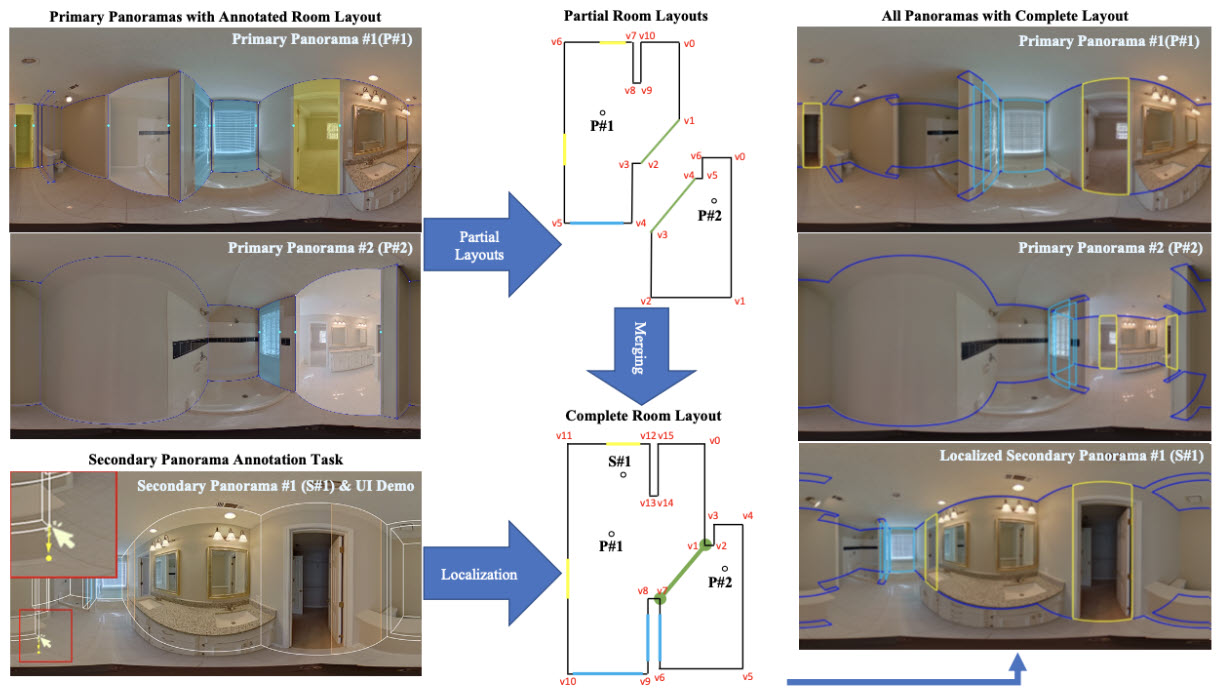

The combination of complete geometry and secondary panorama localizations produces whole layouts shared by multiple panoramic views. We believe that this increased density of coverage, as well as the increased spatial association between panoramas, will increase the utility of our dataset within the research community. The process of both layout completion and secondary panorama annotation is displayed in Figure 6 below.

Figure 6. Complete room layout and secondary panorama localization. Top/Center: process of computing complete room layouts of complex spaces, based on merged annotations of partial layouts from each panorama’s point of view. Bottom left: the secondary panorama localization task, where annotators can achieve pixel level accuracy by dragging the corners of an existing 3D layout (inherited from a reference, primary panorama) to snap to 2D image corners. Right: the complete layout from the point of view of all 3 panoramas localized on the same shared layout.


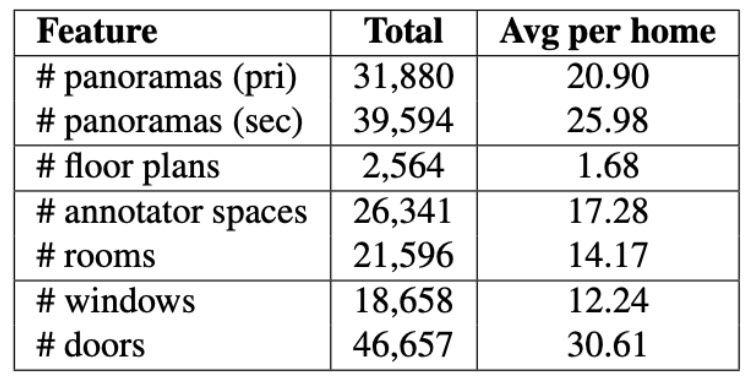

The statistics of primary and secondary panoramas, as well as floor plans, rooms, and wall features.


The capture and floor plan creation process is visualized in Figure 2. A visualization of the data produced for ZInD is in Figure 3. The primary and secondary panoramas are red and green, respectively, in column c.

Final Dataset Post-Processing

To reduce privacy concerns, we have automatically detected and removed any panoramas that contain:

(1) People or photographs of people, to avoid sharing personal information (PI) or personally identifiable information (PII).

(2) Significant outdoor views, to avoid sharing street views or neighboring properties.

ZInD: A reflection of the real world distribution of home environments

Many datasets in the domain of indoor understanding capture only a subset of the real distribution of layout geometry, and often a simpler one at that. By providing the merged geometry of entire homes, ZInD contains tremendous diversity, and serves as a rich platform for research and development.

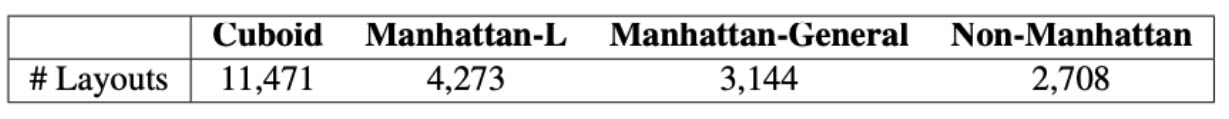

Commonly, existing datasets focus heavily on simple room layout types, such as cuboids and L-shaped rooms. However, real world homes include much more complex rooms, with bay windows, breakfast nooks, and open floor plans that seamlessly transition from kitchen to dining room and living room.

In the research literature, indoor environments consisting entirely of 90° angles are commonly called “Manhattan,” with cuboids and L-shaped rooms as common examples. In contrast, ZInD goes beyond these more common geometric types, including larger general Manhattan layouts, as well as non-Manhattan layouts such as bay-windowed rooms.
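As a small illustration of these categories, the sketch below classifies a top-down room polygon by checking whether every corner turns by 90°. The function names and the 1° tolerance are assumptions, and the polygon is assumed to contain no redundant collinear vertices.

```python
import math

def is_manhattan(polygon, tol_deg=1.0):
    """True if every corner of a closed 2D polygon turns by 90 degrees (within tol_deg)."""
    n = len(polygon)
    for i in range(n):
        prev_pt, pt, next_pt = polygon[i - 1], polygon[i], polygon[(i + 1) % n]
        v1 = (pt[0] - prev_pt[0], pt[1] - prev_pt[1])
        v2 = (next_pt[0] - pt[0], next_pt[1] - pt[1])
        cross = v1[0] * v2[1] - v1[1] * v2[0]
        dot = v1[0] * v2[0] + v1[1] * v2[1]
        # Signed turning angle between consecutive wall segments.
        turn = math.degrees(math.atan2(cross, dot))
        if abs(abs(turn) - 90.0) > tol_deg:
            return False
    return True

def is_cuboid(polygon, tol_deg=1.0):
    """A cuboid room is a Manhattan layout with exactly four corners."""
    return len(polygon) == 4 and is_manhattan(polygon, tol_deg)

rectangle = [(0, 0), (4, 0), (4, 3), (0, 3)]
l_shape = [(0, 0), (2, 0), (2, 1), (1, 1), (1, 2), (0, 2)]
bay_window = [(0, 0), (4, 0), (4, 3), (3, 4), (1, 4), (0, 3)]
```

Here `rectangle` is a cuboid, `l_shape` is Manhattan but not a cuboid, and `bay_window` is non-Manhattan because of its 45° turns.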

ZInD statistics for cuboid vs. Manhattan style rooms.


While typical cuboid and L-shaped layouts are sampled primarily from bedrooms and offices, the whole home environments provide a significant increase in diversity. Below you will find a sample of annotated layouts and wall features for bathrooms, bedrooms, dining rooms, kitchens, living rooms, and garages.

Figure 7. Semantic room type visualizations. Wall layouts, doors, and windows are delineated in dark blue, yellow, and light blue, respectively. Additional interior geometry, such as pillars and intermediate walls, is delineated in orange. Note the significant diversity in ZInD’s annotated geometry and room types.


Promising Applications

An important task in the domain of indoor understanding is automatic room layout estimation. As explained above, RMX leverages an ML model to aid our annotators in this task. Importantly, the competency of an ML-model depends directly on the data on which it was trained. If the model has only seen simple cuboid and L-shaped rooms during training, it may be too much to ask of the model to accurately estimate larger and more complex layouts.

Further, such rooms may prove too difficult to completely estimate from a single panoramic perspective. By providing a diverse spectrum of real-world indoor environments, with increased density of coverage in larger spaces, we believe that ZInD will support research on new methods to accurately estimate these complex spaces.

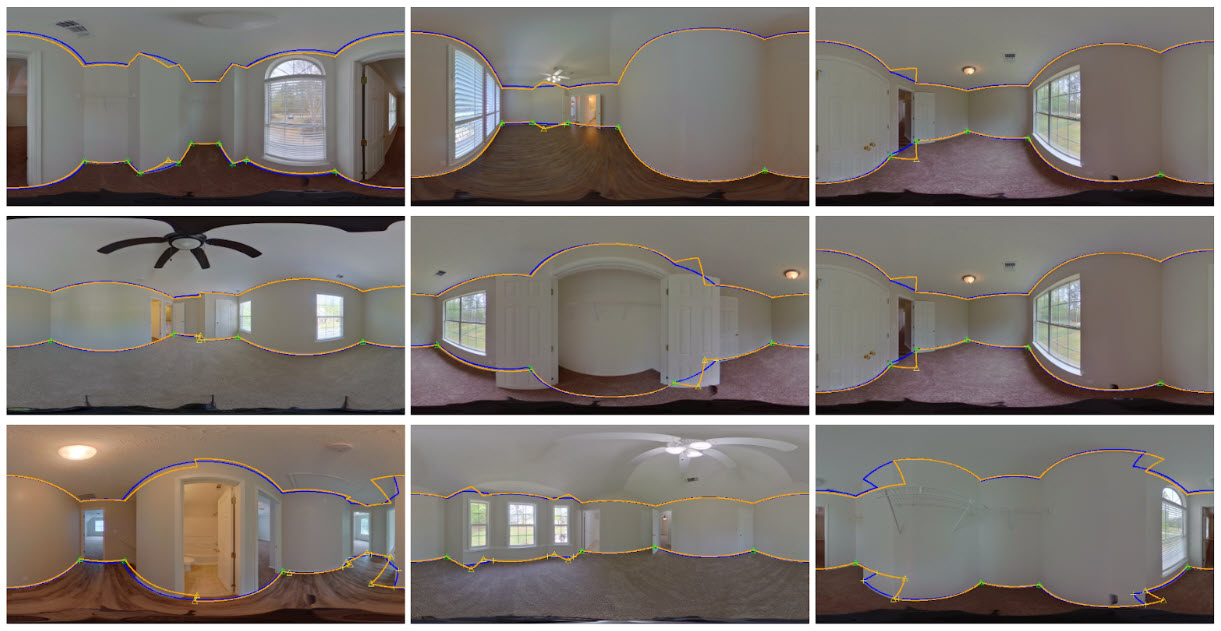

To demonstrate the utility of ZInD for the layout estimation task, we trained a current state-of-the-art model, HorizonNet, on our data. Below are examples of the predictions of the trained model on simple Manhattan layouts.

Figure 8. Estimated room layouts for simple Manhattan rooms. The predicted layout is displayed in orange with corners denoted by triangles. The GT layout is in blue with corners denoted by crosses. Corners closer than 1% of the image width are considered accurate, and are highlighted in green.
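The corner criterion in the caption can be expressed as a short check. This sketch assumes corners are given as (column, row) pixel coordinates, that each ground-truth corner is compared against its nearest predicted corner, and that horizontal distance wraps around the panorama seam; the matching strategy and the function name are assumptions rather than the evaluation protocol used for the figures.

```python
import math

def corner_accuracy(gt_corners, pred_corners, image_width, threshold_ratio=0.01):
    """For each ground-truth corner, report whether some prediction lies
    closer than threshold_ratio * image_width (1% of the width by default)."""
    threshold = threshold_ratio * image_width
    results = []
    for gx, gy in gt_corners:
        best = math.inf
        for px, py in pred_corners:
            dx = abs(gx - px)
            # A panorama wraps horizontally, so take the shorter way around.
            dx = min(dx, image_width - dx)
            best = min(best, math.hypot(dx, gy - py))
        results.append(best < threshold)
    return results
```

With a 1024-pixel-wide image, the threshold is 10.24 pixels.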


Predictions on more complex non-Manhattan layouts are shown below. HorizonNet is only capable of estimating Manhattan layouts; as shown, its predictions are unable to capture non-90° angles. This demonstrates the need for further research on accurately estimating such layouts, for which ZInD provides a useful platform. A recent work on layout estimation for more complex geometry, AtlantaNet, relied on fresh annotations of 100 images in order to explore this space. By comparison, ZInD provides a significantly larger testbed for such research.

Figure 9. Estimated room layouts for non-Manhattan rooms. The predicted layout is displayed in orange with corners denoted by triangles. The GT layout is in blue with corners denoted by crosses. Corners closer than 1% of the image width are considered accurate, and are highlighted in green.


Conclusion

ZInD has come together as a result of the joint effort of a diverse group of engineers, scientists, and annotators. In addition, we were able to take advantage of another Zillow Group company, Bridge Interactive, to distribute ZInD to the academic community. Bridge’s flexible API and data licensing platform made sharing over 30GB of data much easier and more secure. In particular, we would like to acknowledge the contributions and team effort of our fellow members of the RMX Applied Science team, without which this release and paper submission would not have come together. In providing ZInD to the community, we hope to stimulate innovation in real estate technology, in order to further Zillow’s goal of giving people the power to unlock life’s next chapter.

Citations:

Steve Cruz, Will Hutchcroft, Yuguang Li, Naji Khosravan, Ivaylo Boyadzhiev, and Sing Bing Kang. Zillow Indoor Dataset: Annotated floor plans with 360° panoramas and 3D room layouts. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2021.

Cheng Sun, Chi-Wei Hsiao, Min Sun, and Hwann-Tzong Chen. HorizonNet: Learning room layout with 1D representation and pano stretch data augmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2019.

Giovanni Pintore, Marco Agus, and Enrico Gobbetti. AtlantaNet: Inferring the 3D indoor layout from a single 360° image beyond the Manhattan world assumption. In Proceedings of the European Conference on Computer Vision (ECCV), 2020.
